gregorio

Headphoneus Supremus

- Joined: Feb 14, 2008


[1] I thought YT, Spotify, and other streaming services already had their own loudness standards, like -12 or -16 LUFS or something. Automatic levelling would occur when viewers played stuff off them. .... I for one hate it when I'm listening to a YT playlist and I constantly have to adjust volume after every 1-2 songs, or get blasted out by a commercial (commercials were the worst thing ever to happen to YT!)

[2] And how would standardized loudness "kill" streamers?

1. You are contradicting yourself: if YT had implemented a loudness standard, then you would not "constantly have to adjust volume after every 1-2 songs"; you would never have to adjust the volume, because it would be the same across all the songs and commercials! YT does reportedly have its own loudness standard (which equates to about -13 LUFS), but obviously it hasn't been applied to everything, and exactly how and when it is applied isn't known. Also, when loudness normalisation is applied, it would have to be applied during ingest, not playback.

2. Most home/amateur videos are made with the mics built into the video recording device (digital camera, mobile phone, etc.) and then posted with little or no audio processing, which almost invariably results in a relatively high noise floor. Applying loudness normalisation automatically on ingest would require adding a considerable amount of compression and make-up gain, which would considerably raise the noise floor and render the dialogue (and/or other wanted sound) of most home videos unintelligible. The other option would be for the public to get the necessary audio tools, learn how to use them and apply their own loudness normalisation before posting, but that's a lot of time and effort; they just want to post their home videos/vlogs, not learn audio post. Neither option would be acceptable, and therefore if loudness normalisation were imposed, video distribution platforms which rely on content supplied by consumers would be "killed"!
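A rough worked example of the point above, as a Python sketch with made-up numbers: a phone video measured at -30 LUFS, with a sharp transient peaking at -6 dBTP and a noise floor at -60 dBFS, brought up to the roughly -13 LUFS figure mentioned above with plain static gain. The -1 dBTP peak ceiling is an assumption for illustration, not a documented YouTube parameter.

```python
def normalise(measured_lufs, true_peak_dbtp, noise_floor_dbfs,
              target_lufs=-13.0, peak_ceiling_dbtp=-1.0):
    """Static-gain loudness normalisation and its side effects (all values in dB).

    target_lufs and peak_ceiling_dbtp are illustrative assumptions.
    """
    gain = target_lufs - measured_lufs          # gain needed to reach the target
    new_peak = true_peak_dbtp + gain            # peaks move up by the same amount...
    return {
        "gain_db": gain,
        "noise_floor_dbfs": noise_floor_dbfs + gain,  # ...and so does the noise floor
        "peak_dbtp": new_peak,
        # How far the peaks would overshoot the ceiling: this is what compression/
        # limiting would have to remove before the make-up gain could be applied.
        "overshoot_db": max(0.0, new_peak - peak_ceiling_dbtp),
    }


result = normalise(measured_lufs=-30.0, true_peak_dbtp=-6.0, noise_floor_dbfs=-60.0)
for key, value in result.items():
    print(f"{key:17s} {value:+.1f}")
```

With these invented numbers the required gain is +17 dB, so the noise floor rises from -60 to -43 dBFS and the transient would overshoot the assumed ceiling by 12 dB, which is exactly the compression/limiting (and further noise-floor increase) described above.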

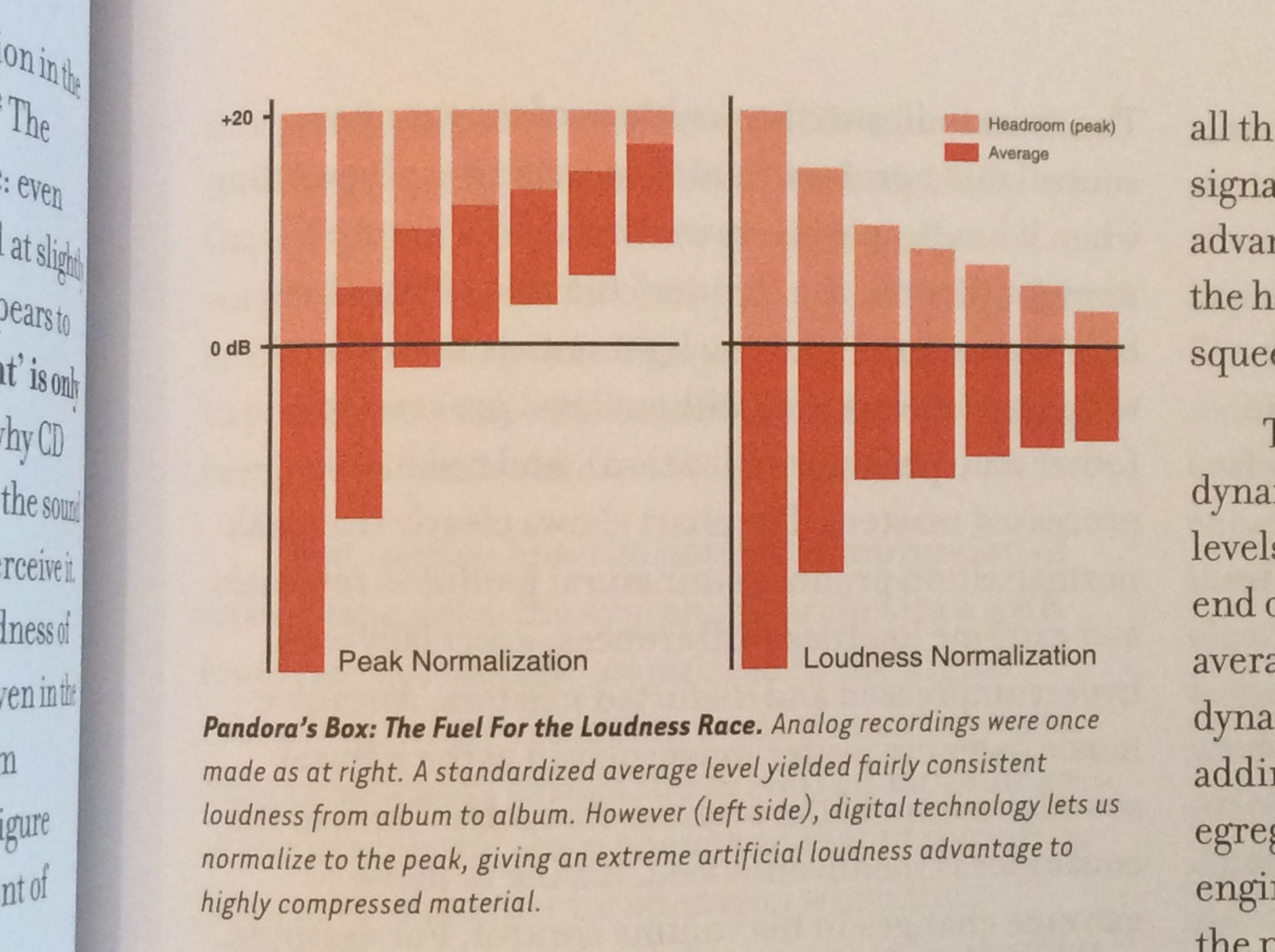

1. Clearly, just shaking someone's hand does not mean you have any understanding of what they're saying!![1] To borrow this diagram, from the book of a mastering engineer who I have shaken the hand of and hold in higher regard than most - sorry Greg, sorry Ludwig, and Lord-Alge)

[2] The right-hand diagram represents (in principle) the loudness situation during the pre-digital, analog VU-meter era (basically from the birth of metering up to the early 1980s)

[2a] ... and also the ideal we must aim for and return to.

[3] Imagine that '-24' figure and the associated red line in the right-hand version represent the zero on any typical VU meter manufactured between WW2 and the '80s.

[3a] That zero, and the 2dB above and below it on a typical VU, is the sweet spot where both producers and deliverers of broadcast content kept the needles on their recorders, mixers, and master output faders, effectively guaranteeing loudness consistency 90% of the time...

[4] The left diagram, of course, represents the path digital, since its advent ...

2. No, it does NOT; you actually have this backwards! The left-hand diagram represents the loudness situation of both the VU (analogue) meter era and the digital era before loudness normalisation. The right-hand diagram represents the era of loudness normalisation, which cannot be achieved with a VU or any other analogue meter and can only be done in the digital era!

2a. So the peak (or "quasi-peak" in the case of a VU and other QPPMs) level specifications, as opposed to the loudness specifications, are the "ideal" that we must aim to return to? The "ideal" that encouraged huge amounts of compression and other processing, which allows the audio to sound louder without increasing the peak (or quasi-peak) levels? This is the exact opposite of what you've previously incessantly argued, that the "ideal" is less compression and more dynamic range!

3. I have great difficulty "imagining that", because a typical VU or any other type of meter manufactured between WW2 and the '80s cannot measure loudness, ONLY quasi-peak levels!

3a. Firstly, if producers kept the needles within plus or minus 2 dB of the VU zero point, the end result would have no more than 4 dB of dynamic range, which in most cases would require pretty heavy compression to maintain. Secondly, it would absolutely NOT "guarantee loudness consistency 90% of the time": a VU meter does not measure loudness, it measures quasi-peak levels, and it's mainly due to this fact that TV commercials were so much louder than the programmes!!
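To illustrate why level-based (rather than loudness-based) metering let commercials get so much louder, here is a small Python sketch, not a real meter: two signals that read identically on a peak-reading meter but have very different average power. The mean-square level is only a crude stand-in for loudness (no frequency weighting, no gating), and the 10x hard clip is an assumed stand-in for very heavy compression/limiting.

```python
import math


def peak_dbfs(x):
    """Highest absolute sample value, in dB relative to full scale."""
    return 20 * math.log10(max(abs(v) for v in x))


def rms_dbfs(x):
    """Mean-square level in dB relative to a full-scale square wave (crude loudness proxy)."""
    return 10 * math.log10(sum(v * v for v in x) / len(x))


FS = 48000
# One second of a full-scale 1 kHz sine...
sine = [math.sin(2 * math.pi * 1000 * n / FS) for n in range(FS)]
# ...and the same sine pushed 10x harder and hard-clipped at full scale.
squashed = [max(-1.0, min(1.0, 10 * v)) for v in sine]

for name, sig in (("sine", sine), ("squashed", squashed)):
    print(f"{name:9s} peak {peak_dbfs(sig):6.2f} dBFS   rms {rms_dbfs(sig):6.2f} dB")
```

Both signals peak at full scale, yet the squashed one carries close to 3 dB more average power; a meter that only watches the peaks cannot tell them apart.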

Additionally, I presume you're talking about Bob Katz? Bob is an extremely knowledgeable, experienced and highly respected professional music mastering engineer; however, he's not a film or TV re-recording mixer. The charts you posted are not correct (or you are posting them out of context). Cinema movies do not have any loudness normalisation, but if you take theatrical mixes and measure their loudness, they should on average come out at around -32 LUFS, not -24 LUFS. However, this is a rough average and varies significantly: a big action/war blockbuster will obviously measure higher than, say, a gentle period drama (which is likely to be nearer -35 LUFS). This is one of the most compelling current arguments against applying loudness normalisation to cinema movies: filmmakers do not want a drama to be the same loudness as the loudest action-packed war films.

4. And finally, NO, it doesn't!

Meters with a loudness-ballistic (vs. peak-based) algorithm should be mandatory on pocket digital recorders, digital mixing consoles and digital processors. A peak indicator could be provided, which remains green when peaks are below a specific threshold, turns yellow, then orange, and then red at the onset of clipping. 0 itself already has several suggested standards, ranging from -16 LUFS down to -24.

With this post and the post I've responded to above, you are demonstrating that you have absolutely no idea what loudness normalisation actually is, or how it works! Loudness normalisation/specification is based on a series of short-term measurements of the signal after a filter (which roughly corresponds to a loudness contour) has been applied; those measurements are gated and then averaged over the duration of the programme to arrive at the LUFS (or, in the case of the ATSC, LKFS) value.

Your suggestion is therefore clearly nonsense! What "loudness-ballistic algorithm" could there possibly be that accurately measures what hasn't happened and hasn't been recorded yet, and then creates an average which includes those measurements? There are only two possible ways of achieving a particular LUFS/LKFS value: A. do so in audio post, after all the recording has been completed and can therefore be measured/analysed; or B. provide a running total of what the LUFS is so far, highly restrict the peak levels and dynamic range of what will be recorded and then, with relatively minor real-time adjustments, maintain the LUFS value. It should be obvious that "B" is the only option for the live broadcast of sports events, but it requires "setting the rack" (as you put it) just so, and "easing off" the compression would make it more difficult or impossible to comply with the LUFS spec/legal requirement.
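To make the measurement described above concrete, here is a compact, unoptimised Python sketch of a BS.1770-style integrated loudness measurement for a single channel at 48 kHz: K-weighting (two biquads, using the 48 kHz coefficients published in ITU-R BS.1770), 400 ms blocks with 75 % overlap, an absolute gate at -70 LUFS and a relative gate 10 LU below. It is a sketch under those assumptions, not a compliant meter (no true-peak, no multichannel weighting, no resampling). The `running_loudness` helper (a name introduced here for illustration) mimics option B: the "so far" figure can only describe what has already been recorded.

```python
import math

# K-weighting for fs = 48 kHz: BS.1770 stage 1 (HF shelf) and stage 2 ("RLB" high-pass).
SHELF_B = (1.53512485958697, -2.69169618940638, 1.19839281085285)
SHELF_A = (1.0, -1.69065929318241, 0.73248077421585)
HPF_B = (1.0, -2.0, 1.0)
HPF_A = (1.0, -1.99004745483398, 0.99007225036621)

ABSOLUTE_GATE_LUFS = -70.0
RELATIVE_GATE_LU = -10.0


def biquad(x, b, a):
    """Direct-form I second-order IIR filter (a[0] is assumed to be 1)."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for s in x:
        y = b[0] * s + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1, y2, y1 = x1, s, y1, y
        out.append(y)
    return out


def block_powers(samples, fs=48000):
    """Mean square of the K-weighted signal in 400 ms blocks with 75 % overlap."""
    if fs != 48000:
        raise ValueError("the filter coefficients above are only valid at 48 kHz")
    k = biquad(biquad(samples, SHELF_B, SHELF_A), HPF_B, HPF_A)
    size, hop = int(0.4 * fs), int(0.1 * fs)
    return [sum(v * v for v in k[i:i + size]) / size
            for i in range(0, len(k) - size + 1, hop)]


def to_lufs(power):
    """Convert a mean-square block power to loudness (single channel, weight 1.0)."""
    return -0.691 + 10 * math.log10(power) if power > 0 else float("-inf")


def gated_loudness(powers):
    """Absolute gate, then relative gate, then average the surviving block powers."""
    above_abs = [p for p in powers if to_lufs(p) > ABSOLUTE_GATE_LUFS]
    if not above_abs:
        return float("-inf")
    threshold = to_lufs(sum(above_abs) / len(above_abs)) + RELATIVE_GATE_LU
    kept = [p for p in above_abs if to_lufs(p) > threshold]
    return to_lufs(sum(kept) / len(kept))


def integrated_loudness(samples, fs=48000):
    """Integrated loudness of a whole mono programme: only known once it has ended."""
    return gated_loudness(block_powers(samples, fs))


def running_loudness(samples, fs=48000):
    """Loudness 'so far' after each block, i.e. a running total as in option B."""
    powers = block_powers(samples, fs)
    return [gated_loudness(powers[:n]) for n in range(1, len(powers) + 1)]


if __name__ == "__main__":
    fs = 48000
    tone = [math.sin(2 * math.pi * 997 * n / fs) for n in range(3 * fs)]
    quiet = [0.1 * v for v in tone]
    print(f"full-scale tone: {integrated_loudness(tone):.2f} LUFS")
    print(f"tone at -20 dB:  {integrated_loudness(quiet):.2f} LUFS")
    so_far = running_loudness(quiet + tone)
    print("running total:", [round(v, 1) for v in so_far[::10]])
```

For a full-scale 997 Hz sine the result should land close to -3.01 LUFS, the reference figure BS.1770 gives for a full-scale 1 kHz sine on one channel. For a quiet passage followed by a loud one, the running value keeps climbing until the loud part has actually been measured.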

[1] I can’t believe all of the different sound options in all of my devices. I never appreciated it until I started digging through menus the last few days. It seems so complex for a normal person to get their arms around. Not easily understood or well documented.

[2] Someone just tell me what button to press already. Right now I have most everything on every device set to “auto” and I just hope it’s all playing nice together.

1. It is complicated for the consumer and under the hood, it's far more complicated.

2. What button to press depends on what you want. "Auto" will typically give you what the average consumer wants: the average consumer with a decent/average sound system in a decent/average listening environment. If it's late at night and you don't want to disturb the neighbours/children, choosing a higher compression scheme, often called "night mode" or something similar (and a lower volume), will probably give you what you want. If, on the other hand, you've got a better than average consumer system and listening environment, don't have to worry about disturbing anyone else and want the full dynamic range actually contained in the Dolby datastream, then you should turn off the dialogue normalisation and compression and turn up your volume to suit. Incidentally, "off" for dialogue normalisation is normally represented as "-31", and you should check this after you start the film/programme, as some AVRs will override your settings with the settings in the Dolby metadata. Lastly, you'll have to experiment: even if this is what you want, depending on the individual mix it might not always give better results, plus exactly what your AVR is doing, and what it calls these parameters, is often not easy to fathom!
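The dialogue normalisation arithmetic is simple enough to show directly. A minimal Python sketch, assuming the usual Dolby convention that the decoder attenuates the output so that dialogue sits at the -31 reference, which is why a value of -31 amounts to "off" (the function name is hypothetical):

```python
def dialnorm_attenuation_db(dialnorm: int) -> int:
    """Attenuation (dB) a decoder applies for a dialnorm value in the range -31 .. -1.

    Dialogue is brought down to the -31 reference, so dialnorm -31 means no
    attenuation, i.e. dialogue normalisation is effectively "off".
    """
    if not -31 <= dialnorm <= -1:
        raise ValueError("dialnorm must be between -31 and -1")
    return 31 + dialnorm


for value in (-31, -27, -24):
    print(f"dialnorm {value}: {dialnorm_attenuation_db(value)} dB of attenuation")
```

So a programme flagged at -24 is turned down by 7 dB on playback, while one flagged at -31 is left alone.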

[1] I have never seen it in frequency response curves (always exceptions of course, but I mean in the general sense) of speakers/playback equipment/recorded material and why would it be necessary in the first place - [1a] ie why would studios include that emphasis and then the consumer industry design around it?

[2] Is there a specific standard around it for studios and consumer manufacturers to follow?

[2a] What about mastering destined for vinyl, a format which inherently emphasises the mid-bass due to the inaccuracies of that medium? Wouldn't it make it worse?

1. Virtually no consumer speakers are full range (20 Hz to 20 kHz); even very good ones tend to start rolling off around 50-60 Hz and roll off quite severely by about 40 Hz, and of course most consumers don't own "very good" speakers. Many consumer speaker manufacturers slightly boost the bass frequencies to try to compensate for bass their speakers can't reproduce at all, or can't reproduce powerfully enough. Also, many consumers add more bass with their tone controls. I'm sure you know consumers who do this, but how many consumers do you know who reduce the bass on their two-speaker stereo systems?

1a. No, it's the other way around. Mastering is the process of taking the studio mix and adjusting it to sound as intended when played back by the consumer. If the consumer (or their equipment) is adding bass, then the mastering process needs to take this into account, for example by likewise adding bass to the monitoring system/environment.
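The compensation logic above can be shown with a toy Python model in which every curve is just a gain in dB per frequency. The curve values are invented for illustration, and real EQ is of course not a set of independent per-frequency gains:

```python
# Hypothetical monitoring coloration (dB added at each frequency in Hz), e.g. a
# consumer-style bass lift deliberately built into a mastering room's monitoring.
bass_lift = {40: 2.0, 80: 3.0, 160: 1.5, 1000: 0.0, 8000: 0.0}
flat = {f: 0.0 for f in bass_lift}


def eq_that_sounds_flat(monitoring):
    """EQ the engineer ends up applying so the programme sounds flat on this monitoring."""
    return {f: -gain for f, gain in monitoring.items()}


def heard(master_eq, playback):
    """Response a listener hears: the master's EQ plus their own system's coloration."""
    return {f: master_eq[f] + playback[f] for f in master_eq}


master = eq_that_sounds_flat(bass_lift)
print("bass-lifted consumer system:", heard(master, bass_lift))
print("flat consumer system:       ", heard(master, flat))
```

A master made on bass-lifted monitoring contains less bass, so it sounds flat on a consumer system with a similar lift and somewhat bass-light on a genuinely flat one.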

2. No, there are no standards for music studios, whether recording or mastering studios. There are for film sound mixing studios, but they are only applicable to cinemas, not home consumers, and home consumers typically don't get the theatrical mix anyway. Many mastering studios are flat, but then some/much of the mastering process occurs at very high playback levels, which increases the perceived amount of bass anyway. However, some mastering studios do have a "house curve", and many recording/mix studios do.

2a. No, it would make it better. If the mastering studio had a "house curve" with a raised mid bass, then the master would contain less mid-bass and compensate for the "emphasised mid-bass" on vinyl. I've never mastered specifically for vinyl though, so I can't say exactly what they did/how they did it. Mastering specifically for vinyl pretty much died out many years ago, most vinyl today (and for the last couple of decades or so) is pressed from the same master as the digital releases and if the RIAA curve is applied at all, it's typically done by the pressing plant rather than the mastering engineer.
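For reference, the RIAA curve mentioned above is defined by three time constants (3180 µs, 318 µs and 75 µs). A minimal Python sketch of the playback (de-emphasis) response relative to 1 kHz; the cutting curve is simply its inverse, and the later IEC amendment's extra subsonic roll-off is not modelled:

```python
import math

# RIAA time constants, in seconds.
T1, T2, T3 = 3180e-6, 318e-6, 75e-6


def riaa_playback_db(f_hz: float) -> float:
    """RIAA playback (de-emphasis) gain at f_hz, in dB relative to 1 kHz."""
    def magnitude(f):
        w = 2 * math.pi * f
        # |1 + jwT2| / (|1 + jwT1| * |1 + jwT3|)
        return math.hypot(1, w * T2) / (math.hypot(1, w * T1) * math.hypot(1, w * T3))
    return 20 * math.log10(magnitude(f_hz) / magnitude(1000.0))


for f in (20, 100, 1000, 10000, 20000):
    print(f"{f:>6} Hz: {riaa_playback_db(f):+6.2f} dB")
```

This gives roughly +19.3 dB at 20 Hz and roughly -19.6 dB at 20 kHz; the cutting side applies the mirror image, so the two cancel when both are in the chain.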

Since upgrading my home theater receiver to a new Denon, I've also noticed it has settings that say "cinema" that can slightly roll off treble: with "Cinema EQ" their reasoning is that there can be higher treble in a home system vs center channel theater that's behind a screen.

Yes, the treble can effectively be too high in a theatrical mix ... due to the use of the X-curve. Your system would therefore, in theory, also have to roll off the treble (as per the X-curve) in order to get a perceptually flat response. Of course, this assumes you are reproducing the theatrical mix, which typically isn't the case; typically you're reproducing a Blu-ray or TV mix (which is not made with the X-curve in the monitoring chain). Unfortunately, it can be difficult to know, as even films/versions listed as "Original Theatrical Cut" or "Mix" quite commonly aren't. Personally, I'd leave that setting "off". The X-curve is actually quite a complex thing, in that it depends on the HF perception of reproduced sound in a large room (such as a cinema), and therefore even if you are listening to a theatrical mix, trying to compensate for the X-curve might not be appropriate in your relatively small room, hence why I said "in theory" above. If you're interested in more than my oversimplified assertion/explanation, try this article; it's short, easy to understand and accurate.
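For a feel of the shape involved, here is a deliberately simplified Python sketch of the X-curve's high-frequency target: flat up to 2 kHz, then falling at 3 dB per octave. It only covers frequencies up to 10 kHz and ignores the standard's low-frequency limits, its behaviour above 10 kHz, tolerances and room-size adjustments; it is not a description of what Denon's "Cinema EQ" actually does.

```python
import math


def x_curve_target_db(f_hz: float) -> float:
    """Simplified X-curve target in dB: 0 dB up to 2 kHz, then -3 dB per octave.

    Low-frequency limits are ignored (returns 0 dB below 2 kHz) and nothing
    above 10 kHz is modelled.
    """
    if f_hz <= 0 or f_hz > 10_000:
        raise ValueError("this sketch only models 0 < f <= 10 kHz")
    if f_hz <= 2_000:
        return 0.0
    return -3.0 * math.log2(f_hz / 2_000)


for f in (1_000, 2_000, 4_000, 8_000, 10_000):
    print(f"{f:>6} Hz: {x_curve_target_db(f):+.1f} dB")
```

Even in this simplified form the roll-off reaches about -7 dB by 10 kHz, which is why playing a home mix through an X-curve-style compensation can sound noticeably dull.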

G
