Alternatively, you could skip the HPEQ process entirely if you had something like a Sonarworks custom headphone equalization curve, with your PC running JRiver or Foobar and the SW plugin feeding the SVS Realizer as your source.

Suuuuuuuuuure, you could spend an extra $100 (or however much Sonarworks costs, does it have in-app purchases too?) over what the A16 costs, and get a result that is not customized for YOUR head, and limit yourself to only using a PC as your input source...

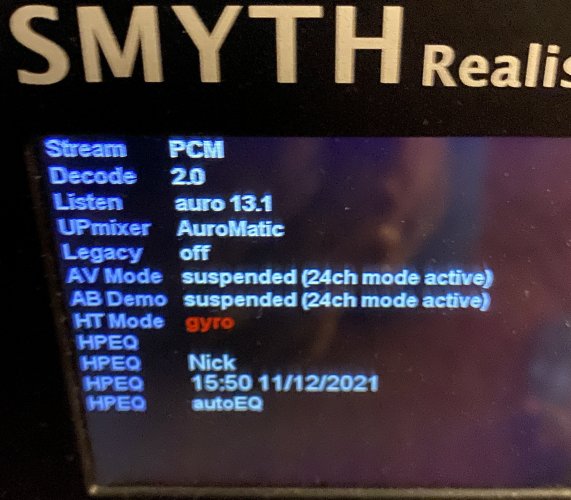

But I'll spend the 10-15 seconds it takes to do a personalized HPEQ with any headphone (including ones Sonarworks doesn't support) and with any sound source in my house. Sonarworks is great – if you don't have an A16. The A16 does everything Sonarworks does, and does it better, because it's personalized to your head and your headphone sample.