Smyth Research Realiser A16

zdjh22

Head-Fier

> Maybe Virtuoso deserves its own topic?

I think so. Under the Computer Audio forum? Or is there a better spot?

I tried to get discussions started over on Quadraphonic Quad but without much success. I could always copy those posts to a new topic here at Head-Fi.

zdjh22

Head-Fier

I just saw this over at QQ (“When the eyes move, the eardrums move too”).

https://www.sciencedaily.com/releases/2018/01/180123171437.htm

Sanctuary

100+ Head-Fier

That's pretty interesting, and I wonder whether it might have any influence on perceived localization, for example when characters are talking at either side of the screen. Personally, whenever I see someone talking at the side of the screen, the sound seems to come from where they are, even though my center speaker is directly in front of me. That could be due to eardrum movement, or it could just be my brain trying to reconcile where the sound is actually coming from with where I expect it to be.

It might be more of the latter in my case, because I sometimes have some odd reactions with spatial audio that I'm not sure should be happening. When I am directly facing a screen, the localization seems correct, but when I get up with my headphones on and do a 180-degree turn, the center speaker suddenly sounds like it's coming from directly behind me, as a real speaker would, even though I don't use head tracking. It's as if I have an internal head tracker. Even when I am sitting in my chair facing the screen with my eyes closed, the center still sounds like it's coming from directly in front of me. If I turn around, though, I know where the virtual center should be in relation to the TV, and something in my head tricks me into thinking it's still there.

When I went to the studio to get my first PRIR last year, John and I noticed some "funny stuff" with how I perceived the virtual speaker placements: leaning forward or backward seemed to actually affect where I heard them. Again, this was without head tracking.

Here's a much more in-depth article on the topic from the previous post:

https://www.pnas.org/doi/10.1073/pnas.1717948115

This might also be why some people have trouble with the A8/A16 illusion when real speakers are not present to reinforce it, and why so many seem to benefit from the head tracker, or swear that it's necessary, with either unit.

zdjh22

Head-Fier

Very interesting post!

With both the built-in and 3D Sound Shop PRIRs, I only rarely had the sense of sound coming from objects in front of me. Yes, out of my head, but not far out of my head. The most striking occurrence was dialog during a particular scene in a movie, where perception was clearly a strong function of context. OTOH, my own recorded PRIRs are very striking, to me, with the sounds of the individual speakers well located in their usual positions; for example, the center channel is clearly emitted 6 feet in front of me. Even without a head tracker, I also perceive natural movement of the positions of my side and rear speakers when I move my head. Perhaps some of the effect of my own PRIR is familiarity with the usual sounds and reverberations. However, when I transported my A16 100 miles to a different city and demonstrated it to my brother, he also had a very strong sense of out-of-head localization on my PRIR; his head and ear shape, and brain physiology, are of course closely linked genetically to mine.

Human sensory perception is amazing! In the visual arena, I highly recommend looking up the Retinex theory of Edwin Land (the Polaroid inventor). Color perception is greatly influenced by context, but brain physiology is also involved. I've twice experienced the perception of muted colors in demonstrations of Land's Retinex effect, seeing correct blues and greens when the image was formed only by a red and a grey filter: once in person at a lecture by Land, and a second time in a psychology class. People with some forms of color blindness are supposed to be able to perceive colors new to them in that demo.

Spatial perception of sound depends on very subtle timing cues: differences from as little as about 10 microseconds up to several hundred microseconds between when sound from your speakers reaches your left vs. right ear (and of course reflections and resonances within the shape of your ears and ear canals add more information). Neurons fire quite slowly, however (no faster than about 1 kHz, IIRC), signal propagation within the nerves is less than about 1/3 the speed of sound, and measured neuron signal latencies are on the order of a millisecond. The "wiring" and signal processing necessary for us to perceive spatial sound and vision are quite remarkable.
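To put numbers on those interaural timing differences, here is a rough sketch using Woodworth's spherical-head approximation, ITD = (a / c)(θ + sin θ). This is a standard textbook model, not something from the post, and the head radius (8.75 cm) and speed of sound (343 m/s) are assumed typical values; real heads and ears vary.

```python
import math

# Assumed constants (not from the post): a typical adult head radius and
# the speed of sound in air at roughly 20 degrees C.
HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0


def woodworth_itd_seconds(azimuth_deg: float,
                          radius_m: float = HEAD_RADIUS_M,
                          c: float = SPEED_OF_SOUND_M_S) -> float:
    """Interaural time difference for a distant source (spherical-head model).

    Valid for azimuths from 0 (straight ahead) to 90 degrees (fully to one
    side): ITD = (a / c) * (theta + sin(theta)).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (radius_m / c) * (theta + math.sin(theta))


if __name__ == "__main__":
    for az in (0, 30, 90):
        print(f"{az:3d} deg -> {woodworth_itd_seconds(az) * 1e6:6.1f} us")
```

Under these assumptions a front speaker at 30 degrees gives roughly 260 microseconds and a source fully to one side roughly 650 microseconds, so the ear-to-ear differences the brain resolves are far shorter than a single neuron's firing interval.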

"When the eyes move, the eardrums move too" is also explained, in other words, in the Realiser A16 User Manual, page 9:

One problem with headphone reproduction is that the sound stage rotates with the listener's head, causing significant aural confusion and loss of spatialisation. A solution to this rotation problem is the use of head tracking technology. The head tracker tracks the listener's head movement, adjusting the virtual speaker processing such that the virtual speakers move in the opposite direction to the listener’s head. This results in a very stable and natural listening experience causing the headphones to effectively disappear.

Perhaps, after reading both the article and the manual, those who are still not convinced that the head tracker is useful will pay more attention to it from now on.
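The manual's description boils down to counter-rotating the virtual speakers by the head's rotation. A minimal sketch, assuming yaw only and a hypothetical angle convention (0 = straight ahead, positive = to the right); `rendered_azimuth` is an illustrative name, not a Smyth Research API.

```python
def wrap_degrees(angle: float) -> float:
    """Wrap an angle into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def rendered_azimuth(speaker_az_deg: float, head_yaw_deg: float,
                     tracking: bool = True) -> float:
    """Angle, relative to the listener's nose, at which to render a speaker.

    speaker_az_deg: where the virtual speaker sits in the room (hypothetical
                    convention: 0 = straight ahead, positive = right).
    head_yaw_deg:   how far the head has turned, same convention.
    With tracking, the head yaw is subtracted so the speaker stays fixed in
    the room; without tracking, the whole sound stage turns with the head.
    """
    if not tracking:
        return wrap_degrees(speaker_az_deg)
    return wrap_degrees(speaker_az_deg - head_yaw_deg)


if __name__ == "__main__":
    for yaw in (0, 30, 180):
        print(yaw, rendered_azimuth(0, yaw), rendered_azimuth(0, yaw, tracking=False))
```

With tracking, turning 30 degrees to the right renders the center speaker at -30 (to the left), and a 180-degree turn puts it at -180, directly behind; without tracking the center stays at 0, in front of the nose, no matter how the head turns.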

castleofargh

Sound Science Forum Moderator

> Neurons fire quite slowly, however - no faster than up to 1 KHz IIRC - and signal propagation within the nerves is less than about 1/3rd the speed of sound plus measured neuron signal latencies are on the order of a millisecond.

That system does not rely on the speed of our neurons, but on the different distances the signals have to travel.

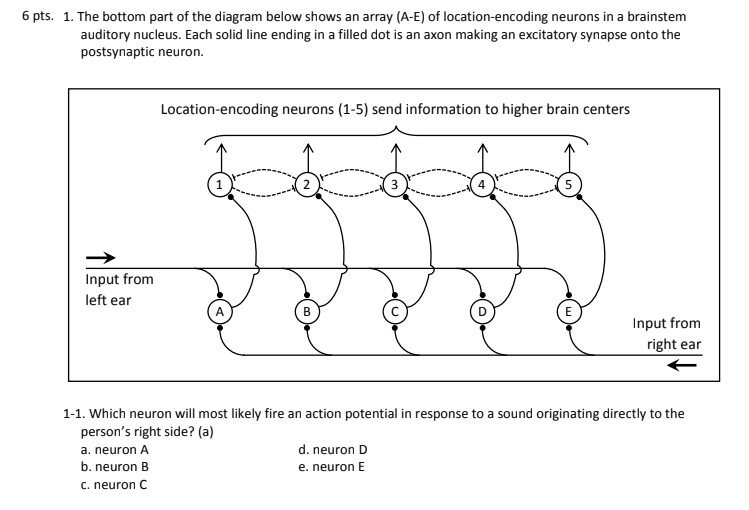

The accepted model is like this one:

The neurons A to E only reach an action potential if they get an electrical signal from both inputs at about the same time, and it's simply the travel time that determines which one is most likely to "fire". Obviously a lot is left out here, like which sound cue sends a signal to those neurons and how they differentiate between the same sound and some other sound arriving just before it. But hopefully the brain does something clever and the principle still holds (it's usually not aliens and not magic; beyond that, IDK ^_^).
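The delay-line-plus-coincidence-detector arrangement described above is usually called the Jeffress model. Here is a toy version under stated assumptions: five detectors named A to E, an arbitrary 800 µs of total path delay along the line, one spike per ear, and no noise. Real auditory neurons are far messier, as the post says.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Detector:
    """One coincidence-detector neuron fed by both ears (toy model)."""
    name: str
    delay_from_left_us: float   # travel time of the left-ear signal to this neuron
    delay_from_right_us: float  # travel time of the right-ear signal to this neuron


def make_delay_line(names: str = "ABCDE", total_us: float = 800.0) -> list[Detector]:
    """Space detectors evenly along a line fed from both ends.

    The detector at the left end gets the left-ear signal with no extra delay
    and the right-ear signal with the full delay, and vice versa; for every
    detector the two delays add up to total_us.
    """
    n = len(names)
    return [
        Detector(name, total_us * i / (n - 1), total_us * (n - 1 - i) / (n - 1))
        for i, name in enumerate(names)
    ]


def best_coincidence(itd_us: float, detectors: list[Detector]) -> Detector:
    """Return the detector whose two inputs arrive most nearly together.

    A positive itd_us means the sound reached the left ear first, so the
    right-ear signal starts itd_us microseconds later.
    """
    def mismatch(d: Detector) -> float:
        left_arrival = d.delay_from_left_us
        right_arrival = itd_us + d.delay_from_right_us
        return abs(left_arrival - right_arrival)

    return min(detectors, key=mismatch)


if __name__ == "__main__":
    line = make_delay_line()
    for itd in (-400.0, 0.0, 400.0):
        print(f"ITD {itd:+.0f} us -> neuron {best_coincidence(itd, line).name} fires")
```

A sound from straight ahead (ITD 0) makes C fire; a sound that reaches the left ear 400 µs early makes D fire, because only there does the longer path from the left cancel its head start. Direction is encoded by *where* the signals meet, not by how fast neurons are.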

Sanctuary

100+ Head-Fier

> Perhaps reading both the article and manual, those who are still not convinced that the head-tracker is still useful, will pay more attention to it from now on.

I don't use it at all, and have no problems with positional accuracy when sitting in a normal position. I also regularly have a guest over who uses their own PRIR and also doesn't need head tracking for it to sound accurate. I'm not suggesting it's "useless" or anything like that, though. Some people need it, some don't.

What is described in the manual is also not exactly the same thing as what is discussed in the articles, even if the two are somewhat related. The manual is simply talking about the illusion being broken when you move your head, because you know where the sound should be coming from based on the position of your ears relative to where real speakers would be. If you rotate your head, the virtual room does not react the way you expect (or remember) actual speakers reacting. That's not the same thing as "when your eyes move, your eardrums move".

It's like saying you need head tracking for real speakers too.

sander99

Headphoneus Supremus

For anyone considering buying Virtuoso, I received this e-mail:

VIRTUOSO Early Access sale ($99) will continue until 31 Dec, but we decided to offer further discount for Black Friday ($79) until 26 Nov. All early access customers will get free upgrade for all future versions of VIRTUOSO and also receive a free license for our upcoming plugin DUOPAN.

Thanks for spreading good news

gimlet

Head-Fier

> Thanks for spreading good news

The updated Virtuoso User Manual now lists the 11 near-field monitor loudspeakers available for emulation as well as the different room options. Amazing value for $79 - this is now my preferred headphone processor when I haven't got access to my A16. Although it doesn't approach the fidelity of the A16 with good open headphones like the Sennheiser HD800, it does have much more flexibility, a far better GUI and manual, and even provides a convincing surround speaker emulation on my Shure 1200 electrostatic earbuds (better than the A16). Quote from the User Manual below:

"SPK EQs emulate the complex and unique frequency response of the loudspeakers. Therefore, they allow you to choose virtual loudspeakers with different spectral balances for your monitoring purposes but also can be used for adding a unique tonal colour to your native binaural mix. More headphones and loudspeaker EQs will be added in future updates.

(A) ATC SCM11 (B) ATC SCM7 (C) Barefoot MM45 (D) B&W 705 S2 (E) Dutch and Dutch 8C (F) Genelec 8331A (G) Harbeth M30.2 XD (H) Neumann KH120A (I) PMC6 (J) Q Acoustics 3030i (K) Yamaha HS5

Note: The frequency response of a loudspeaker depends on the frequency and measurement position and most near-field loudspeakers have limited low-end response. The SPK EQs are designed to emulate only the on-axis response above around 250Hz, thus maintaining the original low-end extension of the default (None) setting."
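The manual only says the SPK EQs target the on-axis response above roughly 250 Hz; it does not say how. Purely as an illustration, and not Virtuoso's actual implementation, one way to get that behaviour is to fade a speaker EQ curve in on a log-frequency axis around the cutoff. The logistic blend, its steepness of 8 per octave, and the function names are all assumptions.

```python
import math


def blend_weight(freq_hz: float, cutoff_hz: float = 250.0, steepness: float = 8.0) -> float:
    """Logistic weight on a log-frequency axis.

    Returns about 0 well below cutoff_hz, exactly 0.5 at cutoff_hz and
    about 1 well above it. steepness is in units of 1/octave (an assumption).
    """
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    octaves_from_cutoff = math.log2(freq_hz / cutoff_hz)
    return 1.0 / (1.0 + math.exp(-steepness * octaves_from_cutoff))


def eq_above_cutoff(freqs_hz: list[float], spk_eq_db: list[float],
                    cutoff_hz: float = 250.0, steepness: float = 8.0) -> list[float]:
    """Fade a loudspeaker EQ curve (gains in dB) in above the cutoff.

    Below the cutoff the gain tends to 0 dB, leaving the low end of the
    unequalized (None) rendering untouched.
    """
    if len(freqs_hz) != len(spk_eq_db):
        raise ValueError("need one gain per frequency")
    return [blend_weight(f, cutoff_hz, steepness) * g
            for f, g in zip(freqs_hz, spk_eq_db)]


if __name__ == "__main__":
    print(eq_above_cutoff([50.0, 250.0, 2000.0], [-6.0, -6.0, -6.0]))
```

A flat -6 dB curve comes out as essentially 0 dB at 50 Hz, -3 dB at the 250 Hz cutoff and essentially -6 dB at 2 kHz; a gentler steepness would spread the transition over more octaves.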

zdjh22

Head-Fier

On the recent subject of ear movement and sound localization, I would like to express my jealousy towards my greyhounds, who can independently move and reshape each ear in order to better localize sound. Although they might like my A16, none of my earphones, whether over-the-ear or IEM, fit!

Just to prove that the Richard Skipworth drawing above doesn’t exaggerate, here are two more examples.

Given the new advances in science and biotechnology, maybe in a few thousand years people of the future will be able to independently move and reshape each ear to better localize sound.

audiohobbit

500+ Head-Fier

A Realiser for those dogs would be a challenge. They would need not only head tracking, but also individual ear tracking (horizontally and vertically)...

sander99

Headphoneus Supremus

And ear-shape tracking....

A PRIR measurement would be a nightmare: 1000000000 combinations of head-position, left and right ear-position and left and right ear-shape would have to be measured.
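The joke's arithmetic holds up, because independent settings multiply. Treating each item in that list (head position, left and right ear position, left and right ear shape) as one dimension and assuming a hypothetical 63 steps per dimension, the count lands right around a billion.

```python
def measurement_count(steps_per_dimension: int, dimensions: int) -> int:
    """Measurements needed to capture every combination of independent settings."""
    return steps_per_dimension ** dimensions


if __name__ == "__main__":
    # Hypothetical: 5 dimensions (head, 2 ear positions, 2 ear shapes), 63 steps each.
    print(measurement_count(63, 5))  # prints 992436543
```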
