This. Audio data within the PC environment is expected to be 32bit, even 16/24 bit data is encapsulated within 32bit frames so it would make no sense to truncate to 16 or even 24bit as it would just be padded with zeros again.

Ok.

If it is really so, then it is indeed unnecessary for the user to convert 16 bit audio to a higher bit rate, because not only it will be done automatically anyway, but it will be kept at a higher bitrate until the very output. (But it's still advisable to set up the Foobar's output setting to 24bit or 32 bit, depending on how much your DAC can accept, because now, at the end of a processing chain, these lower bits - from 16 to 24, contain useful information. They are not zeros anymore.)

But here we need to come back to the issue of upsampling (changing the frequency).

As I am still convinced that it's beneficial to upsample the frequency, let's say, from 44 to 88 or 176, prior to feeding the audio date to a processing chain of plugins, we

anyway end up with not only a higher sample rate,

but also a higher bit rate, because, as it was shown above, the audio data at the end of the upsampler will be 176/32, not 176/16.

Aleksey Vaneev (the author of

Voxengo VST plugins):

"Almost all types of audio processes benefit from an oversampling: probably, only gain adjustment, panning and convolution plug-ins have no real use for it. An oversampling helps plug-ins to create more precise filters with minimized warping at highest frequencies, to reduce aliasing artifacts in compressors and saturators, to improve a level detection precision in peak compressors." (quoting from

here)

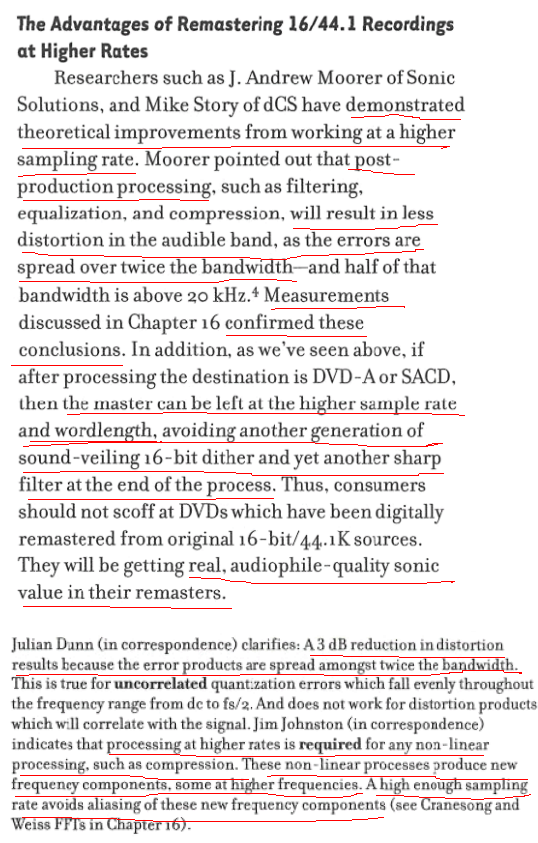

The quote from Bob Katz' book: "The Art & The Science of Mastering":

Also, consider this argument:

plugins oversample (optionally or automatically).

DAСs

also oversample or upsample the signal.

https://en.wikipedia.org/wiki/Oversampling:

"Oversampling improves resolution, reduces noise and helps avoid aliasing and phase distortion by relaxing anti-aliasing filter performance requirements."

So, since plugins/DACs upsample/oversample anyway,

why don't we help them do this work (fully or, at least, partially) by upsampling the signal first ourselves with the high-quality upsampler such as dbPoweramp/SSRC available in Foobar? I don't think that the quality of oversampling/upsampling inside plugins is superior compared to a dedicated upsampler. I am sure dBpoweramp/SSRC can increase the sample rate with a better result -

measurements prove that it's the best compared to similar upsamplers.

So, our signal, while starting its way from modest 44/16, even without an upsampler at Foobar,

at any case, undergoes these changes on its way:

Step A (audio file): 44/16

Step B (VST plugins - let's assume they oversample everything to 176): 176/32

Step C (at the Foobar output limited by the DAC driver, and at the DAC input): 44/24

Step D (inside the DAC - let's assume it oversamples all incoming signals to 352): 352/32

So, if our signal finishes its way being 352/32 in the DAC, why would it not be beneficial to insert our highest-quality upsampler in between steps A and B to upsample the signal first to 88 or 176?

Please note that there is downsampling happening from step B to step C (if we don't upsample before the VST chain).

But if we upsample before our VST chain, then step C looks like this:

Step C (foobar output, limited by the DAC driver, and at the DAC input): 176/24

If we don't upsample, then the VST plugin has to do the full job, i.e. oversample 4X.

If we upsample to 88, then the VST plugin has to do only half of its job, i.e. oversample only 2X.

If we upsample to 176, then the VST plugin does not have to oversample at all.

What's wrong with my logic?

. Keep the suggestions coming. Thanks again.

. Keep the suggestions coming. Thanks again.