This is going to be a wildly speculative post, but since nobody has ventured an explanation, I will try.

Consider the following two descriptions, the first of crosstalk cancellation with speakers and the second of externalization with headphones:

13 Is the 3D realism of BACCH™ 3D Sound the same with all types of stereo recordings?

(...)

All other stereophonic recordings fall on a spectrum ranging from recordings that highly preserve natural ILD and ITD cues (these include most well-made recordings of “acoustic music” such as most classical and jazz music recordings) to recordings that contain artificially constructed sounds with extreme and unnatural ILD and ITD cues (such as the pan-potted sounds on recordings from the early days of stereo). For stereo recordings that are at or near the first end of this spectrum, BACCH™ 3D Sound offers the same uncanny 3D realism as for binaural recordings.

At the other end of the spectrum, the sound image would be an artificial one and the presence of extreme ILD and ITD values would, not surprisingly, lead to often spectacular sound images perceived to be located in extreme right or left stage, very near the ears of the listener or even sometimes inside of his head (whereas with standard stereo the same extreme recording would yield a mostly flat image restricted to a portion of the vertical plane between the two loudspeakers).

(...)

By the way, I recently created a PRIR for stereo sources that simulates perfect crosstalk cancelation. To create it, I measured just the center speaker, and fed both the left and right channel to that speaker, but the left ear only hears the left channel because I muted the mic for the right ear when it played the sweep tones for the left channel, and the right ear only hears the right channel because I muted the mic for the left ear when it played the sweep tones for the right channel. The result is a 180-degree sound field, (...).

Binaural recordings sound amazing with this PRIR and head tracking.

Using the first PRIR, central sounds seem to be in front of you, and they move properly as you turn your head. However, far-left and far-right sounds stay about where they were. That is, they sound about the same as they did without a PRIR, and they don't move as you turn your head. In other words, far-left sounds stay stuck to your left ear, and far-right sounds stay stuck to your right ear. It's possible to shift the far-left and far-right sounds towards the front by using the Realiser's mix block, which can add a bit of the left signal to the front speaker for the right ear, and a bit of the right signal to the front speaker for the left ear.

(...).

Why is this happening?

In the second example, why are the hard-panned sounds stuck to the sides, and why do they not derotate with the head tracking?

First, let’s see how the convolution, interpolation and headtracking work.

A standard two-channel personalized room impulse response (PRIR) comprises 12 impulses:

1. looking center + left speaker playing + left ear measuring;
2. looking center + left speaker playing + right ear measuring;
3. looking center + right speaker playing + left ear measuring;
4. looking center + right speaker playing + right ear measuring;
5. looking left + left speaker playing + left ear measuring;
6. looking left + left speaker playing + right ear measuring;
7. looking left + right speaker playing + left ear measuring;
8. looking left + right speaker playing + right ear measuring;
9. looking right + left speaker playing + left ear measuring;
10. looking right + left speaker playing + right ear measuring;
11. looking right + right speaker playing + left ear measuring;
12. looking right + right speaker playing + right ear measuring.

Ipsilateral impulses (speaker and ear on the same side): 1, 4, 5, 8, 9, 12.

Contralateral impulses (speaker and ear on opposite sides): 2, 3, 6, 7, 10, 11.
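The numbering above can be reproduced with a short sketch. This is only an illustration of the bookkeeping, not how the Realiser stores a PRIR; the names `LOOK_ANGLES`, `SPEAKERS`, `EARS` and `enumerate_prir` are made up for this example.

```python
from itertools import product

# Hypothetical labels, chosen to follow the order of the list in the post.
LOOK_ANGLES = ["center", "left", "right"]
SPEAKERS = ["left", "right"]
EARS = ["left", "right"]


def enumerate_prir():
    """Return (index, look, speaker, ear, kind) for the 12 impulses."""
    entries = []
    combos = product(LOOK_ANGLES, SPEAKERS, EARS)
    for index, (look, speaker, ear) in enumerate(combos, start=1):
        # Same side for speaker and ear means ipsilateral.
        kind = "ipsilateral" if speaker == ear else "contralateral"
        entries.append((index, look, speaker, ear, kind))
    return entries


entries = enumerate_prir()
ipsilateral = [e[0] for e in entries if e[4] == "ipsilateral"]
contralateral = [e[0] for e in entries if e[4] == "contralateral"]

for entry in entries:
    print(entry)
print("ipsilateral:", ipsilateral)
print("contralateral:", contralateral)
```

The two index lists printed at the end match the ipsilateral and contralateral groups given above.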

If you have only three looking angles (i.e. -30, 0 and +30 degrees), how does the convolution engine set the frequency levels, arrival times and phase delays for any angle between -30 and 0 degrees, or between 0 and +30 degrees?

The frequency levels, arrival times and phase delays for any in-between angle are calculated by an interpolation algorithm.
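I do not know which interpolation algorithm the Realiser actually uses. As a rough sketch of the idea only, here is the simplest possible scheme: a linear blend of the two measured impulse responses that bracket the current head angle. The function name `interpolate_ir`, the sign convention (negative means looking left) and the toy impulse values are all assumptions for illustration. A real engine would likely treat arrival time separately, since blending raw samples smears delays.

```python
from bisect import bisect_right


def interpolate_ir(angle, measured_angles, measured_irs):
    """Linearly blend the two measured impulse responses around `angle`.

    measured_angles must be sorted; measured_irs[i] belongs to
    measured_angles[i] and all responses have the same length.
    """
    # Clamp to the measured range: nothing is known beyond +/-30 degrees.
    angle = max(measured_angles[0], min(measured_angles[-1], angle))
    # Find the measured angle just below (or equal to) the head angle.
    i = bisect_right(measured_angles, angle) - 1
    i = min(i, len(measured_angles) - 2)
    a0, a1 = measured_angles[i], measured_angles[i + 1]
    weight = (angle - a0) / (a1 - a0)
    return [
        (1.0 - weight) * x0 + weight * x1
        for x0, x1 in zip(measured_irs[i], measured_irs[i + 1])
    ]


# Toy two-sample "impulse responses" for the three looking angles.
angles = [-30.0, 0.0, 30.0]
irs = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]

halfway = interpolate_ir(-15.0, angles, irs)
print(halfway)
```

Halfway between two measured angles, the result is the average of the two measured responses; at a measured angle it is that measurement itself.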

Then head-tracking helps to externalize sounds.

Standard PRIR convolution, interpolation and head tracking make it possible to emulate, with headphones, how the measured room and speakers sound.

Since the speakers were located at +/-30 degrees and the contralateral impulses describe an important proportion of the acoustic crosstalk, the expression “how the measured room and speakers sound” above means a mostly flat image restricted to a portion of the vertical plane between the two virtual loudspeakers, even if the content has hard-panned sounds. The virtual speakers, and the hard-panned sounds within them, will derotate according to the head tracking.

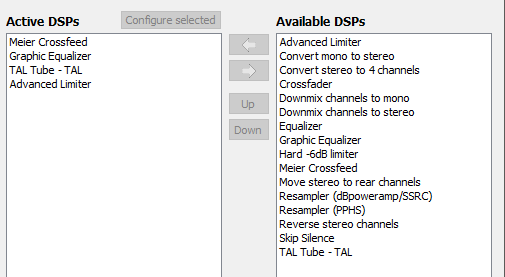

So if you are fond of headphone crossfeed that emulates what it is like to listen to standard stereo recordings the old-fashioned way (i.e. with two speakers in a room and corrupting acoustic crosstalk), just use a PRIR convolution without interpolation and head tracking.

So far, so good.

But Erik wanted to test with headphones how Professor Choueiri’s crosstalk cancellation would sound in his room.

What has he done?

Erik’s crossfeed-free PRIR also comprises 12 entries, but now both speakers are at 0 degrees and the contralateral entries (2, 3, 6, 7, 10, 11) are left blank.

Since only ipsilateral impulses are used in Erik’s crossfeed-free PRIR, the acoustic crosstalk information is lost and no crossfeed is added.

But there is another catch in Erik’s crossfeed free PRIR.

In standard PRIRs, speakers are measured at +/-30 degrees.

On the one hand, the ipsilateral impulses capture the frequency levels, arrival times and phase delays introduced by room reflections (the RIR component of his PRIR), by the filtering effect of his pinna and by the scattering effect of the ipsilateral side of his face (two of the three HRTF components of his PRIR), all varying according to the looking angle.

On the other hand, the contralateral impulses capture room reflections (the RIR component of his PRIR) and acoustic crosstalk (note that acoustic crosstalk is a mix of room reflections and a third HRTF component). This third HRTF component is the effect of his head shadowing directional frequencies.

In Erik’s PRIR, both speakers are measured at 0 degrees, with +/-30 degree looking angles.

On the one hand, the ipsilateral impulses capture the frequency levels, arrival times and phase delays introduced by all the room reflections (the RIR component of his PRIR), the filtering effect of his pinna, the scattering effect of the ipsilateral side of his face and the effect of his head shadowing directional frequencies, varying in degree according to the looking angle.

On the other hand, the contralateral impulses, which in standard PRIRs would capture the effects of room reflections and acoustic crosstalk, are left blank, as I said before.

As he describes, he gets externalization with binaural recordings.

That is because natural ILD and ITD are already in the content. In other words, the effect of the head shadowing directional frequencies (or, as I said earlier, the acoustic relationship between the two hemispheres separated by the sagittal plane) was preserved in the content.

If Erik uses his crossfeed-free PRIR to play back old stereo songs that contain hard-panned sounds, why don’t the hard-panned sounds derotate?

Hard-panned sounds don’t have ITD at all, because there is no time reference for them in the opposite channel. Any difference in time will simply be taken as the hard-panned instrument starting to play later (obviously this does not affect artistic coherence with other sounds, as ITDs are in the range of microseconds). They don’t have a natural ILD either, because their full level is on a single channel.

Since Erik’s PRIR has ipsilateral impulses measured with the speakers at 0 degrees that, as I said, capture the effect of his head shadowing directional frequencies, the convolution engine can introduce, at playback, his personal ILD and ITD to center sounds as he rotates his head. Center sounds are present in both channels, so both ipsilateral convolutions receive correlated input.

That happens because when he turns his head:

a) looking left (maximum looking angle is still 30 degrees), center sounds become more shadowed by the left ipsilateral impulses and at the same time less shadowed and more scattered by the right ipsilateral impulses;

b) looking right (maximum looking angle is still 30 degrees), center sounds become less shadowed and more scattered by the left ipsilateral impulses and at the same time more shadowed by the right ipsilateral impulses.

And why does that not work with hard-panned sounds? Why, in recordings with such strong stereo separation, are hard-panned sounds stuck to the side when using Erik’s crossfeed-free PRIR?

Because when he turns his head looking left (maximum looking angle is still 30 degrees), hard-panned sounds in the left channel become more shadowed by the left ipsilateral impulses, but absolutely nothing happens with those hard-panned sounds at the right headphone driver, since no correlated sounds are fed into the convolution of the right ipsilateral impulses.
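A toy sketch of that argument, under heavy assumptions: each ear signal is modeled as the sum of both channels convolved with the matching impulse, the contralateral impulses are blank, and the impulse values are invented numbers rather than measurements. The names `convolve` and `render` are hypothetical.

```python
def convolve(signal, impulse):
    """Plain discrete convolution of two lists of samples."""
    out = [0.0] * (len(signal) + len(impulse) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse):
            out[i + j] += s * h
    return out


def render(left, right, h_ll, h_lr, h_rl, h_rr):
    """Model the two ear signals; h_xy means channel x heard at ear y."""
    ear_l = [a + b for a, b in zip(convolve(left, h_ll), convolve(right, h_rl))]
    ear_r = [a + b for a, b in zip(convolve(left, h_lr), convolve(right, h_rr))]
    return ear_l, ear_r


BLANK = [0.0, 0.0]        # contralateral entries left blank
hard_left = [1.0, 0.5]    # sound present only in the left channel
silence = [0.0, 0.0]      # nothing in the right channel

# Invented ipsilateral impulses for two head angles.
ear_l_center, ear_r_center = render(
    hard_left, silence, [1.0, 0.3], BLANK, BLANK, [1.0, 0.3])
ear_l_turned, ear_r_turned = render(
    hard_left, silence, [0.6, 0.2], BLANK, BLANK, [1.2, 0.4])

print("left ear :", ear_l_center, "->", ear_l_turned)
print("right ear:", ear_r_center, "->", ear_r_turned)
```

The left-ear signal changes with the head angle, but the right-ear signal stays silent whatever the right ipsilateral impulse is, so turning the head creates no changing relationship between the two ears.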

Note that the absence of crossfeed seems to make reflections more perceptible (so better room acoustics would yield no echo?):

By the way, after making the PRIR, the "window" setting should be reduced to 200ms to prevent the right ear from hearing a faint echo of the left ear's signal, and vice versa.

So it is clear that stereo recordings with hard-panned sounds won’t yield a good spatial rendering with Erik’s PRIR.

What does Erik do to work around this problem?

The Realiser A8 mix block allows mixing the left channel into the right channel and vice versa in coarse steps (taking 0 as mute and 1 as full scale, the step is 0.1; I don’t know how to express that logarithmically in dBFS).
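For what it is worth, a linear amplitude ratio converts to decibels as 20·log10(ratio). The sketch below assumes the mix values are amplitude ratios, which is consistent with the "0.5 (-6 dB) or 0.1 (-20 dB)" figures quoted further down. Strictly speaking the result is in dB relative to the unmixed channel rather than dBFS. The function name `ratio_to_db` is made up for this example.

```python
import math


def ratio_to_db(ratio):
    """Convert a linear amplitude ratio to decibels (0 maps to -infinity)."""
    if ratio <= 0:
        return float("-inf")  # a ratio of 0 is mute
    return 20.0 * math.log10(ratio)


# The eleven mix settings from 0.0 (mute) to 1.0 (full scale).
steps = [i / 10 for i in range(11)]
table = [(ratio, ratio_to_db(ratio)) for ratio in steps]

for ratio, db in table:
    print(f"{ratio:.1f} -> {db:.1f} dB")
```

This shows how uneven the 0.1 steps are on a logarithmic scale: the first step jumps from mute straight to about -20 dB, while the last step (0.9 to 1.0) is less than 1 dB.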

If @Erik Garci mixes at least 0.1 of each channel into the other, then he is able “to shift the far-left and far-right sounds towards the front”.

Do the hard panned sounds also start to derotate according to the headtracking?

Yes.

Why?

Because, while still using only ipsilateral impulses, Erik now feeds some level of the left channel’s hard-panned sounds into the convolution of the right ipsilateral impulses.
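A simplified model of that mixing stage, assuming the mix block just adds a scaled copy of each channel to the other before convolution. The function `mix_block` is a hypothetical stand-in, not the Realiser's actual implementation.

```python
def mix_block(left, right, mix):
    """Add `mix` times each channel to the opposite channel."""
    mixed_left = [l + mix * r for l, r in zip(left, right)]
    mixed_right = [r + mix * l for l, r in zip(left, right)]
    return mixed_left, mixed_right


hard_left = [1.0, 0.5]   # sound present only in the left channel
silence = [0.0, 0.0]     # nothing in the right channel

_, right_without_mix = mix_block(hard_left, silence, 0.0)
_, right_with_mix = mix_block(hard_left, silence, 0.1)

print("right channel, mix 0.0:", right_without_mix)
print("right channel, mix 0.1:", right_with_mix)
```

With a mix of 0.1 the right channel now carries a quieter, correlated copy of the hard-panned sound, so the right ipsilateral impulses have something to act on as the head turns.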

Then why do the original far-left and far-right sounds shift directly towards the front, and not gradually, going from "stuck to the sides" to rotating as if they were outside the +/-30 degree looking angles?

Because the ITD and ILD are derived from, and are therefore limited by, the maximum time and level differences captured by the ipsilateral impulses at the left and right looking angles.

Could the 0.1 mix ratio be higher than his natural ILD?

Yes.

That’s why, as far as I understand, Erik said:

That would be much easier than manually muting the microphones during measurements, and just about any PRIR could be used.

Allowing fractional values would be even better, such as 0.5 (-6 dB) or 0.1 (-20 dB).

What if the Realiser could mix both channels in finer increments, such as 1 dB?

We are waiting for Smyth Research’s response.

Does anybody have an alternative explanation that is not expressed in programming code or equations?