audiohobbit

500+ Head-Fier

Merry christmas to all.

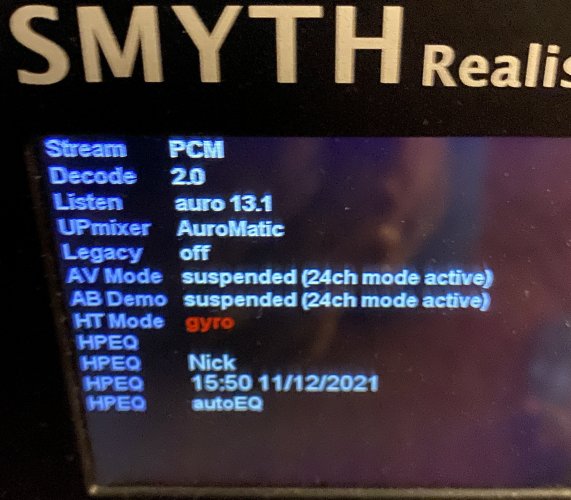

@dsperber: That's good news. When I tried magnetic stabilisation I wasn't satisfied. The soundstage constantly drifted to the left or to the right, and I had to recenter it every 10 minutes or so by pressing the button on the head top.

It could be that the head top (or both of mine) had already lost its gyro calibration back then without my knowing it, but I don't think so. When the calibration data is lost, the soundstage keeps rotating through 360 degrees, and that was not the case.

I'm a bit confused about the new HT firmware. I thought it would eliminate the need for recalibration in the fridge, but no: you still need to do it, only now the fridge isn't cold enough and it has to be the freezer?

When it comes to liquid nitrogen, I'm out...

Both my head tops keep losing this calibration data; I've already put them in the fridge several times. I've now updated the HT firmware on both, but one of them has lost its calibration data again. I'm a bit wary of putting it in the freezer... I'll try the fridge first.

On headphones: for over-ear headphones, where the mics can be used for auto HPEQ, the frequency response gets EQed, so basically all headphones should sound similar. But only above 500 Hz! Below 500 Hz the headphone's original frequency response is used, so its bass capabilities in particular will matter. Since there is now a bass (and treble) shelving filter that raises or lowers everything below an adjustable frequency, it can be used as a workaround for that.
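To illustrate what a bass shelf below an adjustable frequency does, here is a minimal sketch of a standard low-shelf biquad using the RBJ Audio EQ Cookbook formulas. This is only an assumption for illustration: I don't know how the Realiser actually implements its shelving filters, and the function names and the 100 Hz / +6 dB setting are hypothetical.

```python
import math
import cmath


def low_shelf_coeffs(fs, f0, gain_db, slope=1.0):
    """Low-shelf biquad coefficients (RBJ Audio EQ Cookbook formulas).

    Boosts (gain_db > 0) or cuts (gain_db < 0) everything below roughly f0;
    at f0 itself the gain is gain_db / 2. Returns (b, a) with a[0] == 1.
    """
    A = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / 2 * math.sqrt((A + 1 / A) * (1 / slope - 1) + 2)
    sqrt_a = math.sqrt(A)

    b0 = A * ((A + 1) - (A - 1) * cos_w0 + 2 * sqrt_a * alpha)
    b1 = 2 * A * ((A - 1) - (A + 1) * cos_w0)
    b2 = A * ((A + 1) - (A - 1) * cos_w0 - 2 * sqrt_a * alpha)
    a0 = (A + 1) + (A - 1) * cos_w0 + 2 * sqrt_a * alpha
    a1 = -2 * ((A - 1) + (A + 1) * cos_w0)
    a2 = (A + 1) + (A - 1) * cos_w0 - 2 * sqrt_a * alpha

    # Normalise so the leading denominator coefficient is 1.
    return [b0 / a0, b1 / a0, b2 / a0], [1.0, a1 / a0, a2 / a0]


def magnitude_db(b, a, f, fs):
    """Magnitude of the filter's frequency response at f, in dB."""
    z1 = cmath.exp(-1j * 2 * math.pi * f / fs)  # z^-1 on the unit circle
    num = b[0] + b[1] * z1 + b[2] * z1 ** 2
    den = a[0] + a[1] * z1 + a[2] * z1 ** 2
    return 20 * math.log10(abs(num / den))


def process(b, a, samples):
    """Filter a list of samples with the biquad (Direct Form I)."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


if __name__ == "__main__":
    fs = 48000
    # Hypothetical setting: +6 dB bass shelf with its midpoint at 100 Hz.
    b, a = low_shelf_coeffs(fs, 100, 6.0)
    for f in (20, 100, 500, 1000, 10000):
        print(f"{f:>6} Hz: {magnitude_db(b, a, f, fs):+.2f} dB")
```

The printout shows the idea: the boost is full well below the shelf frequency, half at the shelf frequency, and fades to 0 dB above it, so the range the auto HPEQ already handles (above 500 Hz) is essentially left alone.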

I'm pretty sure there will still be slight differences in tonality even after HPEQ, though. So one could use the manSPKR process to compare directly against the speakers and EQ manually.

I think localisation of the virtual speakers will be similar, and very good, with all auto-HPEQed over-ear headphones. A headphone's perceived "natural" soundstage won't play a role anymore, I'd say (personally, no headphone has ever had any kind of soundstage for me; it's always all in my head, and I don't like it).

If a headphone gives you some kind of natural soundstage with a bit of externalisation, that's because it coincidentally matches your HRTF better than another headphone does.

For a 100% HRTF match we use the Realiser, though...