chesebert

18 Years An Extra-Hardcore Head-Fi'er

- Joined: May 17, 2004
- Posts: 9,330
- Likes: 4,691

Didn’t know people actually bought ARC cards. What led you to that purchase decision?

Probably went around and around on it.

Novelty. lol. I already have a 3080ti for proper gaming, but the A770 is pretty interesting to toy around with. Sometimes you can squeeze some pretty great performance out of it, and sometimes it's a trainwreck.

Cool. We all need an adult toy to play with.

Can someone explain to me why I still can’t buy any 4090 now that mining is over? What am I missing?

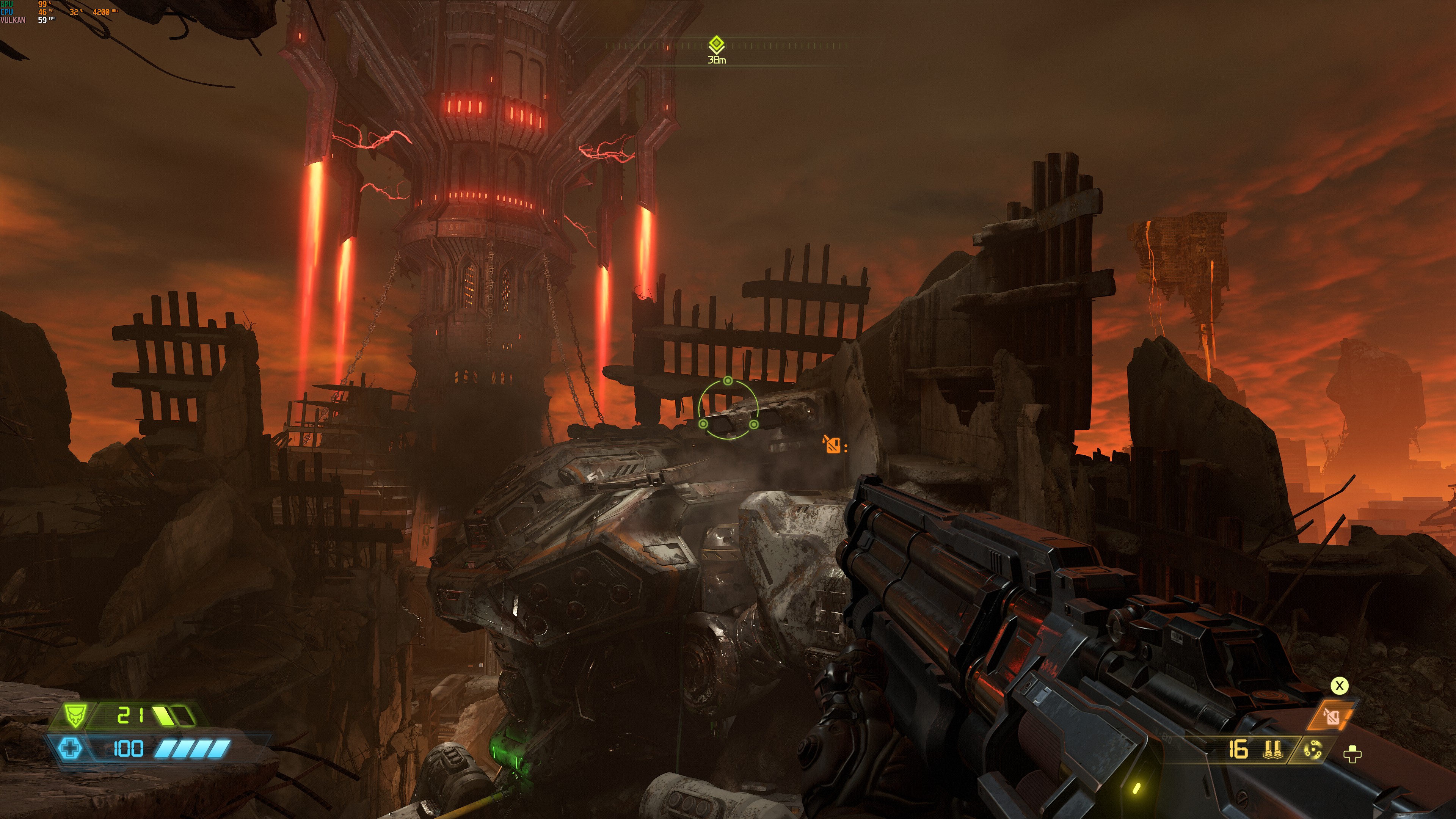

Raja’s fine wine - here we go againMan, Doom Eternal is another game that highlights just how great the hardware is in the A770.

Ultra Nightmare preset, ray tracing, no upscaling to 4k:

It does drop into the high 40s/low 50s sometimes, but if this game supported XeSS/FSR that would more than clear that up.

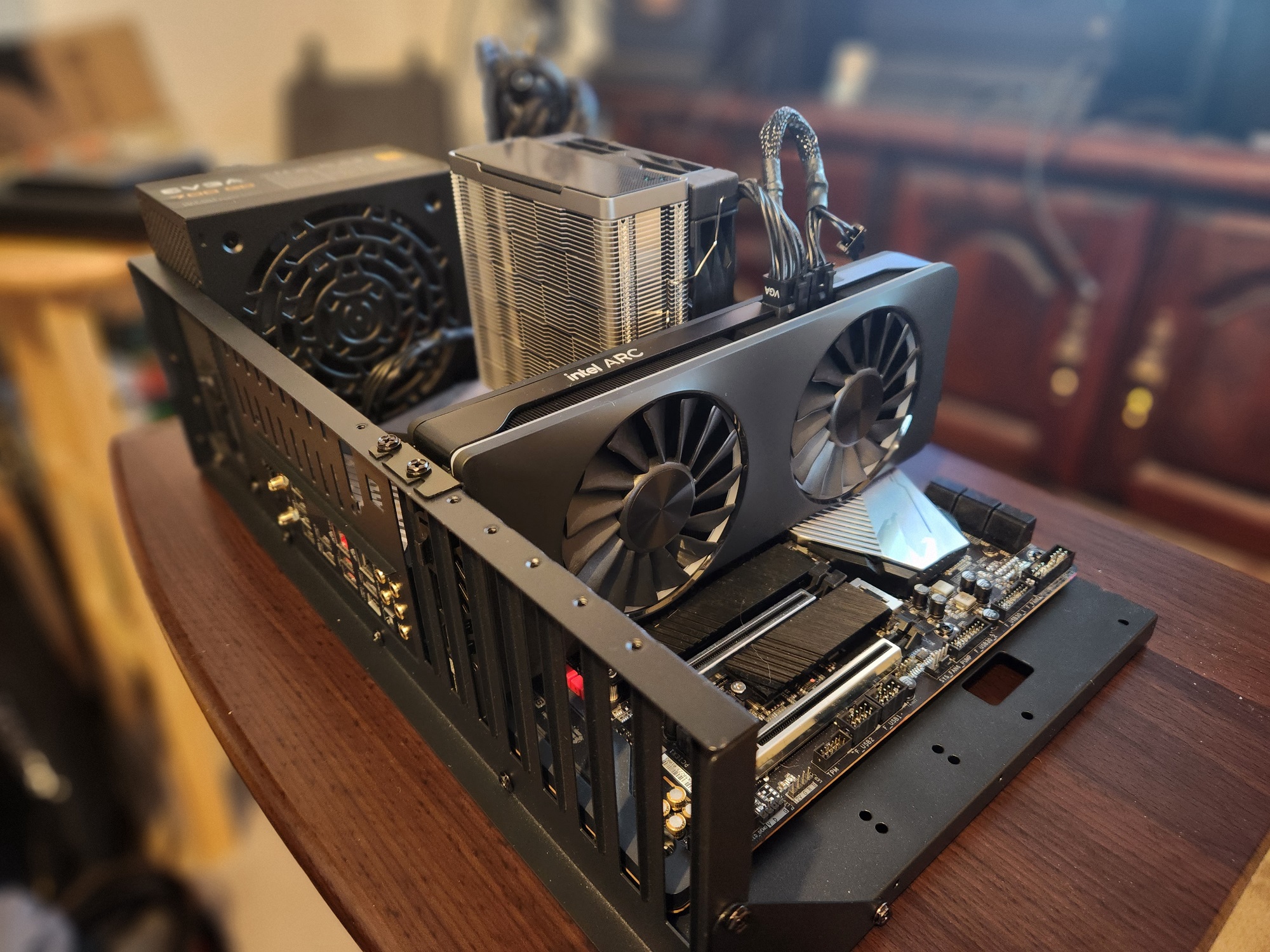

The "case" I got. It just works.

I still can't get over how weird it is to have an AMD CPU with an Intel GPU.

Honestly, interesting times in the computing world. I've personally used a mostly pure AMD/ATi setup as my main gaming system since the Athlon 64 days, with one exception where I had an i7 3770k until I went with the Ryzen 1800X. I'm still using that same X370 motherboard from then with my 5800X3D. I've had a few Nvidia GPUs, such as the GTX 280 and more recently the GTX 1080 Ti, on my secondary rigs, but never on my main one. Going to wait a few years for another CPU upgrade where I finally retire the motherboard; definitely going for another X3D chip, they're so nice for gaming. Curious how the Intel GPUs turn out in the long run. The trend toward power-hungry CPUs and GPUs this new generation does concern me some, though maybe they will rein it in some in future generations.

Man the first post in this thread...

AMD Athlon 64 X2 6000+

Galaxy GeForce 8800 GT

2gb PC6400 RAM

X-Fi Xtreme Music

two 320gb seagate SATA drives

How things change.

I think I've had a 50/50 split of AMD/ATI and Nvidia GPUs, but I've almost exclusively used AMD CPUs. First rig I ever built for myself had an Athlon X2 4200+ with an ATI Radeon X1300XT; I thought that thing was the coolest thing ever. Hah.

I've had the odd Intel Pentium 3 and Celeron back before I built my computers, but the only computer I ever built with an Intel chip was my 4690k (which I later upgraded to a 4790k) rig. Even at the time I remember the Bulldozer-based AMD chips being hot garbage, so I skipped AMD for that gen.

I remember having the ATi Radeon X1950 years ago; I don't recall which Athlon I had back then, though. But that was a shared computer. My first computer of my own had a Phenom II X4 940 Black Edition CPU with an ATi Radeon 4870. Yeah, I skipped the Bulldozer CPUs myself.

Yeah, it's a trip going into the early pages of the thread. Aesthetics in particular have changed a lot!