To be able to control what filters to use etc. It's amazing to be able to try out different things and have full control of the chain. May + HQP has taught me a lot.

What about low-level signal accuracy?

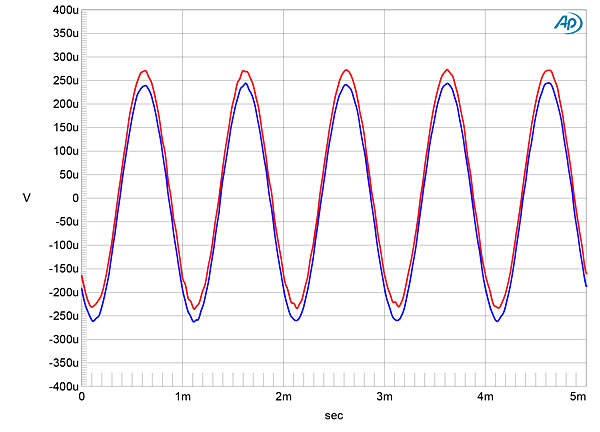

Fig.13 HoloAudio May, waveform of undithered 1kHz sinewave at –90.31dBFS, 16-bit data (left channel blue, right red).

Fig.14 HoloAudio May, waveform of undithered 1kHz sinewave at –90.31dBFS, 24-bit data (left channel blue, right red).

Even if we didn't go to -90dBFS, this still applies. The more levels we have available, the more accurately we can represent the wave. Note that there won't be any further reconstruction so these bits represent the final samples as is.
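To put numbers on the "more levels" point, here is a minimal sketch that quantizes the same undithered 1kHz sine at -90.31dBFS to 16 and 24 bits and counts the distinct sample codes. The 44.1kHz sample rate, the helper name `quantize_sine`, and plain rounding to the nearest code are assumptions made for illustration; this is not how the measurements in the figures were produced.

```python
import math

def quantize_sine(level_dbfs, bits, freq_hz=1000.0, rate_hz=44100, n_samples=441):
    """Return undithered integer sample codes for a sine at level_dbfs.

    Hypothetical helper: rate_hz and n_samples are assumed values
    (441 samples is exactly 10 cycles of 1kHz at 44.1kHz).
    """
    full_scale = 2 ** (bits - 1)                      # codes per unit amplitude
    amplitude = 10 ** (level_dbfs / 20) * full_scale  # peak amplitude in codes
    return [
        round(amplitude * math.sin(2 * math.pi * freq_hz * n / rate_hz))
        for n in range(n_samples)
    ]

levels_16 = sorted(set(quantize_sine(-90.31, 16)))
levels_24 = sorted(set(quantize_sine(-90.31, 24)))

print(levels_16)       # distinct codes used by the 16-bit version
print(len(levels_24))  # number of distinct codes used by the 24-bit version
```

At 16 bits the peak of this sine is almost exactly one LSB, so only the codes -1, 0 and +1 are ever used. The 24-bit version has a step 256 times finer, so the same wave is spread over a few hundred codes.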

The problem with the Sound Science forum is that the attitude there is arrogant and passive-aggressive. No one here has any "agenda"; we are merely trying to understand what we hear and go further. My experience with the Sound Science forum has been very negative, as the general attitude isn't helpful. The ending of your comment signals the very attitude I'm talking about. You are not trying to help; you are attacking.

Your discussion about bit depth also isn't starting with a good grounding when you say things like:

"So, what does 20bit or 24bit get you except ever quieter levels of noise? 24bit is useful when recording because it allows a huge amount of headroom but for playback there is literally nothing (other than noise) to be gained.".

I stated earlier that I can hear a clear difference between the two. It's trivial to test those. There are also others saying the same thing. IMO it's arrogant to just sweep that under the rug and talk about how in theory it's not audible. Every theory has its assumptions, scope and limits. To me the right scientific attitude would be to either study why these people hear these things or else leave it to others who are interested. I bet that if we made all the assumptions explicit, we would find out that we have been comparing apples and oranges. As an example: an R2R DAC converts this PCM directly into analog, while a delta-sigma DAC will further process it into 1 bit. We can't directly compare the situation to official or unofficial studies where a delta-sigma DAC has been used.

Yes, it's not possible to study and prove everything that people claim to hear, but assuming that everyone else is delusional, stupid or selling something isn't constructive. We all know the argument about a fly next to a jet plane, yet we can still tell the difference between, for example, 20 and 24 bits in these specific circumstances. I started this thread because I couldn't explain that with my knowledge of our current theories. People discussing in these threads are well aware of the brain's ability to imagine things. We are not splitting hairs, but talking about clear differences.

Hearing differences between 20 and 24bit is the same kind of claim, contradicting the facts, as the one we got from Rob Watts and his supposedly always-audible impact of -250dB noise shaping.

The instantaneous dynamic range of the ear is about 60 or 70dB (maybe someone with a failing acoustic reflex could get over that, but it would also have caused serious damage to their hearing over the years, so that's not our answer).

We sure can hear sounds of lower intensity than some louder ones, and even within ambient noise, we can perceive quieter sound cues up to a point. But human hearing, even with its bag of tricks, still follows the well-accepted model of auditory masking, where a loud 500Hz tone might not completely mask a nearly as loud 550Hz tone, but it will mask (as in, we don't notice it's there) a quiet 550Hz tone (and the same goes for temporal masking). We encounter a fairly broad range of noises from our environment, even in subjectively silent rooms in our house. A quiet recording studio is usually said to have noise in the 20 to 30dB SPL range; a house is usually worse.

As gregorio said, in an anechoic chamber with kids, we'll sometimes get perception of sound below 0dB SPL (not much below, but a handful of dB is expected). On the other hand, in a normal listening setting at home, and not being a kid anymore, what are the odds of us picking up stuff at or below 0dB SPL?

We have all the well-established, many-times-verified data for what to expect on average. Those are the facts we know and trust, because they've been tested rigorously and replicated (it's literally what made us know that 0dB was not in fact the lower limit for humans, even though that's the definition it was initially given). Now imagine what conditions you'd need to have a chance of hearing changes in the music below 20bit (so about 20*6=120dB below peaks). Will those be above 0dB SPL? Let's say we want those audible differences at 10dB SPL, in a most ideal scenario and with a fair amount of optimism for someone still young. That means listening to music with peaks possibly reaching 10+120dB, plus whatever extra dB it takes to get down to where the signal is imagined to change in your hypothesis (somewhere between 1 and 4 bits under 20). So we need peaks at least above 130dB SPL. Do you listen to music that way? Doesn't seem like a safe habit.
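The arithmetic above can be written out as a small sketch. The function name `required_peak_spl` is made up for illustration, and it uses the exact 20*log10(2), about 6.02dB per bit, instead of the rounded 6dB, so the result lands slightly above the 130dB figure.

```python
import math

DB_PER_BIT = 20 * math.log10(2)  # about 6.02 dB per bit (the "6dB per bit" rule of thumb)

def required_peak_spl(bits_below_peak, target_spl_db):
    """Peak SPL needed so that content `bits_below_peak` bits under
    full scale is reproduced at `target_spl_db`.

    Hypothetical helper; ignores masking, room noise and gear limits.
    """
    return target_spl_db + bits_below_peak * DB_PER_BIT

# Content at the 20-bit level, wanted at 10dB SPL.
print(round(required_peak_spl(20, 10), 1))
# Content at the 24-bit level, wanted at 10dB SPL.
print(round(required_peak_spl(24, 10), 1))
```

For 20 bits this comes out a little over 130dB SPL, and for 24 bits a little over 154dB SPL, before even considering masking or the noise floor of the room.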

Let's say you have that situation at home. We still have a few facts that challenge the possibility of hearing anything that actually is below 20bits:

The instantaneous dynamic range of your ears is the most obvious. You'd need an extremely quiet passage, and a long enough one, to even stand a chance. Do you notice the difference only at the beginning and end of tracks, during silent passages? If not, we seem to have a direct conflict with the idea that you have normal human ears. It's more likely that the hypothesis is wrong.

Another obvious issue is the recorded material. What kind of signal are we going to find 20bit down and below? Noises, noises, noises, so very many noises. Even if your hypothesis is correct, it would be a matter of hearing changes in the background noises. Hard to get motivated about that, even if you're entirely right.

Then there is the simple notion that you need a playback system that can handle that kind of dynamic range and resolution. No DAC actually manages 24bit, and then the amp needs to not create any distortion loud enough to mask content below 20bit. Same for the headphones or speakers. One more necessary condition that doesn't seem realistic.

All in all, the facts disagree with your idea that what you're noticing really are differences below 20bits in the music/signal.

And I come to the same conclusion, for the same reasons, about all the weird ideas Watts brought up about hearing below what humans are expected to hear: the facts, some of them very well researched, disagree with the possibility, meaning the idea is wrong.

Now, of course, this does not mean Rob never heard anything, or that you never heard anything changing. It's one clear possibility that you didn't, and you probably should try to test for it (to prove to yourself that you're indeed hearing something instead of feeling like you do for reason X or Y, or because some visual cues are tricking the brain). But there is another possibility that's just as likely: the correlation you draw between the change you are hearing and the signal changing below 20bits is the wrong one. Pretty much everything we know about human hearing, music albums, ambient noises, and playback gear is screaming at us that if you're hearing something, it must be a good deal louder than 120dB below peak.

So I think a good start would be to measure the 2 scenarios allegedly making audible changes, and confirm how loud the differences actually are in your house with your gear. Obviously you're not going to measure anything near or below 0dB SPL out of a headphone in your room(other than more noises unrelated to our concerns), but the output of the DAC and that of the amp might already give us the information we're looking for.

And if no difference were found in the signal above the 20bit level, then you'd start to have a more serious case for saying you're actually hearing something that low (if you also test for biases).
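One way to read "confirm how loud the differences actually are" is a simple null test: subtract one capture from the other and express the residual in dB relative to the peak. The sketch below is only an illustration under stated assumptions. `residual_level_db` is a made-up helper, the two "captures" are synthetic (a 997Hz sine and the same sine rounded to 20 bits), and real captures would first need to be time-aligned and level-matched, with the ADC's own noise floor limiting what can be seen.

```python
import math

def residual_level_db(capture_a, capture_b):
    """Peak level of (a - b) in dB relative to the peak of capture_a.

    Hypothetical helper: assumes both captures are already
    time-aligned, level-matched and of equal length.
    """
    peak_ref = max(abs(x) for x in capture_a)
    peak_diff = max(abs(x - y) for x, y in zip(capture_a, capture_b))
    if peak_diff == 0:
        return float("-inf")  # perfect null, no difference at all
    return 20 * math.log10(peak_diff / peak_ref)

# Synthetic stand-ins for two captures (assumed 48kHz rate, 1 second).
rate = 48000
a = [math.sin(2 * math.pi * 997 * n / rate) for n in range(rate)]
b = [round(x * 2**19) / 2**19 for x in a]  # same signal rounded to 20 bits

print(residual_level_db(a, b))  # level of the difference, in dB below peak
```

If the residual between the two real scenarios turned out to sit far above the 20bit level, that would point to a louder difference than the one assumed in the hypothesis.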

Hearing thresholds, as commonly given, tend to already be fairly optimistic. Some aren't even average values, but the very best someone got under conditions made to get the best possible result (ideal test signal, ideal delays...). So it's important to know where they come from and to not underestimate how rare it is for someone to do better (except kids; kids have undamaged young ears, and if they're ever going to break some hearing record, that's when they'll do it, not when they're 45).