MrHaelscheir

100+ Head-Fier

This is getting quite off-topic unless we are now talking about objective alternatives to DAC, amp, and cable magic for achieving soundstaging or imaging improvements. I don't know much about Apple's implementation of Spatial Audio, but if it is true that there is only one official Dolby Atmos mix for a given track, then I would consider it feasible that errors in the computer-vision-based HRTF calculation, as well as inherent tonal differences between the headphone and speaker responses in the absence of in-ear calibration, could contribute to the perception of alterations to the mix. Mix panning alters channel balance within different frequency bands, if not the entire spectrum, after all, doesn't it? Though at that point, we are arguing semantics of intent. I would expect that Spatial Audio does inherently have to virtualize the standardized angular positions of each channel, which may or may not match those of your own physical Dolby Atmos system due to errors in the former or the latter. The presence of reflections in a room, or the absence thereof from the headphone experience, could also alter tonality and perhaps some aspects of imaging. But I certainly know nothing about how your listening room is currently set up and whether there could be sufficient comb filtering to cause vocals to be panned incorrectly.

Regardless, of course the head-tracking solution will be altering the frequencies instead of keeping them "unchanged in real world space", but don't forget that all this is doing is trying to mimic the same alterations and filtering your own ears make when being reoriented within "real world space". Maybe it is true that Apple's Spatial Audio implementation is inadequate, at least for some. Maybe the Smyth Realiser A16 will provide a better match in sound and panning for your own system. Otherwise, whether there is an "alteration to sound different" depends on what frequencies finally reach your eardrums within both systems. In my case below, I can demonstrate how, at least for certain directions, I can measurably calibrate the combination of transfer functions from SPARTA Binauraliser NF (PC loopback and monitoring through the Reaper DAW) and my Meze Elite with hybrid pads to present a very similar frequency response at my ears as from my outdoor Genelec measurements:

(2024-03-19: See "Calibration using threshold of hearing curves" in https://www.head-fi.org/threads/rec...-virtualization.890719/page-121#post-18027627 (post #1,812). Everything I have said about neutral speakers actually having a lot more ear gain than neutral headphones was wrong.)

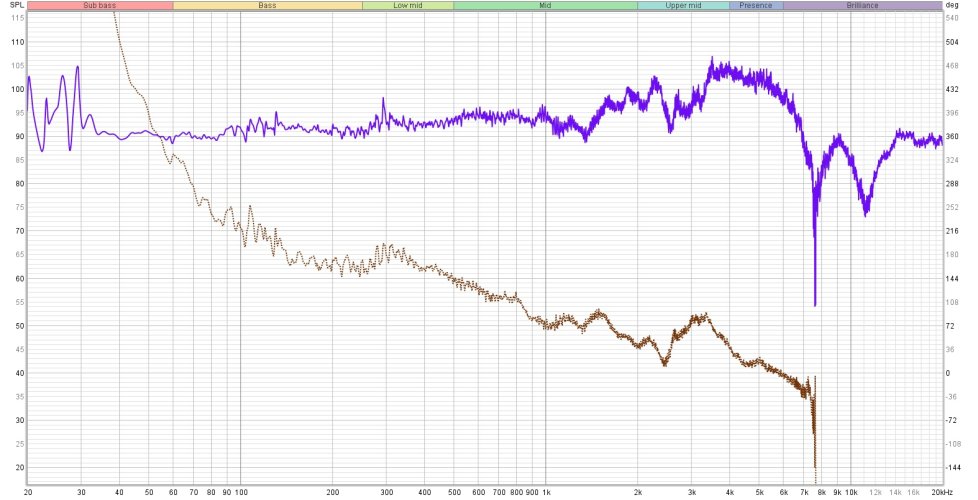

Figure 1: Left mic-compensated in-ear measurement with body and head rotated to the right by 30 degrees relative to the Genelec 8341A ("R 30 L") positioned in my large backyard with ground reflection absorption 1.5 m away, 1.187 m from the ground

Figure 2: Final left in-ear EQed response of Meze Elite with hybrid pads, positioned here for quick comparison

Figure 3: Left uncompensated "R 30 L" measurement with 1.2 ms impulse window as the target for the response from 1 kHz and up
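
As a rough check on why a 1.2 ms window pairs with a target from 1 kHz and up, here is a minimal Python sketch. It uses the rule of thumb that a gated measurement of length T only resolves frequencies down to about 1/T, and an image-source estimate of when the ground bounce arrives. The geometry is an assumption on my part (speaker and ear both 1.187 m high and 1.5 m apart, which the caption does not fully confirm), as is the 343 m/s speed of sound; the function names are just for illustration.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed, roughly 20 degrees C air


def lowest_resolved_frequency_hz(window_s):
    """Rule of thumb: a window of length T resolves down to about 1/T."""
    return 1.0 / window_s


def ground_reflection_delay_s(distance_m, source_height_m, ear_height_m):
    """Delay of the ground bounce relative to the direct sound (image source)."""
    direct = math.hypot(distance_m, source_height_m - ear_height_m)
    reflected = math.hypot(distance_m, source_height_m + ear_height_m)
    return (reflected - direct) / SPEED_OF_SOUND_M_S


window_s = 1.2e-3
f_min = lowest_resolved_frequency_hz(window_s)
# Assumed geometry: 1.5 m horizontal distance, both heights 1.187 m.
delay_s = ground_reflection_delay_s(1.5, 1.187, 1.187)
print(f"lowest resolved frequency: {f_min:.0f} Hz")
print(f"ground reflection delay: {delay_s * 1e3:.2f} ms")
print("window excludes ground bounce:", window_s < delay_s)
```

With a 1.2 ms window the limit works out to roughly 833 Hz, which is consistent with only trusting the windowed curve from about 1 kHz upward.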

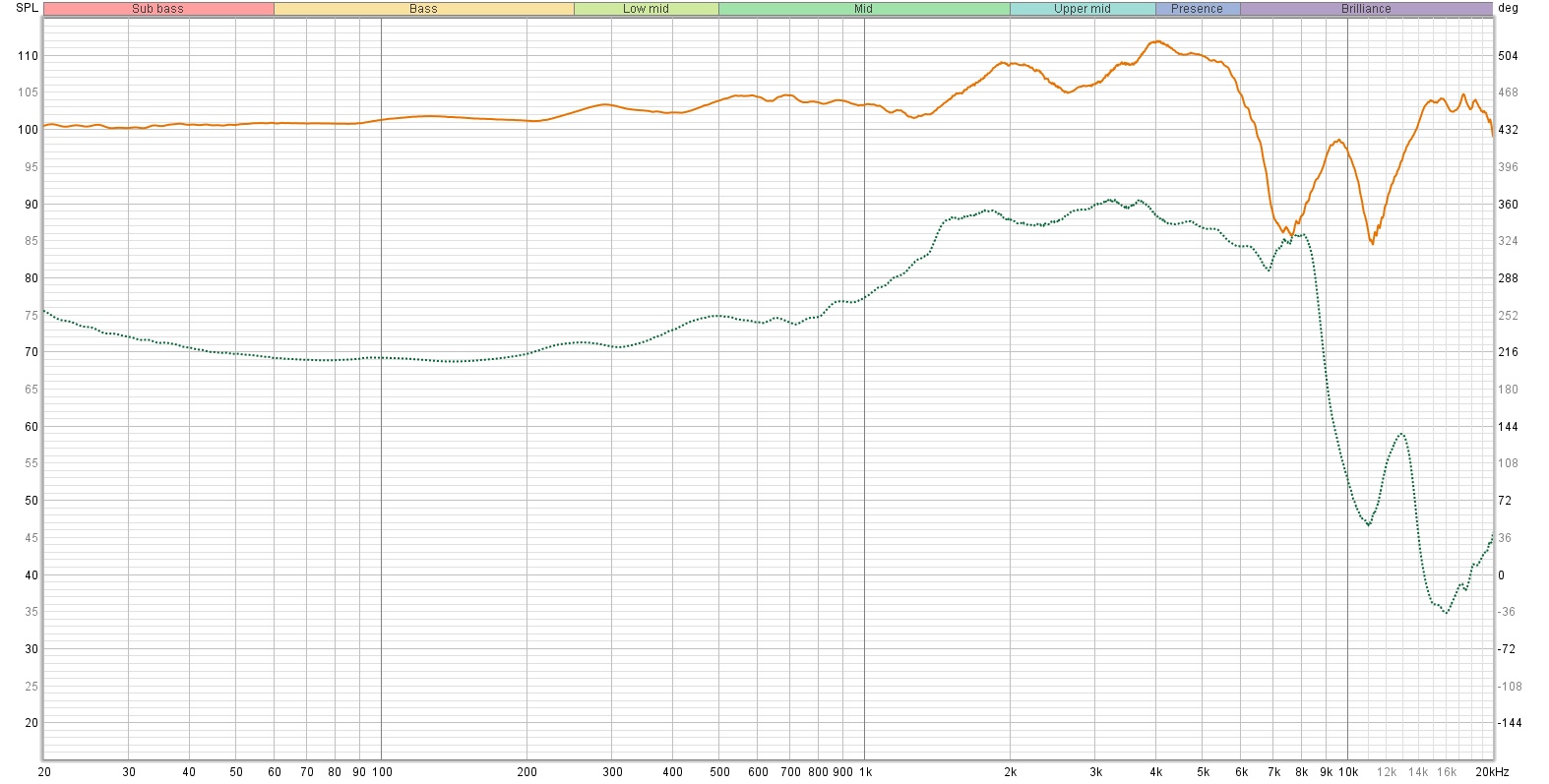

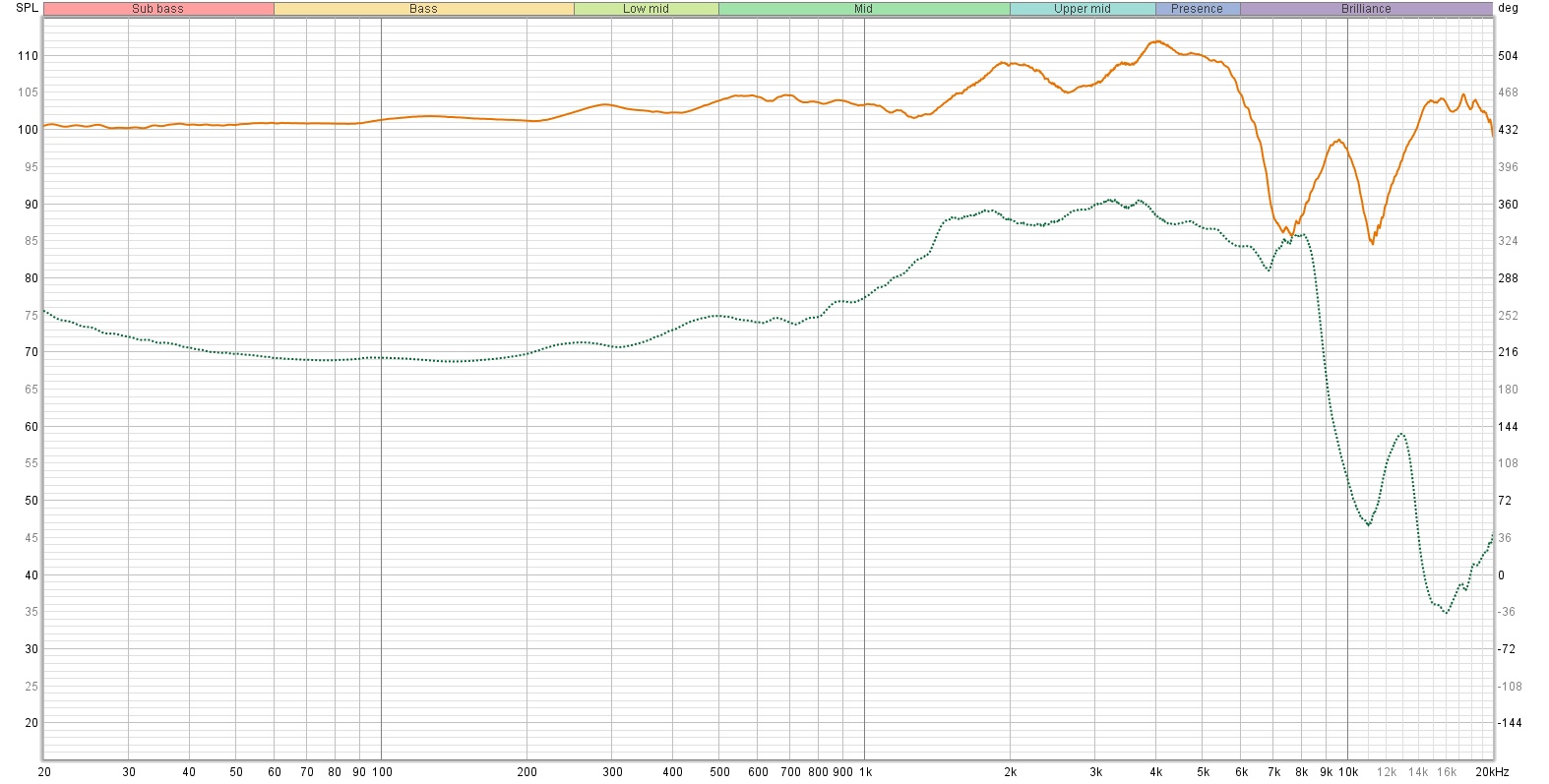

Figure 4: In Excel. Grey trace: Meze Elite hybrid pads left un-EQed in-ear measurement. Orange trace: Meze Elite hybrid pads left in-ear measurement after applying SPARTA Binauraliser NF's transfer function simulating the turning of the head 30 degrees to the right of the sound source. Blue trace: The transfer function per the latter subtracted from the former. Here, it appears that ear gain EQ is minimized for sound sources panned 30 degrees; it is mainly applying the measured directional nulls and top octave changes.
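
The same kind of subtraction can be done outside a spreadsheet. The following is a minimal Python sketch, not the procedure actually used for the figure: it interpolates two dB curves onto a shared set of frequencies (linear in dB on a log-frequency axis, which is my assumption) and subtracts them. The sign convention is a choice; here it is processed minus unprocessed, so the result is the gain the processing adds. All numbers are made up for illustration.

```python
import bisect
import math


def interp_db(freqs_hz, levels_db, f_hz):
    """Interpolate a dB curve on a log-frequency axis, clamping at the ends."""
    if f_hz <= freqs_hz[0]:
        return levels_db[0]
    if f_hz >= freqs_hz[-1]:
        return levels_db[-1]
    i = bisect.bisect_right(freqs_hz, f_hz)
    f0, f1 = freqs_hz[i - 1], freqs_hz[i]
    t = (math.log(f_hz) - math.log(f0)) / (math.log(f1) - math.log(f0))
    return levels_db[i - 1] + t * (levels_db[i] - levels_db[i - 1])


def difference_db(curve_a, curve_b, grid_hz):
    """Return curve_a minus curve_b in dB at each grid frequency."""
    fa, la = curve_a
    fb, lb = curve_b
    return [interp_db(fa, la, f) - interp_db(fb, lb, f) for f in grid_hz]


# Made-up numbers for illustration only, not the measurements in the figures.
processed = ([1000.0, 4000.0, 9000.0], [0.0, 3.0, -12.0])   # with binaural processing
unprocessed = ([1000.0, 4000.0, 9000.0], [0.0, 5.0, -4.0])  # plain headphone
grid = [1000.0, 2000.0, 4000.0, 9000.0]
transfer_db = difference_db(processed, unprocessed, grid)
print([round(x, 2) for x in transfer_db])
```

Working in dB turns the division of two magnitude responses into a plain subtraction, which is why this is easy to do by hand in a spreadsheet as well.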

Figure 5: Orange trace: The compensation curve from subtracting the target "real world space" "R 30 L" measurement from the uncalibrated virtualized Meze Elite hybrid pads "R 30 L" measurement. In practice, I have found only small variations within 1 dB between different seatings of the in-ear microphones, with greater variation coming from the measurement setup and procedures themselves. Here, I first manually apply a low-shelf filter, let REW generate the rest of the filters, do some fine-tuning of my own, export the filters into Equalizer APO, then spend another 30 minutes to an hour fine-tuning against subsequent in-ear measurements. It is certainly not fun having these mics stuck in your ears for hours and being tethered to your seat, but the results were certainly worth it for some recordings.

Figure 6: Left ear final PEQ. Yes, it's a lot of filters, but it does the job. The only noticeable artifacts came when listening to isolated transients, and these were only audible through the SPARTA Binauraliser NF or AmbiRoomSim chains as extra "zip" and decay, perhaps due to the convolution filters used.
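
To see how a long list of PEQ bands adds up, here is a minimal Python sketch that evaluates the combined magnitude of several peaking filters. It assumes the standard Audio EQ Cookbook (RBJ) peaking biquad and a 48 kHz sample rate; whether REW and Equalizer APO use exactly this parameterization for every filter type is an assumption on my part, and the filter values are hypothetical, not the ones in the figure.

```python
import cmath
import math


def peaking_gain_db(f_hz, fc_hz, gain_db, q, fs_hz=48000.0):
    """Magnitude in dB at f_hz of one Audio EQ Cookbook peaking biquad."""
    a_lin = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * fc_hz / fs_hz
    alpha = math.sin(w0) / (2.0 * q)
    b = (1.0 + alpha * a_lin, -2.0 * math.cos(w0), 1.0 - alpha * a_lin)
    a = (1.0 + alpha / a_lin, -2.0 * math.cos(w0), 1.0 - alpha / a_lin)
    z1 = cmath.exp(-1j * 2.0 * math.pi * f_hz / fs_hz)  # z^-1 on the unit circle
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return 20.0 * math.log10(abs(num / den))


def peq_gain_db(f_hz, filters, fs_hz=48000.0):
    """Cascaded filters multiply, so their dB gains add."""
    return sum(peaking_gain_db(f_hz, fc, g, q, fs_hz) for fc, g, q in filters)


# Hypothetical (fc_hz, gain_db, q) values, not the filters in the figure.
filters = [(3000.0, 4.0, 2.0), (9000.0, -6.0, 5.0)]
for f in (1000.0, 3000.0, 9000.0):
    print(f, round(peq_gain_db(f, filters), 2))
```

Each band reaches its full gain at its own center frequency, but neighbouring bands overlap, so the summed curve is what should be compared against the compensation target.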

Figure 7: The boosting from this EQ fortunately only adds second-order harmonics (plotted at the harmonic frequency) to the ear gain region and treble, still likely barely audible.

Figure 8: The Meze Elite hybrid pads "R 30 L" measurement taken on a different day with a completely redone in-ear microphone and headphone seating. Not too far off. Understandably, a main limitation is variations in the treble peaks and the filling of the 9 kHz null between headphone seatings, but otherwise, I had found that this barely detracts from directional virtualization.

Figure 9: My grail headphone for binaural head-tracking would have no nulls, but as evident from this measurement applying the virtualized direct 90-degree incidence sound source to my left ear, the 6 kHz peak and the 8.7 kHz and 14 kHz nulls I tend to measure through my headphones are accentuated, suggesting that they are actually part of my 90-degree free-field response.

If this demonstrates anything, it is that it can take a lot of work to match headphones to speakers' frequency response, never mind those in your actual listening room. After that, we are arguing semantics regarding how Apple Spatial Audio and Dolby Atmos are "not the same". I suppose we agree that a completely different Dolby Atmos system with completely different speakers, room acoustics, and hence frequency response is still playing "Dolby Atmos"; hence the question is how well a given binaural head-tracking system emulates Dolby Atmos with "different speakers" and perhaps "no room acoustics" (anechoic).

Anyways, one basic test would be to find a binaural panning tool for both the Dolby Atmos and Apple Spatial Audio systems and compare the perceived panning of individual sound sources. For my in-ear measurements, other than this HRTF measurement method only accounting for an upright torso rotating relative to a horizontally positioned sound source (head-tracking doesn't currently account for relative torso positioning anyways), I can say that dragging a sound source around in SPARTA Binauraliser NF quite convincingly positions it where indicated throughout the entire sphere, and quite responsively so. There are still some slight tonal differences, but I attribute this to my Genelecs being positioned and GLM-calibrated (within limitations) within the right half of a wide and untreated living room. For me, an absence of extraneous reflections is the key to absolute clarity and transparency into a recording. Yes, certain distance cues may be lost, but my living room isn't exactly large enough to fit the physical stereo width of an entire orchestra.
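
For what head-tracking has to do at minimum on the horizontal plane, here is a minimal Python sketch. It is not how SPARTA, Apple, or Dolby implement it; the function names, the sign convention (positive angles to the right), and the measurement grid are all assumptions for illustration. The idea is only that a room-fixed source keeps its position while the head yaw is subtracted to find which HRTF direction to apply.

```python
def wrap_degrees(angle_deg):
    """Wrap an angle to the range [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def source_azimuth_relative_to_head(source_az_deg, head_yaw_deg):
    """Azimuth of a room-fixed source as seen from the rotated head.

    Convention assumed here: positive angles are to the right.
    """
    return wrap_degrees(source_az_deg - head_yaw_deg)


def nearest_measured_direction(rel_az_deg, measured_az_deg):
    """Pick the measured HRTF azimuth closest to the requested one."""
    return min(measured_az_deg, key=lambda az: abs(wrap_degrees(az - rel_az_deg)))


measured = [-90.0, -60.0, -30.0, 0.0, 30.0, 60.0, 90.0]  # hypothetical grid
rel = source_azimuth_relative_to_head(0.0, 30.0)  # source ahead, head turned 30 right
print(rel, nearest_measured_direction(rel, measured))
```

Turning the head 30 degrees to the right of a source straight ahead puts that source 30 degrees to the left of the nose, which corresponds to the "R 30 L" case measured above. A real renderer would interpolate between measured directions rather than snap to the nearest one.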
