ra990


Cool, never knew that difference between the two. TT2, for example, detects which channel is which and swaps them automatically.

Dave's software has no flag recognition, and channel mix-ups can indeed happen.

The dual BNCs each carry a 384k mono signal, Left and Right. You can swap your stereo channels by swapping the BNC 1 and 2 leads.

Looks like I was wrong. The S/PDIF data format doesn't have a mono option. Is this a custom encoding by Chord?

I'm not sure. I do remember reading that the L/R split over the dual BNC is only done at the full 705/768k upsampling rate. Below that, I think they carry an identical (up to 384k) stereo signal. Maybe Rob will chime in and explain how this works.

I have just had a play with the M Scaler and my Dave. Below 705/768, only BNC output 1 on the M Scaler carries a signal; i.e. below 705/768, if one uses M Scaler output 2 into the Dave, no music is heard. This is identical to the way the SRC.DX works, where the dual outputs are only operational for a 705/768 signal; below that, only output 1 is operational.

So when you are transmitting 705/768, do you still use S/PDIF encoding, or are you actually using two S/PDIF streams, each encoded in stereo but carrying the same channel information (i.e. the channel A and channel B subframes carry the same information)?

Edit: this is on Dave. Not sure if it works like that with TT2.

@Rob Watts or @GoldenOne can you drop some technical knowledge on me.

I've been chatting to a member on ASR who's been very respectful and helpful as I tried to balance the conversation that I had with Rob on my YouTube channel by asking similar questions at ASR.

This is a post that came back that I would like some insight on in relation to the benefits of upsampling and any evidence available relating to it.

Here's the post which was delivered respectfully and with the right intent:

You don't improve the sound by upsampling. Once again, the reason you don't improve the sound by upsampling is not because I can't hear any improvement or someone else can't hear any improvement. The reason is that upsampling simply, and literally, just duplicates samples. So if you upsample from 44.1kHz to 176.4kHz, that's 4x oversampling, which means every original sample is replicated three more times - it's copy-pasted three times so that every original sample is now four samples. All four samples are identical - there is no "interpolation" like you might get with a TV that increases the frame rate by creating new frames that are combinations of the frames before and after them. That's not how upsampling works - it just copies the existing sample exactly.

It might be helpful or useful to ask: why not create a digital upsampling system that DOES interpolate samples, that looks at Sample 1 and Sample 2 and creates a new Sample 1.5 in between them that is a combination of them, to "smooth" the transition from Sample 1 to Sample 2, just like video frame interpolation on a TV smooths the perceived motion of the moving video?

The answer is that digital audio doesn't work that way: there is only one way to get from Sample 1 to Sample 2. There is no need for interpolation in the digital domain, and in fact the idea of synthesizing such an interpolated new sample doesn't even make any sense. The reconstruction filter does the smoothing during the digital-to-analogue conversion step. It doesn't - and can't - happen within the digital domain.

The only scenario in which there is more than one way to get from Sample 1 to Sample 2 is if the original audio signal contains frequencies higher than 1/2 the sample rate of the digital recording device. That's why analogue signals going into an ADC (analogue to digital converter aka a digital recorder) have to be (or at least should be!) band-limited so that they contain no frequencies higher than 1/2 the sample rate.

This is the same principle that dictates that any frequency needs to be sampled only twice - and that sampling it more than that does nothing and changes nothing.

So no, HQPlayer's upsampling does nothing - or more precisely, increasing the sample rate does not change or refine the sound, and it doesn't enhance time accuracy because sample rate has nothing to do with time accuracy - it has to do with which frequencies can be accurately encoded. If you try to record a 15kHz signal using a 20kHz sample rate, then there will be a "time inaccuracy" because a 20kHz sample rate can only encode frequencies up to 10kHz. So it will encode that 15kHz signal as 5kHz (10kHz minus 5kHz instead of the original and proper 10kHz + 5kHz = 15kHz). So the "time inaccuracy" will be that the frequency - the speed, the timing - of the signal will be aliased; reproduced at 5000 cycles per second instead of the original 15000 cycles per second. But as I'm sure you can see, that "timing inaccuracy" would not be subtle (to say the least!) and would be an indication of a fundamental operational error rather than a subtle change or improvement in the "refinement," "pacing," or "precision" of the sound.
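The folding arithmetic in this example can be checked numerically. The following is a minimal Python sketch, and the function name `alias_frequency` is made up for illustration. A tone above half the sample rate reappears mirrored around Nyquist, and its samples are indistinguishable from those of the alias tone.

```python
import math


def alias_frequency(f_signal, f_sample):
    """Frequency at which a pure tone appears after sampling at f_sample.

    Tones at f and f + k*f_sample give identical samples, and a tone at
    f_sample - f gives the same samples as f with inverted sign, so every
    tone folds into the range 0 .. f_sample / 2.
    """
    f = f_signal % f_sample       # fold into 0 .. f_sample
    return min(f, f_sample - f)   # mirror into 0 .. f_sample / 2


fs = 20_000
print(alias_frequency(15_000, fs))  # 5000

# The samples of a 15 kHz sine taken at 20 kHz match those of a
# phase-inverted 5 kHz sine (up to floating-point rounding).
s15 = [math.sin(2 * math.pi * 15_000 * n / fs) for n in range(16)]
s5 = [-math.sin(2 * math.pi * 5_000 * n / fs) for n in range(16)]
print(max(abs(a - b) for a, b in zip(s15, s5)) < 1e-9)  # True
```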

As has been explained by folks with far more knowledge than I have, increasing the sample rate does allow the reconstruction filter to operate in a larger frequency range above the limit of human hearing but below the Nyquist limit of 1/2 the sample rate. However, as those with more knowledge than me - including Amir - have shown, Chord products do not need to take advantage of that extra "wiggle room" - instead they use their gazillion taps to create a very well-implemented reconstruction filter that has tons of ultrasonic attenuation and does its work in a very small/narrow frequency band (Amir has repeatedly acknowledged that Chord products have great reconstruction filters). So with Chord products, to the best of my knowledge even this one potential implementation benefit of oversampling is not necessary or taken advantage of.

Thanks for the detailed post. Just on the studies that found some listeners hearing differences: were they younger listeners who could hear some of the roll-off seen in some of the filters?

So there is always quite a lot of confusion about upsampling, with a few recurring areas that people are mistaken or unsure about:

- What upsampling is actually doing

- Does upsampling give you information that was not already there?

- How is external oversampling any different than what your DAC is doing?

- Nyquist theory in general

This is a misunderstanding of point 1 / how oversampling works. Oversampling is not just duplicating samples.

You COULD do this, and this method is called 'Zero-Order-Hold' (ZOH). But it is not what most DACs or oversampling software do, as there are various issues with it.

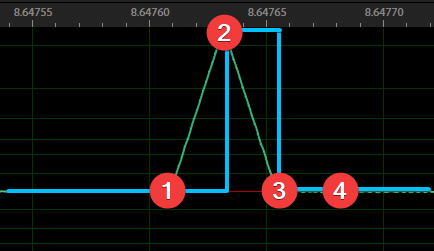

If we take a single impulse response test, which is a deliberately illegal signal representing ALL frequencies at maximum level, it looks like this:

One digital sample at full volume with silence either side.

If we were to apply ZOH/copypaste oversampling, the result would look like this:

This is problematic because, in copy-pasting the samples to make this square pulse, the result now has frequency components above the Nyquist frequency. And when oversampling other signals, you will get imaging/aliasing.
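To make the copy-paste case concrete, here is a small Python sketch. The helper names are illustrative. It applies ZOH to a single-sample impulse. It then evaluates the equivalent 4-tap "all ones" hold filter at 1.5x the original sample rate, a frequency well above the original Nyquist limit, to show how little that region is attenuated.

```python
import cmath
import math


def zero_order_hold(samples, factor):
    """Upsample by repeating every sample `factor` times ('copy-paste' / ZOH)."""
    out = []
    for s in samples:
        out.extend([s] * factor)
    return out


def gain_db(h, omega):
    """|sum(h[n] * e^{-j*omega*n})| in dB, relative to the DC gain sum(h)."""
    resp = sum(x * cmath.exp(-1j * omega * n) for n, x in enumerate(h))
    return 20 * math.log10(abs(resp) / abs(sum(h)))


factor = 4
impulse = [0.0, 0.0, 1.0, 0.0, 0.0]  # one full-scale sample, silence either side
held = zero_order_hold(impulse, factor)
print(held)  # the single sample becomes a 4-sample-wide square pulse

# Repeating samples is equivalent to zero-stuffing followed by a 4-tap
# all-ones filter at the new rate. 1.5x the ORIGINAL sample rate
# corresponds to omega = 3*pi/factor at the new rate.
zoh_taps = [1.0] * factor
print(round(gain_db(zoh_taps, 3 * math.pi / factor), 1))  # roughly -11 dB
```

An image sitting there is only about 11 dB down, which matches the "almost no ultrasonic attenuation" behaviour described for ZOH.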

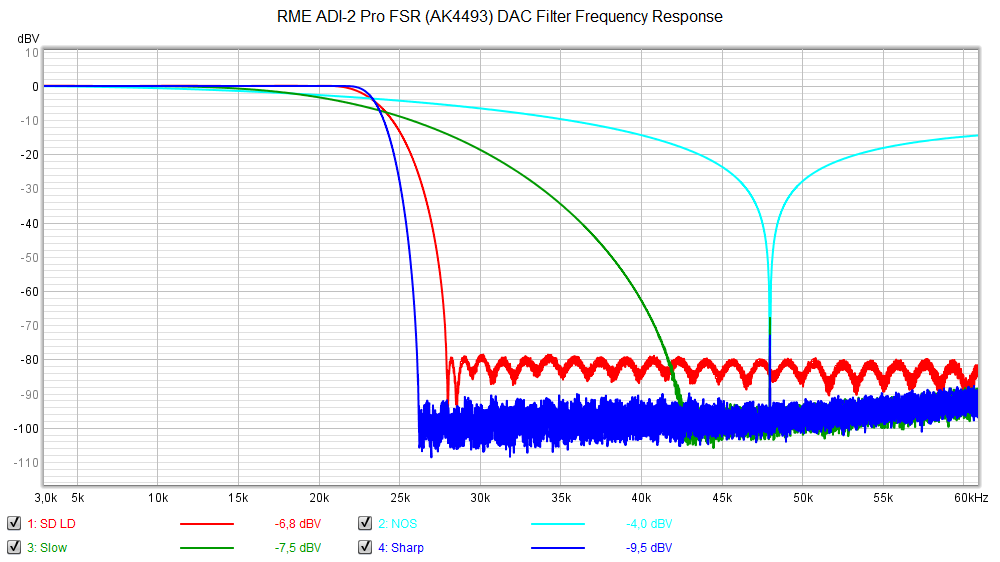

Take, for example, the frequency response of the ADI-2 Pro's Zero-Order-Hold oversampling filter (light blue):

Almost no ultrasonic attenuation/filtering whatsoever.

However, if we apply proper sinc oversampling (dark blue in the image above), there is very little imaging/aliasing (though still some, because the filter cannot attenuate fast enough), we get proper ultrasonic attenuation, and the result of the impulse response test looks like this:

Oversampling is NOT just copy-pasting samples. The maths involved is complex.
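For comparison with the copy-paste case, here is a minimal Python sketch of band-limited (sinc) upsampling of the same kind of impulse. For simplicity, it assumes a plain truncated sinc kernel; real products use far longer, windowed filters. Each output point is a sinc-weighted sum of the input samples. The original samples are therefore preserved, and the points in between follow the sinc shape, including its ringing.

```python
import math


def sinc(x):
    """Normalised sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def sinc_upsample(samples, factor, half_width=16):
    """Upsample by `factor` using a truncated sinc interpolation kernel.

    Output point m sits at time m / factor (in input-sample units) and is the
    sum of the nearby input samples weighted by sinc(distance).
    """
    out = []
    for m in range(len(samples) * factor):
        t = m / factor
        k0 = math.floor(t)
        acc = 0.0
        for k in range(k0 - half_width + 1, k0 + half_width + 1):
            if 0 <= k < len(samples):
                acc += samples[k] * sinc(t - k)
        out.append(acc)
    return out


factor = 4
impulse = [0.0] * 8 + [1.0] + [0.0] * 8  # full-scale sample at index 8
up = sinc_upsample(impulse, factor)

print(round(up[8 * factor], 3))      # 1.0: the original sample is unchanged
print(round(up[8 * factor + 2], 3))  # 0.637: halfway to the next sample (2/pi)
print(round(up[9 * factor + 2], 3))  # -0.212: negative ringing, not a flat hold
```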

Some DACs actually do this (create new samples in between the existing ones), and the method is called linear interpolation.

i.e. add extra samples along a straight line drawn from the previous sample to the next.

Denafrips DACs' 'NOS' mode is actually doing this, so instead of the square result we saw from the ZOH processing, we see this:

To illustrate why there is a triangle, it's easier to look at it with the samples overlaid.

After sample 1, the oversampling process adds extra samples in a straight line directly toward sample 2.

And then does the same from sample 2 to sample 3, resulting in a triangle shape.

The blue square overlaid shows a NOS or ZOH result instead, where sample 1 is copied, then it moves up to the value of sample 2 and just holds/duplicates it until sample 3 where it moves back down again.
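The triangle can be reproduced with a short Python sketch of linear-interpolation upsampling. The function name is illustrative, and it assumes silence after the last sample.

```python
def linear_interpolate(samples, factor):
    """Upsample by drawing straight lines between neighbouring samples."""
    out = []
    for i, s in enumerate(samples):
        # Assumption: silence (0.0) after the final sample.
        nxt = samples[i + 1] if i + 1 < len(samples) else 0.0
        for j in range(factor):
            out.append(s + (nxt - s) * j / factor)
    return out


impulse = [0.0, 1.0, 0.0]  # sample 1, sample 2 (full scale), sample 3
print(linear_interpolate(impulse, 4))
# [0.0, 0.25, 0.5, 0.75, 1.0, 0.75, 0.5, 0.25, 0.0, 0.0, 0.0, 0.0]
```

This triangle is a ZOH rectangle convolved with itself. Its frequency response is therefore the ZOH response squared, which is where the doubled droop figure comes from.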

The problem with this linear interpolation option is that you are not really solving any problems, and are in fact causing more.

Firstly, you'll have more treble rolloff than a NOS DAC would.

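At Nyquist (22.05kHz for a 44.1kHz sample rate), the argument of the response is π/2, so:

$$\text{NOS (ZOH)}:\quad \frac{\sin(\pi/2)}{\pi/2} = \frac{2}{\pi} \approx 0.637 \;\Rightarrow\; 20\log_{10}\frac{2}{\pi} \approx -3.92\ \text{dB droop}$$

$$\text{Linear interpolation}:\quad \left(\frac{\sin(\pi/2)}{\pi/2}\right)^2 = \frac{4}{\pi^2} \approx 0.405 \;\Rightarrow\; 20\log_{10}\frac{4}{\pi^2} \approx -7.84\ \text{dB droop}$$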
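The two droop figures can be reproduced with a short Python sketch; the helper name is hypothetical. It uses the standard hold response sin(x)/x with x = πf/fs, and squares it (doubles it in dB) for linear interpolation.

```python
import math


def hold_droop_db(f, fs, order=1):
    """Gain in dB at frequency f of a hold-type reconstruction at rate fs.

    order=1: zero-order hold (NOS), response sin(x)/x with x = pi*f/fs.
    order=2: linear interpolation, the same response squared.
    """
    x = math.pi * f / fs
    if x == 0:
        return 0.0  # no droop at DC
    return order * 20 * math.log10(math.sin(x) / x)


fs = 44_100
nyquist = fs / 2  # 22.05 kHz, where x = pi/2
nos = hold_droop_db(nyquist, fs, order=1)
linear = hold_droop_db(nyquist, fs, order=2)
print(f"NOS/ZOH: {nos:.2f} dB, linear interpolation: {linear:.2f} dB")
# NOS/ZOH: -3.92 dB, linear interpolation: -7.84 dB
```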

And when looking at the result of this linear interpolation for a higher-frequency signal like 15kHz, you'll see a lot of weird behaviour because, once again, there is next to no ultrasonic filtering and the aliasing/imaging is still present:

So just 'smoothing' things with basic interpolation doesn't work. Again, you have to use proper sinc interpolation.

(You can arguably use a Gaussian filter, but that's a different debate entirely.)
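To put rough numbers on the imaging, here is a Python sketch under idealised assumptions. It treats ZOH (NOS) and linear interpolation as continuous-time hold responses at 44.1kHz (sinc and sinc squared), with no analogue output filter. It lists where the first images of a 15kHz tone land and how far below the tone they sit. The function names are illustrative.

```python
import math


def sinc_gain(f, fs):
    """|sin(pi f/fs) / (pi f/fs)|: amplitude response of a zero-order hold."""
    x = math.pi * f / fs
    return 1.0 if x == 0 else abs(math.sin(x) / x)


def image_levels_db(f, fs, order, n_images=2):
    """Level of the images at k*fs -/+ f, in dB relative to the wanted tone.

    order=1 models ZOH / NOS, order=2 models linear interpolation.
    """
    wanted = sinc_gain(f, fs) ** order
    levels = {}
    for k in range(1, n_images + 1):
        for img in (k * fs - f, k * fs + f):
            levels[img] = 20 * math.log10(sinc_gain(img, fs) ** order / wanted)
    return levels


for name, order in (("ZOH/NOS", 1), ("linear", 2)):
    for img, db in image_levels_db(15_000, 44_100, order).items():
        print(f"{name:8s} image at {img / 1000:5.1f} kHz: {db:6.1f} dB")
```

Because |sin(πf/fs)| is the same for the tone and all of its images, each image's level relative to the tone reduces to f/f_image (or its square). The first image at 29.1kHz therefore comes out only about 5.8 dB down with ZOH and about 11.5 dB down with linear interpolation.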

This is a misunderstanding of points 2 and 4 up top.

Upsampling is NOT intending to create any information above the nyquist frequency or information that was not already there.

If your file is 44.1kHz, you CANNOT get back any information that was above 22.05kHz.

Upsampling is about better reconstructing the information you already have. Nyquist does NOT state that the sampled information you have IS the full 22.05kHz-bandwidth signal; it says you can RECONSTRUCT that information... if you perfectly band-limit the signal.

Band-limiting is exactly what the sinc interpolation is doing: it cuts off everything above 22.05kHz, so that you are left with the reconstructed original signal, with all information up to 22.05kHz intact.

The problem is that we cannot make a perfect band-limiter. This would require infinite processing power.

So instead we can only do a 'pretty damn good' job, by doing exactly what Rob Watts, PGGB and HQPlayer are all doing: throwing a ton of compute power at the problem to enable the use of EXTREMELY high tap count sinc reconstruction filters.
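Here is a rough numerical illustration of why "more taps" matters, as a Python sketch under simplifying assumptions. It evaluates the textbook Whittaker-Shannon reconstruction sum for a 10kHz sine sampled at 44.1kHz, at a point halfway between two samples, but keeps only `taps` terms (a truncated, un-windowed sinc). This is not how the M Scaler, PGGB or HQPlayer are implemented; it only shows the truncation error shrinking as the filter gets longer.

```python
import math


def sinc(x):
    """Normalised sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def reconstruct(samples, t, taps):
    """Whittaker-Shannon reconstruction at time t (in sample units), using
    only the `taps` samples centred on t, i.e. a truncated sinc filter."""
    centre = int(t)
    half = taps // 2
    return sum(samples[k] * sinc(t - k)
               for k in range(centre - half + 1, centre + half + 1)
               if 0 <= k < len(samples))


fs = 44_100
f = 10_000   # a tone well inside the audio band
n = 40_000   # long enough to hold the largest filter below
samples = [math.sin(2 * math.pi * f * k / fs) for k in range(n)]

t = n // 2 + 0.5  # halfway between two samples
true_value = math.sin(2 * math.pi * f * t / fs)
for taps in (16, 128, 1024, 16384):
    err = abs(reconstruct(samples, t, taps) - true_value)
    print(f"{taps:6d} taps: error {err:.2e}")
```

The error falls roughly in proportion to 1/taps. A perfect result would need the infinite sum.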

As a result you go from a typical filter inside a DAC that produces a band limit / cutoff like this:

To something like this:

Additionally, you can use various windowing functions to optimise the filter for performance in different areas. The WTA/Chord filter uses a windowing function which looks like this:

This is a time-domain optimised window and is intended to ensure that time-domain accuracy of transients is as good as possible.
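The effect of a window can be explored with a short Python sketch. It builds a half-band windowed-sinc low-pass (cutoff at a quarter of the sample rate, 129 taps) twice: once with a rectangular window (plain truncation) and once with a standard Blackman window. It then reports the highest stopband gain above 0.6π rad/sample. Both windows are textbook choices picked for illustration. The WTA window itself has not been published, and this sketch looks only at stopband attenuation, not at the time-domain behaviour that window targets.

```python
import cmath
import math


def sinc(x):
    """Normalised sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def lowpass(half_len, window):
    """Half-band windowed-sinc low-pass with 2 * half_len + 1 taps.

    The cutoff is a quarter of the sample rate (0.5 * pi rad/sample).
    window is "rectangular" (plain truncation) or "blackman".
    """
    taps = []
    for n in range(-half_len, half_len + 1):
        if window == "rectangular":
            w = 1.0
        elif window == "blackman":
            w = (0.42 + 0.5 * math.cos(math.pi * n / half_len)
                 + 0.08 * math.cos(2 * math.pi * n / half_len))
        else:
            raise ValueError(f"unknown window: {window}")
        taps.append(0.5 * sinc(0.5 * n) * w)
    return taps


def worst_stopband_db(taps, start=0.6 * math.pi, points=400):
    """Highest gain (dB relative to DC) on a grid from `start` to pi rad/sample."""
    dc = sum(taps)
    worst = -math.inf
    for i in range(points + 1):
        w = start + (math.pi - start) * i / points
        resp = abs(sum(h * cmath.exp(-1j * w * n) for n, h in enumerate(taps)))
        worst = max(worst, 20 * math.log10(max(resp, 1e-15) / dc))
    return worst


for name in ("rectangular", "blackman"):
    db = worst_stopband_db(lowpass(64, name))
    print(f"{name:11s} window: worst stopband gain {db:.1f} dB")
```

The Blackman version trades a wider transition band for far deeper stopband attenuation. Balancing transition width, attenuation and time-domain behaviour against each other is what choosing a window is about.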

Oversampling is not about adding back information above the Nyquist frequency; it is about better adhering to Nyquist theory with the information you do have. Nyquist theory has restrictions and conditions, and high-performance oversampling is a way to overcome them.

This seems to be a misunderstanding of point 4.

The reconstruction filter inside any DAC is doing EXACTLY the same task as external oversampling like the MScaler, HQPlayer or PGGB. It's not actually any different.

It just does not have the compute power to do it very well: DACs like the 9038Pro, for example, have only 128 taps internally, compared to the millions of taps you can use with external tools.

Unless you have a NOS DAC, oversampling is not optional. Your DAC is already doing it internally; it's just a question of whether to do it with a higher-quality tool or not.

Additionally, it's worth noting that the frequently made assertion that reconstruction filters are inaudible is completely unsubstantiated. To my knowledge, no study has ever reached that conclusion, and all the evidence that does exist regarding reconstruction filters, or native high-sample-rate files vs Redbook, has concluded that the differences are audible to at least some listeners.

Glad @GoldenOne answered that. But the WTA windowing function does not look like the one shown, as I have never published it, and it's visually different to that. But don't ask me what it does look like...

Sorry, should have clarified: that is not a publication or taken from any official Chord resource.

Should have researched before putting out YouTube videos. For Christ's sake, get a mod to add “mot” to your designation so we know you run a hifi YouTube channel for money.

My status as running a YouTube channel is in every single post I make, as part of my signature. The YouTube revenue model doesn't cover the cost of my time; I don't do the videos for money.