Intellectual certainty is a cure for ANY sort of uncertainty.

Obviously, a lot of this depends on what you're hoping for (or expecting).

Personally, when I listen to music, my goal is to hear exactly what the artist and mixing engineer intended.

And any doubt about whether that's the case takes away from my enjoyment.

(I may choose to alter a file if I detect what I consider to be a flaw, but I absolutely do not want anyone or anything making that decision for me.)

As I mentioned before, when I look at a picture on my monitor, I may not know what the original looked like, so I calibrate my monitor; that way I can trust that it is showing me what the original looked like.

I don't want to rely on my own judgment to decide whether what I'm seeing is as close to the original as I can tell; that's extra work. I'd rather just have a guarantee I can trust.

I look at my audio system much the same way; I may not know for sure what the original sounded like, so I rely on my system to let me hear what's there without alteration.

(Of course, I cannot know whether the music file I have is faithful to the true original, but at least I can ensure that I don't change it any further.)

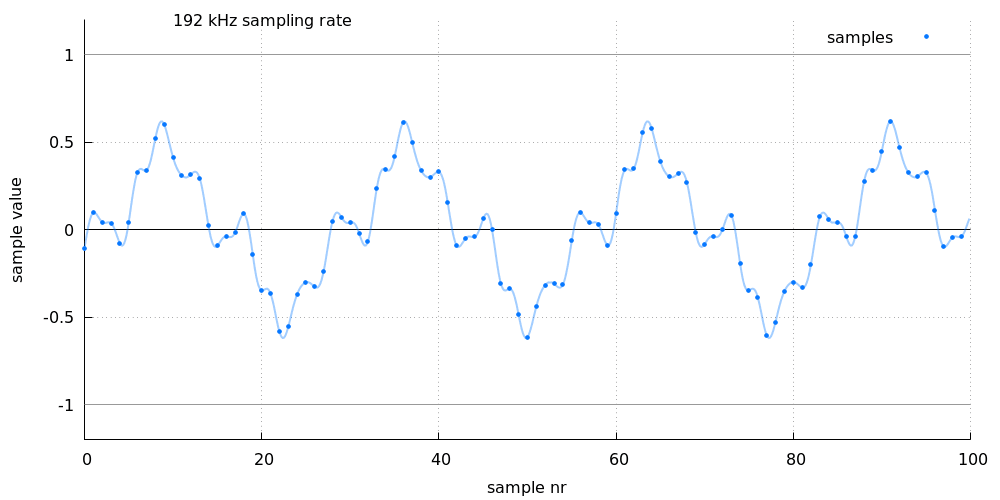

Back when MP3 was the norm, I recall various claims that "MP3 files sounded just like the original"; however, when I listened to them, I occasionally heard artifacts in certain recordings. (I don't recall the settings involved.)

And, when I investigated, I found that I WAS able to make up special test files that NO then-current MP3 encoder could encode without artifacts.

When you dig into the encoders, and the assumptions they make, it's often not difficult to figure out how to "trick" them (I used to test computer network products for a living).

And, with today's sampling synthesizers, any waveform I can concoct in a test file MIGHT turn up in electronic music.

A similar situation occurs when a videotape master is converted to DVD...

An algorithm sets the level of a filter that removes tape noise (which is necessary if you want to achieve a reasonable level of compression).

However, in specific instances, a particular visual feature (in this case, dark swirling clouds or smoke) ends up "tricking the intelligence" and being INCORRECTLY removed by the filter.

(The encoder usually does a very accurate job, but in some small percentage of instances it gets it significantly wrong.)

I haven't tried this with AAC, so it's possible that it NEVER makes the mistake of removing something that might have been audible...

However, because of the complexity of the algorithms involved, I would have to run an awful lot of tests to claim that I was 100.0% sure it would NEVER happen.
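
To put a rough number on "an awful lot of tests": suppose, purely as a simplifying assumption, that each encoded file independently has some small probability p of containing an audible artifact. If n files are checked and none fails, the standard "rule of three" bounds p at roughly 95% confidence. Treat this as an order-of-magnitude sketch, since real encoder failures depend on specific content and are not independent random draws:

$$
(1-p)^n \le 0.05 \quad\Longrightarrow\quad n \ge \frac{\ln 0.05}{\ln(1-p)} \approx \frac{3}{p}
$$

So ruling out even a one-in-10,000 failure rate with 95% confidence would take roughly 30,000 failure-free listening checks, and no finite number of tests reaches 100.0%.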

Alternatively, I would have to listen carefully to EVERY file I encoded to ensure that "it wasn't the one where the process failed".

Now, to a lot of people, reducing the size of their music library by 75%, even at the risk that one of their 10,000 songs might contain a single audible artifact, might seem like an excellent tradeoff.

However, since I have no issue whatsoever with storage size, and I'll admit to being a bit OCD when it comes to knowing what's going on, I'd still rather take the sure thing.

It also boils down to a matter of process.

If I wanted to fit a bunch of files on an iPod, I could encode them, then carefully compare each to the original to confirm that it was audibly perfect.

However, since I'm not going to discard the original copy anyway, that's an awful lot of extra work (the time it would take is worth more to me than the cost of buying a bigger SD card).

(My "library drive" is simply one of the three copies of each file I retain, one live copy plus two backups, so if I were to create another AAC-encoded copy, it would simply be another copy to keep track of.)
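
On the "don't change it any further" point: one common way to confirm that a live copy and its backups are still bit-for-bit identical is to compare cryptographic hashes. Below is a minimal Python sketch using only the standard library (`hashlib`, `pathlib`). The function names are hypothetical, and SHA-256 is simply one reasonable choice of hash, not something the post specifies.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file.

    The file is read in 1 MiB chunks so large lossless files
    (WAV, FLAC, etc.) never need to fit in memory at once.
    """
    digest = hashlib.sha256()
    with Path(path).open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copies_are_identical(paths):
    """Return True only if every file in `paths` has the same SHA-256 digest.

    Any difference, even a single flipped bit, changes the digest, so
    matching digests mean the copies are identical (barring an
    astronomically unlikely hash collision).
    """
    paths = list(paths)
    if len(paths) < 2:
        raise ValueError("need at least two copies to compare")
    digests = {sha256_of_file(p) for p in paths}
    return len(digests) == 1
```

For example, `copies_are_identical(["live/track01.flac", "backup1/track01.flac", "backup2/track01.flac"])` (hypothetical paths) returns `True` only if all three files match exactly.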

Also, to be honest, I tend to compartmentalize how I prioritize my music.

I could probably cheerfully get rid of about 90% of the CDs I currently own - and simply listen to whatever version of those tunes I can punch up on Tidal.

However, for the small percentage of my collection that I very much care about, a single bit out of place counts as a "fatal flaw".

And, much as everyone on this thread likes to disparage such claims, I absolutely have encountered situations where certain details or flaws that were totally inaudible on my previous equipment became audible on a new piece of equipment.

Therefore, I am very disinclined to believe with absolute certainty that I won't discover differences tomorrow that are genuinely inaudible to me today.

(And, again, the EASIEST way to guard against that is simply to keep the original file intact.)

And, yes, I DID have quite a few MP3 copies of albums "back in the day"...

And, yes, it cost me a lot of money to go out and buy the CDs for all of them when I realized there was an audible difference.

Is intellectual certainty a cure for OCD? You probably don't need intellectual certainty if you simply do a controlled listening test to determine your perceptual thresholds, then go with the result and stop worrying. That's what I did. I don't care about theoretical sound that I've proven to myself I can't hear. I guess that's a form of intellectual certainty too!
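
A controlled listening test like this is often run as a blind ABX test, though that is an assumption here; the post doesn't say which protocol was used. Scoring one comes down to a binomial tail: how likely is it to get at least k of n trials right by coin-flip guessing? A minimal Python sketch, with a hypothetical function name:

```python
from math import comb


def abx_p_value(correct, trials):
    """Probability of scoring at least `correct` out of `trials` by pure
    guessing, where each trial is a 50/50 chance (one-sided binomial tail)."""
    if not 0 <= correct <= trials:
        raise ValueError("correct must be between 0 and trials")
    # Count the outcomes with `correct` or more right answers,
    # then divide by the 2**trials equally likely outcomes.
    hits = sum(comb(trials, k) for k in range(correct, trials + 1))
    return hits / 2 ** trials
```

For example, 12 correct out of 16 gives about a 0.038 probability that guessing alone would do that well, which falls under the commonly used 0.05 cutoff. A score of 8 of 16 (about 0.60) is indistinguishable from guessing, meaning no difference was detected under those test conditions.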