Hello brave Head Fi Scientists! I would like to acknowledge the consistent efforts from the core group in this particular sub forum in pushing for objective standards here and particularly excellent discussion on the virtue of double blind tests for audio.

Double blind testing is a critical tool in evaluating subjective claims like the ones we see thrown around in audiophilia constantly. Being able to take such a test and evaluate your own listening objectively is a very convincing experience. It's important to note, though, that double blind testing only accounts for discernible, conscious differences, and there are undeniably a variety of phenomena that affect the human body in consistent, objective ways that are not perceptible to us.

For instance, if you were to run a (necessarily quick) double blind test on subjects to see if they could tell the difference between oxygen and carbon monoxide, the subjects would not be aware of any difference due to the lack of odor, and yet the carbon monoxide would kill them after a short while. It's an extreme scenario, but hopefully you get the point.

Why does it matter? Because our senses are routed first through the lower 'survival' brain for critical evaluations like fight or flight before the conscious upper brain is even made aware of the detection. In evaluating audio capabilities of the human hearing system then, are we artificially constraining results by focusing only on conscious perception?

I would like to invite your consideration...

The Subconscious Case for HD Audio

You hear a lot about cables, amps and DACs having subtle 'unmeasurable' effects on sound in the forum proper and other similar subjective audiophile communities. Less popular (at least these days) are discussions around lossy vs. lossless, and even rarer are folks claiming benefits from so-called 'HD audio' (for the purposes of this discussion, I'll take this to mean >48 kHz sampling; I'm not going to discuss quantization and 24 bit at all).

Part of this dismissal of the case for HD Audio and even lossy vs. lossless CD (Redbook) quality stems from the fact that it is fairly easy to do online double blind tests that toggle seamlessly back and forth between qualities and even offer 'tests' to gauge your ability to identify them correctly. These tests are exhausting but highly convincing... For instance, while I can discern lossy vs. lossless most of the time (slightly over 75% across tests), I can't statistically tell the difference between SD and HD audio myself. Indeed HD audio has consistently failed double blind experiments, whether it's SACD, HDCD, DSD, MQA (lol), or even 192/24 bit FLAC. I think this removes a lot of the speculative room in the hobby for people to claim audible improvements... it's much harder to properly double blind test things like cables or sources that require (blinded) helpers and precise level matching etc.
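For anyone wondering what "statistically tell the difference" means in practice, here is a minimal sketch of a one-sided binomial test for a forced-choice listening test. The trial counts below are made up for illustration (they are not my actual scores), and `abx_p_value` is just a name I picked for this example.

```python
from math import comb


def abx_p_value(correct: int, trials: int, chance: float = 0.5) -> float:
    """Probability of getting at least `correct` answers right out of
    `trials` by pure guessing (one-sided binomial test)."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )


# Hypothetical session: 12 of 16 correct (75%).
p = abx_p_value(12, 16)
print(f"p = {p:.4f}")  # p = 0.0384
```

A small p value means guessing alone is an unlikely explanation for the score. The same function works for any two-choice test, so you can plug in your own trial counts.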

So that is all tidy and nice for an objectivist leaning listener such as myself, right? Wrong. There exists objective data that humans not only hear the difference in music sampled above 44 kHz, but that we enjoy it more too! We just perceive these differences subconsciously, making listening tests largely invalid. If we instead look at electroencephalogram (EEG) data of listeners exposed to SD and HD music while engaged in an unrelated intellectual task, there are observable differences in the human neurological response:

https://www.frontiersin.org/article...attentional state without conscious awareness.

This isn't the first study on HD effects either; it builds on prior work. A series of studies has shown measurable differences in the brain response when HD content is present in music:

Oohashi et al., 2000, 2006; Yagi et al., 2003a; Fukushima et al., 2014; Kuribayashi et al., 2014; Ito et al., 2016.

It's expected to see increased alpha brainwaves (arousal!) when there are 'inaudible' HD frequency components.

Interesting takeaways from this more recent study:

-The effect takes a while to kick in, ~200s, so rapid switching A/B inherently is a non-starter!

-It lasts ~100s after the test too

-Playing just the >20kHz HD spectrum (without the music) didn't produce the same effect

-There were still no statistically meaningful differences between the subjective ratings of the SD / HD pieces under a forced choice condition, except for 'natural':

So maybe rather than rapid A/B testing, we should have subjects listen to several minutes of audio and simply ask which sounds more natural rather than high resolution, better etc... indeed the speculation around mechanisms is basically just 'sounds more natural' hand waving.

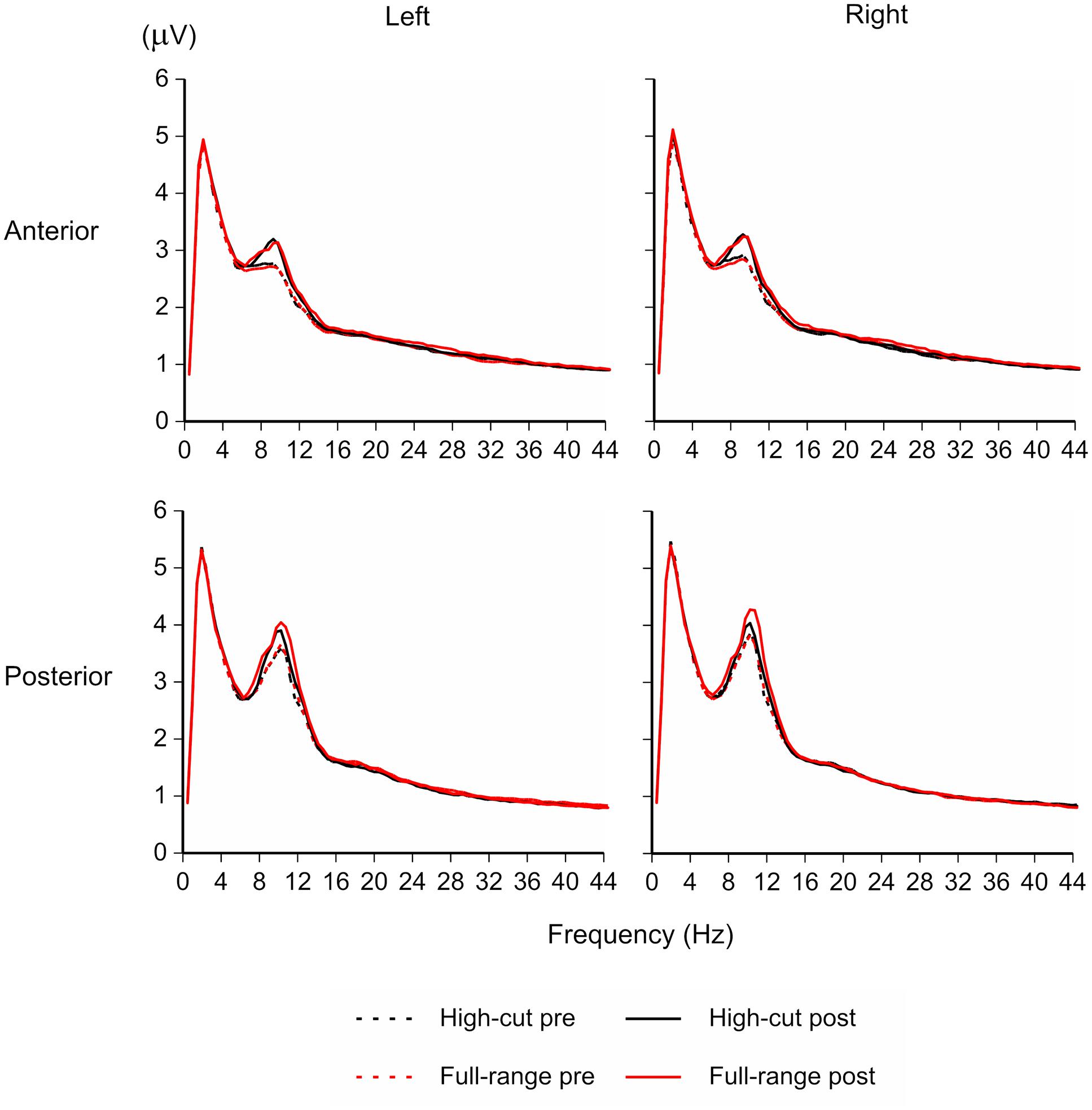

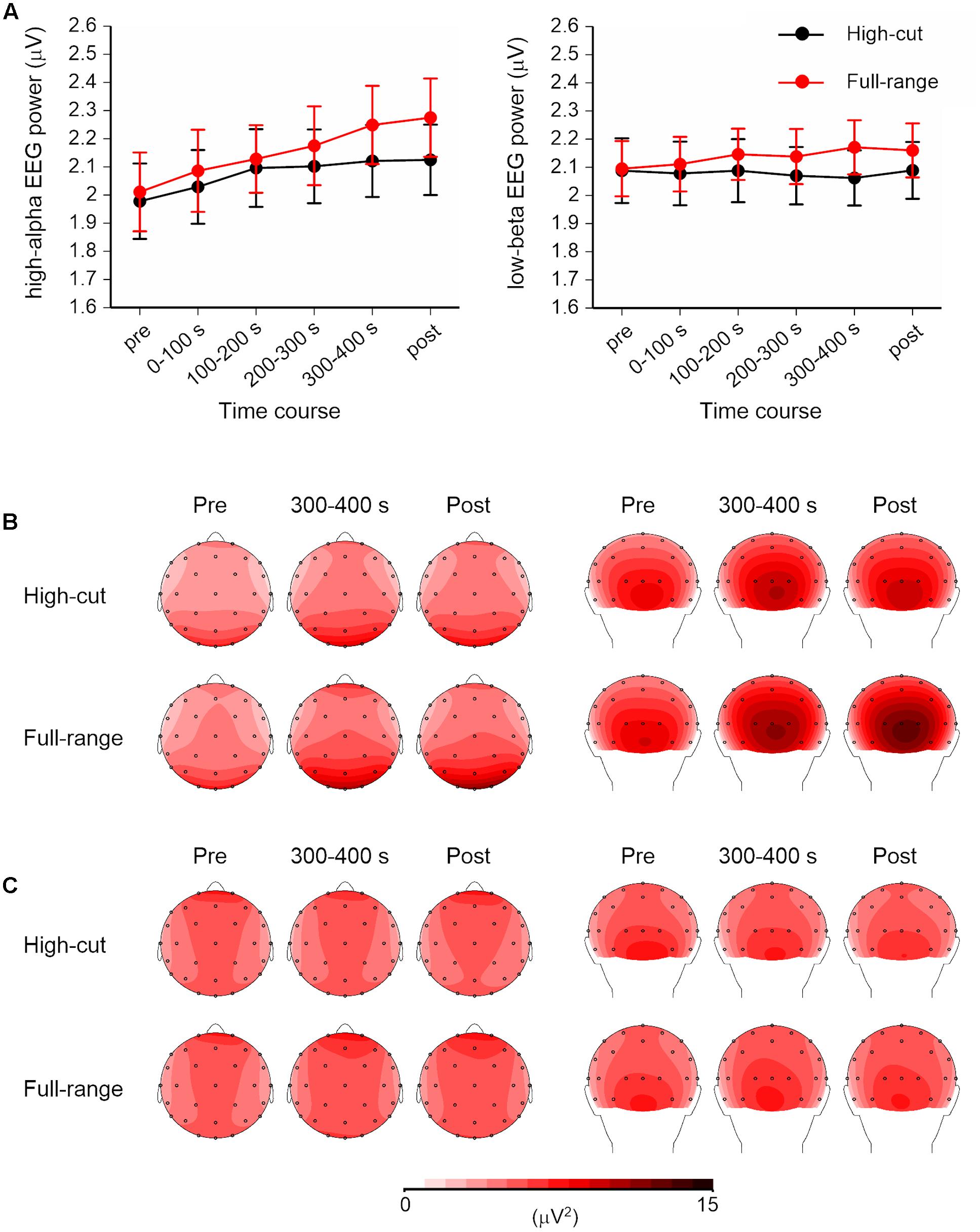

Here are the fun EEG plots:

In particular, note the difference post listening in the posterior, right brain activity. Full range subjects have 1/3 of a uV more activity at ~12Hz.

The top plots show the integrative effect. Note how alpha waves (arousal, pleasure) and, slightly less so, beta waves (vigilance) pull ahead the longer the listener is exposed to HD audio. Just think of how much more productive and pleasurable my life is after listening to HD music for hours.

On the Importance of Timing

We electrical engineers tend to think of everything in the frequency domain, as that is the convenient design and analysis space for electronics, but our hearing isn't a radio and we don't hear in the frequency domain directly. We perceive things transiently and must consider the biological implementation and motivation of the human ear.

If you think of our ear as a reverse headphone, the eardrum (tympanic membrane) is like the driver diaphragm and behind it the cochlea is like the DAC, transducing the physical vibration received into bioelectric neurological signals. The cochlea itself is a spiral containing a series of delicate cochlear hair strands that pick up the sound running past them. These hairs have very fine spacing, and because they are aligned linearly along the length of the cochlea, the brain has physical access to excellent timing data.

The cochlea is a folded up length of sensors displaced slightly from one another, providing extremely high resolution timing data on an incident pulse.

Indeed, we can similarly find objective evidence of human hearing WAY above 20 kHz by focusing on timing differences rather than music or other 'informational' signals. A few years back I came across this nice study looking at identifiable time offsets between a pair of ribbon speakers:

http://boson.physics.sc.edu/~kunchu...isalignment-of-acoustic-signals---Kunchur.pdf

In the study a range of participants (including several in their mid/late 40s!) were sat in front of a pair of aligned ribbon speakers that played a steady tone. A series of tests were done that displaced one of the ribbon speakers by a slight offset in distance from the subject:

The results are absolutely stunning:

All subjects guessed correctly 10/10 times for displacements as small as ~3mm!

Using a chi-squared approach, this puts the threshold of judgement vs. chance at around 2.3 mm, corresponding to a time delay of less than 6.7 us.

If we examine the corresponding sampling rate that would be required to capture a signal with a 6.7 us period without aliasing:

Fsample = 2 × 1/(6.7 × 10^-6 s) ≈ 3.0 × 10^5 Hz, or about 300 kHz. That's nearly an order of magnitude more than the 44 kHz sampling rate used by the Redbook standard, and represents a max hearing 'frequency' of ~150 kHz, not 20 kHz as is commonly assumed!
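If you want to check the arithmetic yourself, here is a rough sketch of the displacement to delay to sampling rate chain. The speed of sound (343 m/s, roughly room temperature air) is my assumption rather than a figure taken from the paper, and the function names are just for illustration.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed value: dry air at about 20 C
REDBOOK_RATE_HZ = 44_100.0  # CD (Redbook) sampling rate


def delay_from_displacement(displacement_m: float) -> float:
    """Extra travel time (seconds) caused by moving one speaker back."""
    return displacement_m / SPEED_OF_SOUND_M_S


def sample_rate_for_period(period_s: float) -> float:
    """Sampling rate (Hz) that gives two samples per period of length
    `period_s`, i.e. the Nyquist rate if the delay is read as one period."""
    return 2.0 / period_s


delay_s = delay_from_displacement(2.3e-3)  # 2.3 mm threshold from the post
fs_hz = sample_rate_for_period(delay_s)

print(f"delay       : {delay_s * 1e6:.1f} us")
print(f"sample rate : {fs_hz / 1e3:.0f} kHz")
print(f"vs Redbook  : {fs_hz / REDBOOK_RATE_HZ:.1f}x")
```

Note that this treats the just-detectable delay as one full signal period, which is the same simplification used in the calculation above.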

It's particularly interesting for us here in IEM world, as this paper does an excellent job noting the inherent disadvantage of broad firing speakers:

The transient smearing one gets listening to speakers is far and away the bottleneck compared to human hearing, and we can't speed up the sound waves to compensate - this is an inherent physical limitation for speakers that keeps them below the fidelity possible for the ear. IEMs are the ideal solution to this challenge! They and headphones are probably the only form factor with a remote chance of relaying this timing info in real world systems/environments...

So given an exceptional, close, lab grade transducer and chain, we still have room to grow even past 192 kHz sampling in terms of transient audibility... this makes a lot of sense if we think in evolutionary terms rather than audiophile:

Our senses are designed to tell us about opportunity and threat in our environment. Spatial offset information allows our brain to extrapolate positional data about the source but also speed information too (displacement vs. time). It's not hard to understand that those able to better hear where that tiger is coming from would more often survive to reproduce... the compounded evolutionary effect is our ears are spatial specialists, capable of 'super human' data extraction in this space far beyond what we would expect from pleasurable signals like music.

As noted in the intro, if we examine the human brain structure, we find it's actually 2 brains in bunk beds. The lower 'lizard' brain is primordial and instinctive, and handles our basic regulatory and survival functions. It makes sense that all sensory data is first run by the lizard. If a tiger is about to pounce on you, taking the time to get the neocortex involved and in approval will mean you're cat lunch... It makes total sense then that the lizard brain would be far more effective at evaluating timing info than we are consciously aware of with our neocortex.

Conclusion

It's ironic, given the historical dismissal, that perhaps the best arguments for HD audio benefits come not from subjectivists, but rather from objective studies. This is a natural product of the fact that for musical info, most if not all of the observable benefit is subconscious. If we look to nature, we find a very tidy biological explanation grounded in evolutionary theory, and in particular in the ear's ability to perceive incredibly minute timing differences.

The end result? You're not crazy for paying extra for Qobuz, and you're definitely not crazy if you prefer IEMs and headphones to 2 channel and surround!