Many thanks for the elaboration. It just highlights se's point about being careful to distinguish evidence from proof, especially when both procedures and statistics can go haywire.

Nice discussion of the paper here -

http://www.sa-cd.net/showthread/58757/58908

Post by eesau, September 8, 2010 (9 of 111), that fits in pretty well with Steve's comments:

"

this is an interesting paper but the authors need to carry out further research before the results can be accepted as scientific fact.

There are some interesting results in the paper:

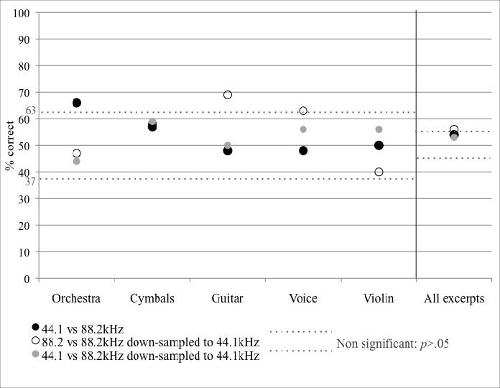

Using orchestral music material, 13 participants out of 16 were statistically able to detect

+ native 88.2kHz and native 44.1kHz from each other

However, they were not able to detect

- native 88.2kHz from the same signal down sampled to 44.1kHz

- nor native 44.1kHz from the 88.2kHz down sampled to 44.1kHz

There existed no statistically relevant detection using ”cymbals” or ”violin” material.

With ”guitar” and ”voice”, these participants were able to tell

+ native 88.2kHz from the same signal down sampled to 44.1kHz

but with this material, they were not able to tell

- native 88.2kHz and native 44.1kHz from each other

- nor native 44.1kHz from the 88.2kHz down sampled to 44.1kHz

3 participants out of 16 produced reverse results that (may) indicate that they detected a difference but could not really tell which was which …

These participants doing detection in reverse were able to non-detect

+ native 44.1kHz from the 88.2kHz down sampled to 44.1kHz using ”guitar” material (but nothing else statistically relevant)

and further with the ”violin” material they were able to non-detect both

+ native 88.2kHz and native 44.1kHz from each other and

+ native 44.1kHz from the 88.2kHz down sampled to 44.1kHz

So, don't you think that the results are somewhat contradictory … and this, again, shows that AES is not very discriminative when accepting papers to their conventions.

24-bit 88.2kHz and 44.1kHz sample rates were used possibly because 24/88.2kHz is considered to be ”high resolution audio” and sample rate conversion is very straightforward. They could have used 48kHz and 96kHz as well but would you have been so interested in the results?

ABX is a scientific method to compare results and AES and McGill University are trying to be scientific. For some reason, ABX does not seem to work for audiophile use ….

All participants in the tests had musical training and a professional or scientific relation to digital audio with an average age of 30 years. They were describing high definition audio as having a better spatial reproduction, high frequency richness, precision or fullness …

"

Other comments by me:

Both the McGill and Meridian papers claim to have used ABX tests, but these were not the ABX tests that are commonly discussed on audiophile forums.

"Participants were asked to perform a double blind ABX task. For each trial, the excerpt was presented with three versions, namely A, B and the reference X. A and B always differ. X is always either the same as A or the same as B. The participant’s task is to indicate whether X = A or X = B. To nullify order effects, the order of presentation across trials and blocks was randomized."

In fact the ABX tests that are commonly discussed on audiophile forums are run quite differently:

For each trial, listeners are allowed to freely select from 3 versions, namely A, B, and X, and to listen to them as many times as they wish, in whatever order they wish, to reach any conclusions they wish to reach about them. The listener's conclusion that X sounds most like A or B, or sounds least like A or B, is recorded and the test moves to the next trial.

Thus the ABX test that these experimenters used imposed more constraints on the listeners, and may have provided less reliable results than might otherwise have been possible.
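For anyone who wants to check what "statistically able to detect" means in an ABX test of either kind, here is a minimal sketch of the usual exact binomial scoring against pure guessing. It is my own illustration, not the statistics used in the McGill or Meridian papers; the function name `abx_p_value` and the 16-trial example are assumptions made up for the sketch. The two-sided option covers the "reverse" listeners in the quoted post, who score well below chance.

```python
from math import comb


def abx_p_value(correct: int, trials: int, two_sided: bool = False) -> float:
    """Exact binomial p-value for an ABX score, assuming pure guessing (p = 0.5).

    Hypothetical helper for illustration only; not taken from either paper.
    """
    if trials <= 0 or not 0 <= correct <= trials:
        raise ValueError("need trials > 0 and 0 <= correct <= trials")

    total = 2 ** trials  # number of equally likely guess sequences
    # Probability of scoring at least `correct` right by guessing.
    upper = sum(comb(trials, k) for k in range(correct, trials + 1)) / total
    if not two_sided:
        return upper

    # Probability of scoring at most `correct` right by guessing.
    lower = sum(comb(trials, k) for k in range(0, correct + 1)) / total
    # Two-sided: a score far below chance also counts as evidence of a difference.
    return min(1.0, 2 * min(upper, lower))
```

With these assumptions, `abx_p_value(12, 16)` is about 0.038, so 12 right out of 16 would pass the common 0.05 threshold on a one-sided test. A listener with only 4 right out of 16 gets a one-sided value near 0.99, but `abx_p_value(4, 16, two_sided=True)` is about 0.077, which is why consistently wrong answers are harder to dismiss than scores near 8 out of 16.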

The benchmark paper that these newer AES conference papers want to criticize is Meyer and Moran's JAES paper, http://www.aes.org/e-lib/browse.cfm?elib=14195, which did make the final cut. Unfortunately, it had an inherent flaw that was not the authors' fault and was not widely recognized until some years later. The problem was that, at the time they did their research, a very high proportion of the DVD-A and SACD discs on the market, including many that they used in their tests, were sourced from legacy analog masters or from digital masters of CD quality or thereabouts. Therefore the presumption that the SACD and DVD-A recordings were all high rez was probably not true.