71 dB


You must mean Georgia Brown?

The highest note that has supposedly ever been sung is G10 at 25.1 kHz. Most music won't go quite that high, though. For comparison, a typical soprano part rarely goes above "soprano C", which is C6 at a mere 1.05 kHz or so! Falsetto and coloratura singers can go higher than this, though.
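For anyone who wants to check those figures: in 12-tone equal temperament each semitone multiplies the frequency by 2^(1/12). The Python sketch below assumes A4 = 440 Hz and the usual octave numbering where C4 is middle C (MIDI note 60); the function name `note_to_freq` is just made up for illustration.

```python
# Semitone offsets of the natural note letters within an octave, relative to C.
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}

def note_to_freq(name: str, a4: float = 440.0) -> float:
    """Return the 12-TET frequency in Hz of a note such as 'C6', 'G10' or 'F#4'."""
    letter = name[0].upper()
    rest = name[1:]
    accidental = 0
    if rest.startswith("#"):      # sharp raises by one semitone
        accidental, rest = 1, rest[1:]
    elif rest.startswith("b"):    # flat lowers by one semitone
        accidental, rest = -1, rest[1:]
    octave = int(rest)
    # MIDI-style note number: C-1 = 0, so A4 = 69.
    midi = 12 * (octave + 1) + NOTE_OFFSETS[letter] + accidental
    # Each semitone away from A4 scales the frequency by 2**(1/12).
    return a4 * 2 ** ((midi - 69) / 12)

for note in ("A4", "C6", "G10"):
    print(f"{note}: {note_to_freq(note):.1f} Hz")
# A4: 440.0 Hz
# C6: 1046.5 Hz
# G10: 25087.7 Hz
```

So the quoted numbers are consistent with standard tuning: G10 really does land at about 25.1 kHz, and soprano C at about 1.05 kHz.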

Above a certain frequency in the treble range, most of the information will generally be overtones and timbre rather than actual "notes", I believe.
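The overtone point can be made concrete with an idealised harmonic series, where the n-th harmonic sits at n × f0. This is only a sketch: it assumes a perfectly harmonic voice (real voices are only approximately harmonic) and uses 20 kHz as a nominal upper limit of hearing; `harmonics` is a hypothetical helper name.

```python
def harmonics(f0: float, limit_hz: float = 20_000.0) -> list[float]:
    """Frequencies of the ideal harmonic series n * f0 at or below limit_hz."""
    count = int(limit_hz // f0)  # how many whole multiples of f0 fit under the limit
    return [n * f0 for n in range(1, count + 1)]

# Soprano C (C6) is roughly 1046.5 Hz in standard tuning.
series = harmonics(1046.5)
print(f"{len(series)} harmonics up to {series[-1]:.1f} Hz")
# 19 harmonics up to 19883.5 Hz
```

Even a note around 1 kHz can, in principle, put energy across the whole audible treble through its overtones, which is why the top octaves carry timbre rather than melody.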

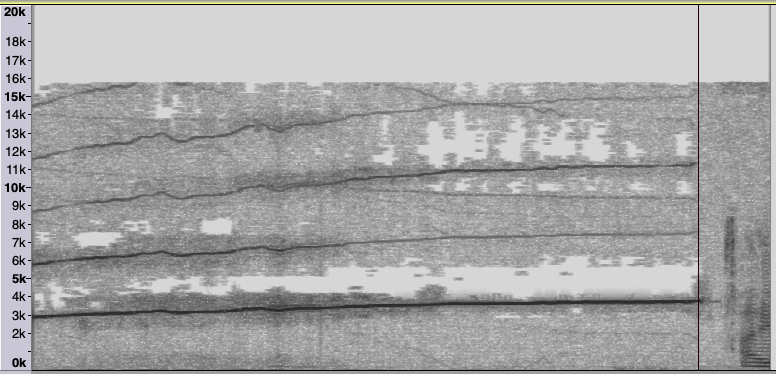

I recorded her singing at the top of her range and checked it in Audacity. Her singing goes up to about 3.8 kHz, which is impressive (not the kind of "brown note" we usually talk about), but far from this ridiculous G10 claim. Maybe they mean that significant harmonic overtones reach 25.1 kHz? I used a linear frequency scale because it works nicely here.
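Going the other way, a measured frequency can be mapped to the nearest equal-tempered note. The sketch below assumes A4 = 440 Hz, sharps-only note names, and that the 3.8 kHz reading is a fundamental rather than an overtone; `freq_to_note` is a hypothetical helper, not something Audacity provides.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def freq_to_note(freq_hz: float, a4: float = 440.0) -> tuple[str, float]:
    """Return the nearest 12-TET note name and the deviation from it in cents."""
    semitones = 12 * math.log2(freq_hz / a4)   # distance from A4 in semitones
    midi = round(semitones) + 69               # nearest MIDI note number
    cents = 100 * (semitones - (midi - 69))    # leftover deviation, in cents
    name = f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"
    return name, cents

print(freq_to_note(3800.0)[0])   # A#7
print(freq_to_note(25100.0)[0])  # G10
```

On these assumptions 3.8 kHz comes out as A#7 (about a third of a semitone sharp), which is 33 semitones, or nearly three octaves, below G10.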