Edit:

I had totally misunderstood upsampling. What I had read about it was misleading: it described upsampling as some kind of advanced interpolation, rather than just filtering, i.e. band-limiting made possible by the higher sampling rate (a digital filter, plus an analogue HF low-pass filter to remove aliasing etc. from the upsampled waveform).

Since then I have worked out "mathematically" (as in, without using filter design programs) a rudimentary closed-form digital FIR filter for a DAC.
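For anyone curious what a closed-form FIR can look like, here is a minimal sketch. It is not my actual filter: it assumes a windowed-sinc low-pass with a Hamming window, and the names `windowed_sinc_lowpass` and `upsample` are made up for illustration. The taps come straight from the sinc formula, then the upsampler zero-stuffs the input and runs it through the filter.

```python
import math

def windowed_sinc_lowpass(num_taps, cutoff):
    """Closed-form low-pass taps. cutoff is a fraction of the sample
    rate (0 < cutoff < 0.5); num_taps should be odd."""
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        x = n - mid
        # Ideal (infinite) low-pass impulse response: a sinc.
        if x == 0:
            ideal = 2 * cutoff
        else:
            ideal = math.sin(2 * math.pi * cutoff * x) / (math.pi * x)
        # Hamming window (an assumed choice) truncates the sinc smoothly.
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    gain = sum(taps)
    return [t / gain for t in taps]  # normalise to unity gain at DC

def upsample(samples, factor, taps):
    """Zero-stuff by `factor`, then low-pass filter by direct convolution."""
    stuffed = []
    for s in samples:
        stuffed.append(s * factor)  # scale to make up for the inserted zeros
        stuffed.extend([0.0] * (factor - 1))
    out = []
    for i in range(len(stuffed)):
        acc = 0.0
        for k, t in enumerate(taps):
            j = i - k
            if 0 <= j < len(stuffed):
                acc += t * stuffed[j]
        out.append(acc)
    return out
```

For 4x upsampling the cutoff would sit at or below 0.125 of the new rate (half the old rate), e.g. `upsample(samples, 4, windowed_sinc_lowpass(63, 0.125))`. The filter only removes the images created by zero-stuffing; it does not add information that was not in the source samples.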

/Edit

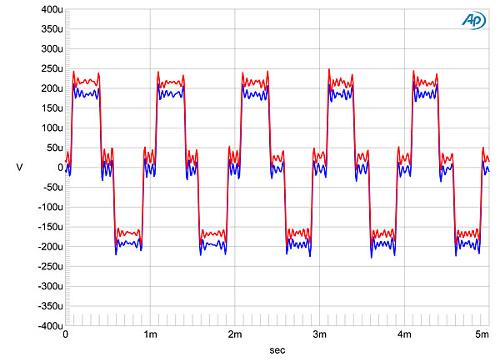

Looking at -90 dB sine wave tests, 16 bit is, well... 16 bit (stepped at -90 dB), versus 24 bit, which is smooth. Yet this still happens with DACs which upsample the data to a higher number of bits?

16 bit

24 bit

The DAC the images are from upsamples source data to 32 bit (1.5 MHz).
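The stepping can be checked with a bit of arithmetic. Below is a rough sketch, assuming an undithered 1 kHz test tone at 44.1 kHz and plain rounding to the nearest code (the function name `quantized_sine_levels` is made up for illustration). At 16 bit a -90 dB sine peaks at roughly 1.04 LSB, so it can only ever hit three codes, while at 24 bit the same tone spans a few hundred codes.

```python
import math

def quantized_sine_levels(bits, level_db=-90.0, freq_hz=1000.0,
                          rate_hz=44100.0, n=4410):
    """Quantise a sine at level_db (relative to full scale) without dither
    and return its peak amplitude in LSBs plus the distinct codes used."""
    full_scale = 2 ** (bits - 1) - 1
    amplitude = full_scale * 10 ** (level_db / 20.0)
    samples = [round(amplitude * math.sin(2 * math.pi * freq_hz * i / rate_hz))
               for i in range(n)]
    return amplitude, sorted(set(samples))

amp16, levels16 = quantized_sine_levels(16)
amp24, levels24 = quantized_sine_levels(24)

# Padding 16-bit samples out to 24 bit (shift left by 8 bits) changes the
# word length but not the number of distinct levels in the data.
padded = sorted({v * 256 for v in levels16})

print("16 bit: peak %.2f LSB, %d distinct codes" % (amp16, len(levels16)))
print("24 bit: peak %.2f LSB, %d distinct codes" % (amp24, len(levels24)))
print("16 bit padded to 24 bit: %d distinct codes" % len(padded))
```

So a longer output word on its own cannot smooth the steps: they are already baked into the 16-bit source data before the DAC ever sees it.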
