Rob Watts

Member of the Trade: Chord Electronics


My advice over many years has been not to use up-samplers, but always to feed my DACs with bit perfect data, as the WTA algorithm does a much better job than conventional algorithms of recovering transient timing accurately, and hence gives better sound quality and musicality (musicality being defined as being able to get emotional with the music). In the past, that advice was based on two factors - a much longer tap length for the FIR filter, and a better algorithm (the WTA). Today of course there are solutions that claim long tap lengths too, so the recommendation not to use up-samplers has lost one of those two benefits.

Moreover, as the tap length increases, the SQ (sound quality) should converge - with an infinite tap length, rectangular sinc (the window set to 1 across the whole tap length), a Kaiser filter and the WTA would all sound identical to pure sinc. This assumes that the filter is designed appropriately and scaled properly - I once saw a 1M tap length filter where the designer had just taken a normal filter and increased the taps, so that 99% of the coefficients were near zero. He wondered why it didn't sound any different... So a key question is: are we getting close to convergence at 1M taps?
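To illustrate why that badly scaled 1M tap filter could not sound different, here is a minimal Python sketch. It assumes the extra coefficients were effectively zero padding; the Hann window, the tap counts and all function names are illustrative choices, not details of the filter in the anecdote.

```python
import cmath
import math

def sinc(x):
    """Normalised sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0.0 else math.sin(math.pi * x) / (math.pi * x)

def hann_windowed_sinc(num_taps, cutoff):
    """Low-pass FIR coefficients: ideal sinc shaped by a Hann window.
    `cutoff` is in cycles per sample."""
    centre = (num_taps - 1) / 2.0
    coeffs = []
    for n in range(num_taps):
        window = 0.5 - 0.5 * math.cos(2.0 * math.pi * n / (num_taps - 1))
        coeffs.append(2.0 * cutoff * sinc(2.0 * cutoff * (n - centre)) * window)
    return coeffs

def magnitude_response(coeffs, freq):
    """|H(f)| at a normalised frequency, by direct summation."""
    acc = 0j
    for n, c in enumerate(coeffs):
        acc += c * cmath.exp(-2j * math.pi * freq * n)
    return abs(acc)

short = hann_windowed_sinc(num_taps=51, cutoff=0.125)
pad = [0.0] * 2475
padded = pad + short + pad  # 5001 taps, about 99% of them zero

for freq in (0.0, 0.05, 0.125, 0.3):
    print(freq, magnitude_response(short, freq), magnitude_response(padded, freq))
```

The two columns agree at every frequency: the zeros add delay but no filtering, so the longer tap count buys nothing unless the coefficients are redesigned for that length.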

So I thought I would do two things - explain in more detail what the WTA algorithm is all about, and design a couple of filters that use conventional algorithms but have the same 1M tap length, so I can listen to WTA against other algorithms, and measure their performance too. Measurements do provide one indication as to how bad a filter will sound (but not necessarily how good - more of that later).

But I need to explain first how an FIR interpolation filter is constructed. FIR means finite impulse response, and the other type of filter is the IIR or infinite impulse response. IIR filters are like analogue filters, as they can't see into the future; FIR filters delay the incoming data in a sample buffer, and hence can "see" into the future. This means that an FIR filter can be made linear phase with a symmetric impulse response. An FIR filter that is infinitely long and has a sinc (sin(x)/x) impulse response will perfectly reconstruct a bandwidth limited signal - so if you are interested in transparency, then a sinc function FIR filter is the only way to go. But a pure sinc function needs an infinite number of taps (one tap is one sample multiplied by a coefficient and fed into an accumulator to sum the result) and so we need to modify the sinc function to make it shorter so it can be processed. The most popular way of doing this modification is via something known as a windowing function. The WTA algorithm is a unique windowing function designed to maximise the accuracy of recovering transient timing from the original analogue signal.
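As a rough illustration of the construction described above, the following Python sketch builds truncated sinc coefficients and computes one output value tap by tap. The tap count, the oversampling ratio and the function names are assumptions for the example; they are not the M scaler's values or its implementation.

```python
import math

def sinc(x):
    """Normalised sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    if x == 0.0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)

def sinc_coefficients(num_taps, oversample):
    """Truncated ideal-sinc coefficients for an interpolation filter.

    The coefficients are centred on the middle tap, so the impulse
    response is symmetric, which is what gives linear phase.
    """
    centre = (num_taps - 1) / 2.0
    return [sinc((n - centre) / oversample) for n in range(num_taps)]

def fir_output(samples, coeffs):
    """One output value: each tap multiplies a buffered sample by its
    coefficient and adds the product to an accumulator."""
    acc = 0.0
    for sample, coeff in zip(samples, coeffs):
        acc += sample * coeff
    return acc

coeffs = sinc_coefficients(num_taps=33, oversample=4)
print(coeffs[16])                              # centre tap
print(fir_output([1.0, 2.0, 3.0], [0.5] * 3))  # three-tap toy example
```

Because half of the buffered samples lie after the centre tap, the output for a given instant depends on samples that arrive later, which is the sense in which an FIR filter "sees" into the future at the cost of delay.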

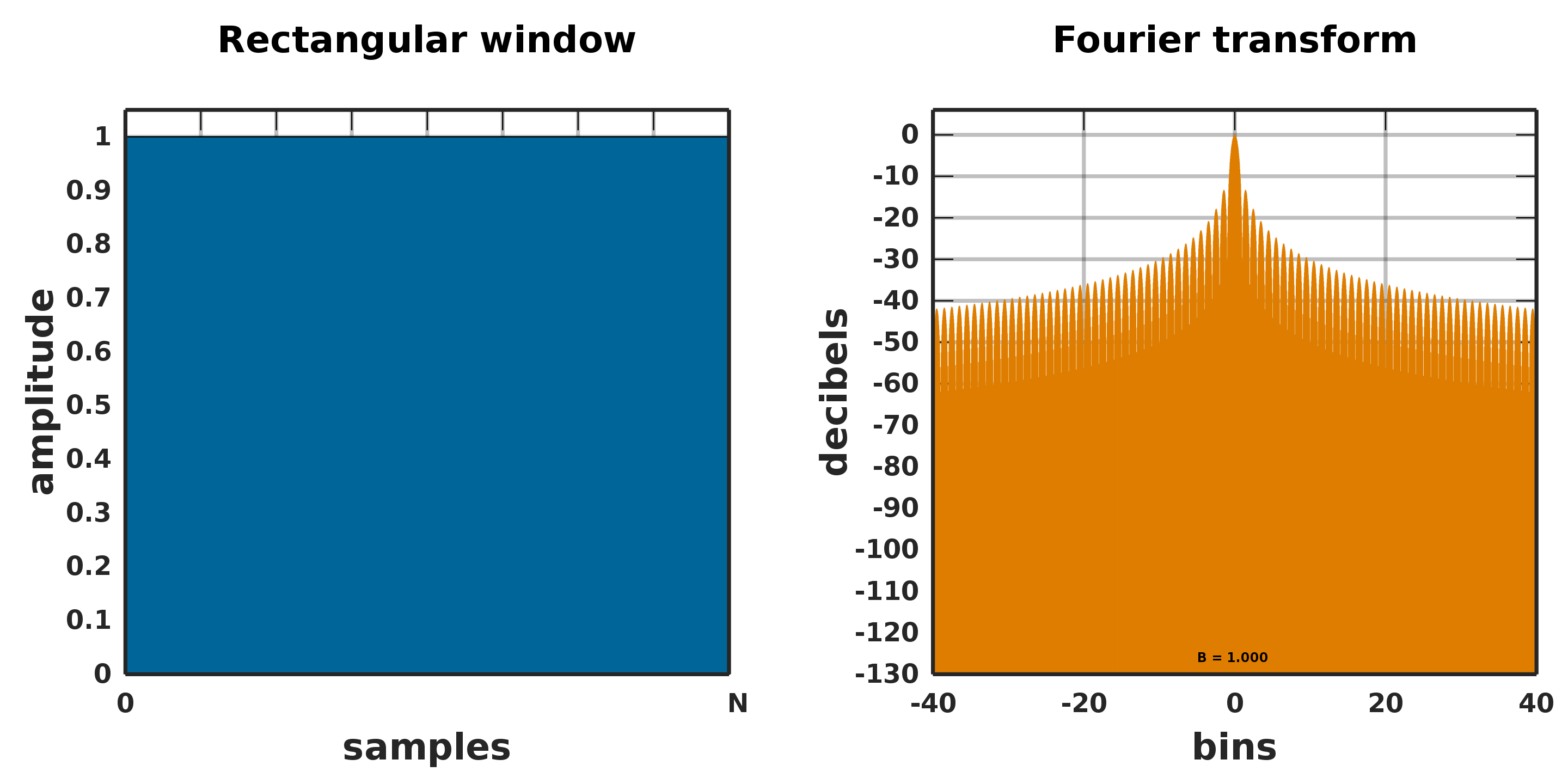

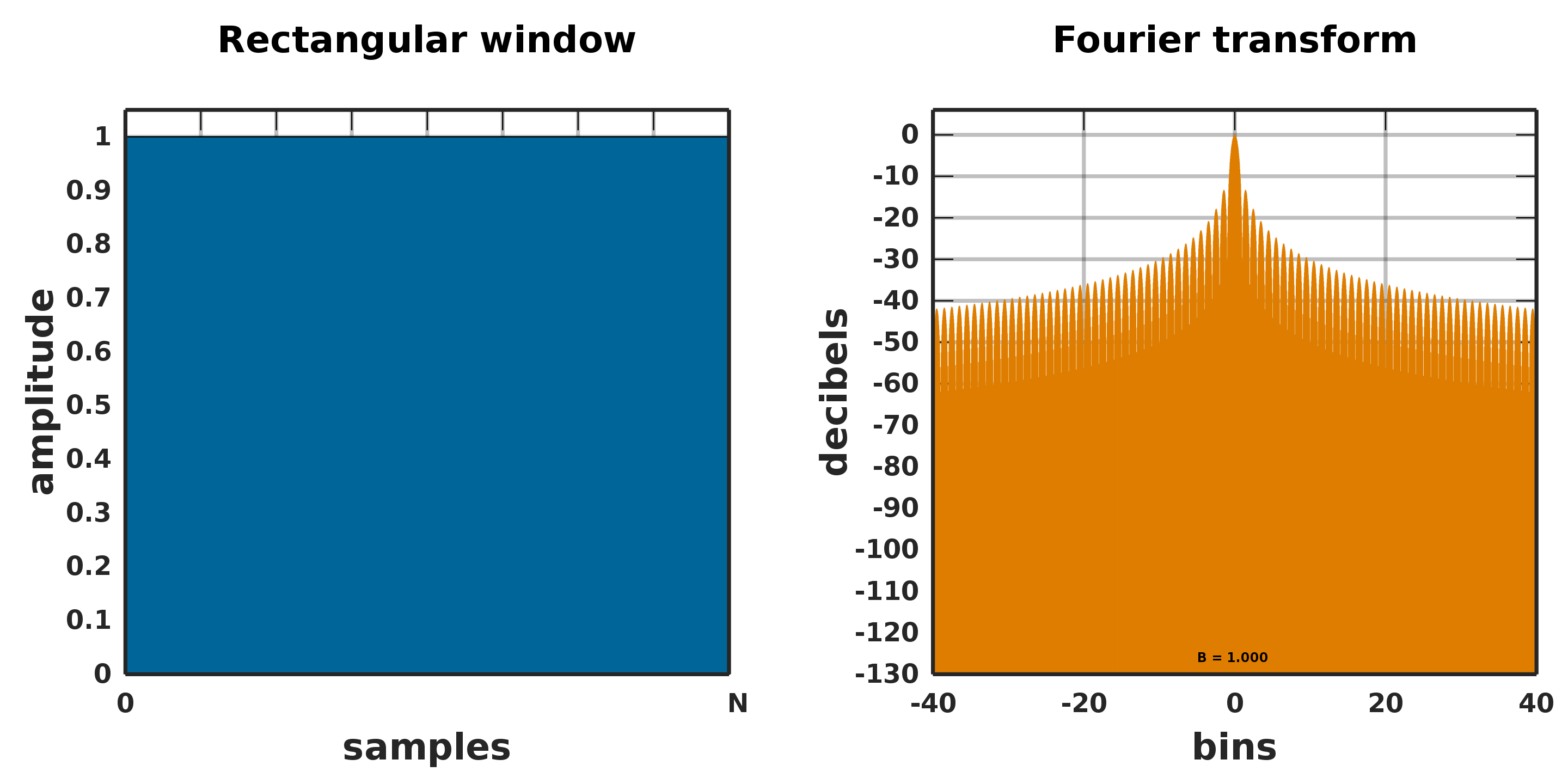

There are many types of windowing functions, and you can see an excellent discussion on Wikipedia here. The simplest windowing function is the rectangular window; you simply multiply the sinc values by 1, and where you want to stop the filter you set the window to 0. This graphic from Wikipedia shows it here:

The benefit of the rectangular window is that all of the filter values are sinc - but the downside is we have a rapid transition from 0 to a sinc coefficient - and this abrupt change (or discontinuity) causes massive problems in the final filter. No competent audio filter designer would ever use a rectangular window because of this.

A much better windowing function, and in my experience the best sounding of the conventional windows, is the Kaiser window. This has a parameter that you can tune, to trade stop band attenuation against the transition bandwidth. Transition bandwidth (how fast the filter rolls off) is important subjectively - but so too is stop band attenuation (how well sampling images are suppressed), and there exists a subjectively optimum balance between these two factors.

From the Fourier transform of the window we can see that at bin 40 we have much better attenuation than the rectangular window. Also, there is no discontinuity - it is a visibly smooth function. But the number of coefficient values that are close to sinc is now very small. This will mean that the filter will not perfectly reconstruct transients, as only sinc will do this.
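The two observations above (better attenuation, but few coefficients left close to sinc) can be checked numerically with a small Python sketch. The window length of 101 taps, the Kaiser parameter of 10, the 0.99 threshold and the frequency band are illustrative assumptions, not values from the Wikipedia plots or from my filters.

```python
import cmath
import math

def bessel_i0(x):
    """Modified Bessel function of the first kind, order zero (power series)."""
    total = term = 1.0
    k = 1
    while term > 1e-16 * total:
        term *= (x / (2.0 * k)) ** 2
        total += term
        k += 1
    return total

def kaiser_window(num_taps, beta):
    """Kaiser window; beta trades main-lobe width against side-lobe level."""
    half = (num_taps - 1) / 2.0
    i0_beta = bessel_i0(beta)
    window = []
    for n in range(num_taps):
        r = (n - half) / half  # runs from -1 to +1 across the window
        window.append(bessel_i0(beta * math.sqrt(max(0.0, 1.0 - r * r))) / i0_beta)
    return window

def leakage_db(window, freq):
    """Window spectrum magnitude at `freq` (cycles/sample) relative to DC, in dB."""
    acc = sum(w * cmath.exp(-2j * math.pi * freq * n) for n, w in enumerate(window))
    return 20.0 * math.log10(max(abs(acc), 1e-300) / sum(window))

def peak_leakage_db(window, f_lo, f_hi, points=41):
    """Worst-case leakage over a band, to avoid landing on a spectral null."""
    step = (f_hi - f_lo) / (points - 1)
    return max(leakage_db(window, f_lo + i * step) for i in range(points))

def near_unity_fraction(window, threshold=0.99):
    """Fraction of taps whose sinc value the window leaves almost untouched."""
    return sum(1 for w in window if w >= threshold) / len(window)

NUM_TAPS, BETA = 101, 10.0  # illustrative values only
rectangular = [1.0] * NUM_TAPS
kaiser = kaiser_window(NUM_TAPS, BETA)

rect_peak = peak_leakage_db(rectangular, 0.08, 0.12)
kaiser_peak = peak_leakage_db(kaiser, 0.08, 0.12)
rect_fraction = near_unity_fraction(rectangular)
kaiser_fraction = near_unity_fraction(kaiser)
print("peak leakage (dB): rectangular", rect_peak, "Kaiser", kaiser_peak)
print("fraction of taps near sinc: rectangular", rect_fraction, "Kaiser", kaiser_fraction)
```

With these settings the Kaiser window suppresses out-of-band leakage far more than the rectangular window, but only a few percent of its taps keep the sinc values essentially unchanged.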

So I designed an M scaler filter and used a rectangular window and Kaiser window to create the coefficients - and then a filter was created in the Xilinx tools. Using my M scaler test rig I could program three different filters - rectangular sinc, Kaiser or WTA, then measure and listen to the alternatives.

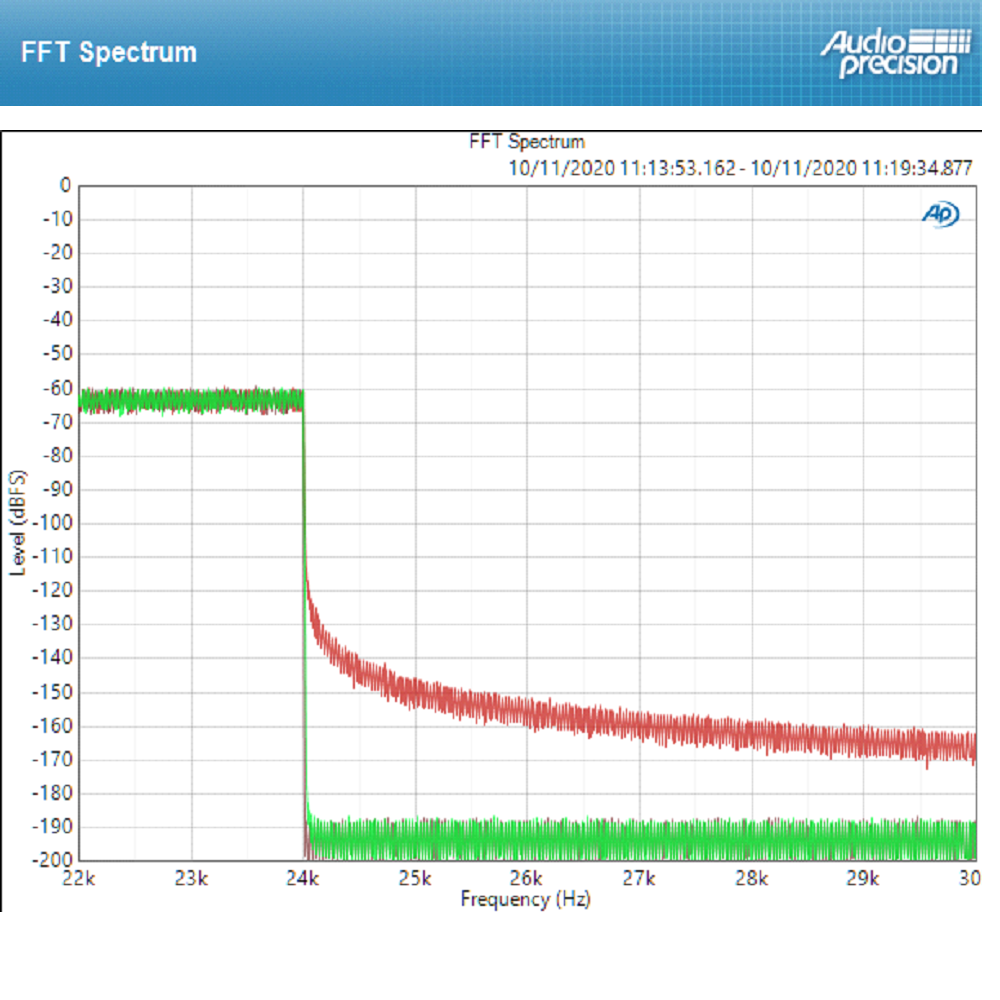

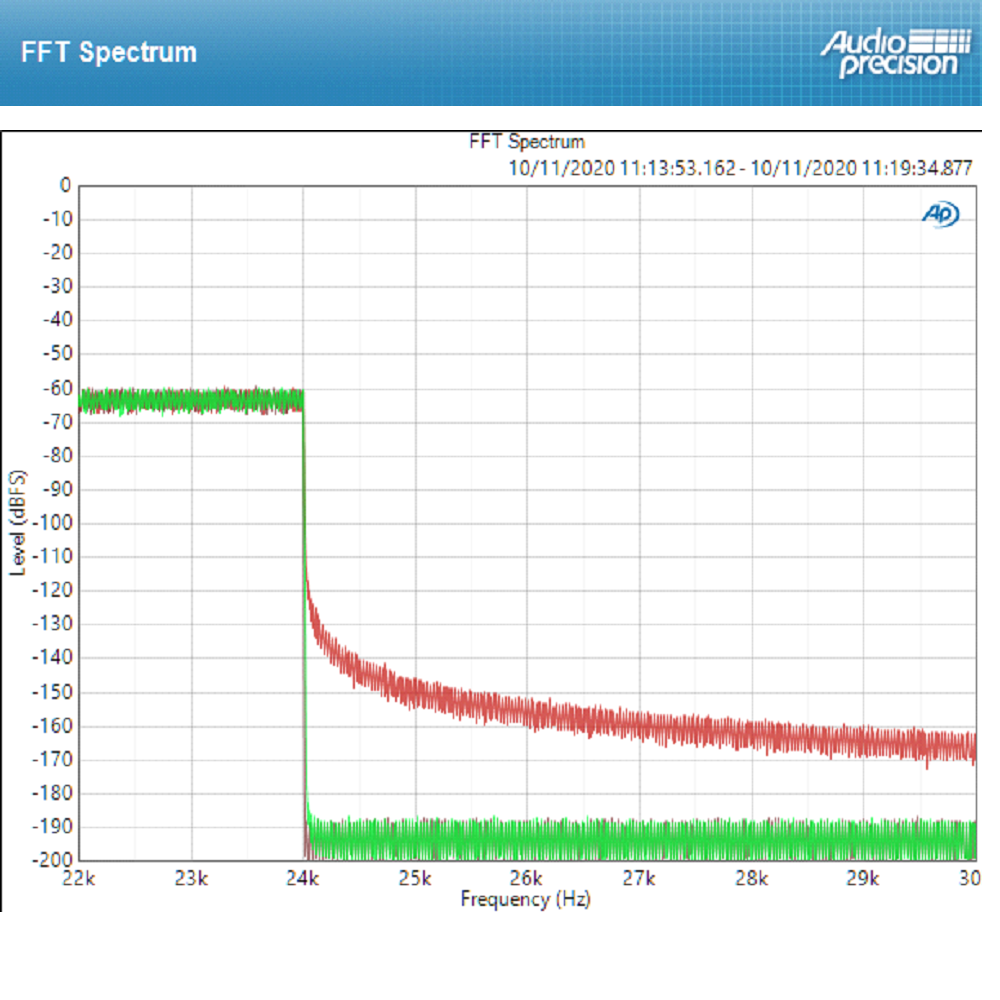

Firstly I needed to check the measurements against the filter performance to check the implementation - and they agreed. The AP measurements of the three are shown, using random noise at 0dBFS as the stimulus:

The red is 1M rectangular sinc, green plot is WTA, and brown the Kaiser.

The rectangular sinc was quite a surprise, as the transition band (I use the frequency from corner to -100dB) is appallingly bad - some 6kHz - even though all the coefficients are sinc. The WTA is some 12Hz - that's 500 times narrower. So to get the rectangular sinc filter to have the same transition performance as the 1M tap WTA, we would have to use 500 million taps, as doubling the tap length halves the transition band. Transition band is very important subjectively, so the outlook for this sounding good is not promising. Remember also that the objective is to have a sinc function filter - this needs to be the same as sinc (or as close as possible) in the time domain, and the same as sinc (or as close as possible) in the frequency domain - that is, a brick wall filter. Both aspects are vitally important. The rectangular sinc clearly fails as a brick wall filter, and the poor suppression of sampling images will have a big subjective consequence.
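The arithmetic behind the 500 million figure can be written out as a short Python sketch. It uses the measured figures quoted above and assumes, as stated, that the transition band is inversely proportional to tap length; the function name is hypothetical.

```python
def taps_for_same_transition(current_taps, current_hz, target_hz):
    """Taps needed to reach `target_hz`, assuming the transition band is
    inversely proportional to tap length (doubling the taps halves it)."""
    return current_taps * current_hz / target_hz

RECT_TRANSITION_HZ = 6000.0  # 1M tap rectangular sinc, as measured
WTA_TRANSITION_HZ = 12.0     # 1M tap WTA, as measured
TAPS = 1_000_000

ratio = RECT_TRANSITION_HZ / WTA_TRANSITION_HZ
taps_needed = taps_for_same_transition(TAPS, RECT_TRANSITION_HZ, WTA_TRANSITION_HZ)
print(ratio)        # 500.0
print(taps_needed)  # 500000000.0
```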

The Kaiser measures better than the WTA - its transition band is only 3Hz. But because almost all the coefficients are not sinc, the reconstruction of the timing of transients will not be as accurate as sinc. This will affect the sound quality. Kaiser has almost identical frequency domain performance to ideal sinc, but has a time domain performance that looks nothing like ideal sinc. The WTA filter uniquely is very close to ideal sinc in both the frequency domain and the time domain.

Before talking about my listening tests, I ought to give a quick re-cap as to why transients and the timing of transients are important. What we hear is not the output from the ears, but the brain's processing of that ear data; audio is a reconstructed processed illusion created by the brain. We do not understand how the brain separates individual instruments out into discrete entities and creates extremely accurate placement data on those entities. What we do know is that transients are part of the perceptual cues that the brain uses to construct the audio illusion - transients are used for the perception of bass pitch, locating instruments in space, and timbre perception. They also get used to separate individual instruments into discrete entities - I am sure correlated patterns from transients play a part in this. So if the transient timing is being constantly affected (too early or too late, constantly changing) then our perception of space, timbre, instrument separation, and bass pitch will be affected. In the past, when coming up with the WTA filter, I optimised it by using specific tracks to evaluate all these factors, and these tracks were used for the listening tests.

The first test was 1M rectangular sinc against 1M WTA. My notes are not pleasant to read:

Depth: flat as a pancake, soundstage wide and out of focus

Timbre variation: hard and metallic, sibilance on vocals. Poor variation

Instrument separation: poor; sounds distorted, loudest instrument dominates - listening fatigue. Moby un-listenable.

Double bass: soft and diffuse, not easy to follow pitch

I didn't bother listening to it against Dave's WTA filter - it was that bad. The measurements indicate from transition band POV that it is 500 times worse than WTA - my listening impressions would agree with that. It's not fit for purpose, both from a measurement and SQ point of view.

As to the 1M Kaiser against 1M WTA - this was clearly better than 1M rectangular sinc but:

Depth: flatter and instruments out of focus in space

Timbre variation: suppressed, voice slight edge

Instrument separation: little congested, not good focus, loud instrument dominates.

Double bass: bit soft, not very well defined

Tonal balance: slightly bright, some edge to vocals

I then used pass through on the test M scaler to listen to the Kaiser 1M against Dave's 164k WTA and Dave clearly won out.

So with 1M sinc we can put a number on it based on the measured transition band performance, and subjectively the number rings true - it really does sound 500 times worse. As to Kaiser, it's harder to put a number on it, as that means comparing how many coefficients are almost the same as sinc against the WTA. My estimate is around 50 times fewer than the WTA, and that seems about right - the 1M Kaiser sounds similar to what I would expect a 20k tap WTA filter to sound like.

So a word now about the WTA filter and how that was created. WTA is a compromise between ensuring that as many of the coefficients as possible are sinc (so we tend towards perfect transient timing reconstruction), and on the other hand avoiding a rapid change in coefficients going from zero (where you have run out of taps on the filter) up to full sinc. The WTA windowing function is unique and is aimed at optimising this compromise. After many years of experimentation and trials (starting in 1999) I ended up with an equation that calculates the coefficients. This has 5 variables - the first being tap length, which of course is ultimately hardware determined. Of the other four variables, two are independent - listening tests confirm which value sounds best. One of these variables has to be set to 10 parts per million accuracy - that means a 10 PPM shift is clearly audible. Of the other two variables, one controls the shape of the function at the beginning (starting at zero) and the other controls the end (hitting 1 for the window function). These variables are interdependent, so you have to start with a rough setting for both, determine which value of one sounds best, then move to the next variable, then repeat until you can no longer hear a change in performance. To give you an idea of the accuracy needed for this - a 1% shift in the curve over 10% of the function is audible. The process of optimising the WTA equation took many weeks with probably thousands of listening tests (sometimes when the difference is really subtle you have to listen as many as 10 times on all 5 tracks to get a consensus as to which is best). But this effort pays off - as a subtle change in values can give the subjective equivalent of doubling the tap length. And increasing tap length ad infinitum is not a practicable solution - more processing costs more in FPGAs and power, and has longer latency (delay). Tweaking a coefficient just costs me my time.
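The one-variable-at-a-time procedure for interdependent variables resembles a coordinate search, which can be sketched in Python. This is only an analogy under loud assumptions: the `score` function is a made-up numeric stand-in for a listening verdict, the two variables and their optimum are invented, and nothing here is the WTA equation or its actual tuning process.

```python
def coordinate_search(score, start, steps, shrink=0.5, min_step=1e-3):
    """Tune one variable at a time, repeating until no step helps.

    `score` returns a higher number for a better-sounding setting; when
    no single-variable change improves it, the step sizes are reduced.
    """
    values = list(start)
    steps = list(steps)
    best = score(values)
    while max(steps) > min_step:
        improved = False
        for i in range(len(values)):
            for delta in (steps[i], -steps[i]):
                trial = list(values)
                trial[i] += delta
                trial_score = score(trial)
                if trial_score > best:
                    values, best, improved = trial, trial_score, True
                    break
        if not improved:
            steps = [step * shrink for step in steps]
    return values, best

def score(values):
    """Hypothetical preference with an interaction term, so that the best
    value of one variable depends on the other (optimum at 0.3, 0.7)."""
    x = values[0] - 0.3
    y = values[1] - 0.7
    return -(x * x + y * y + 0.5 * x * y)

tuned, tuned_score = coordinate_search(score, start=[0.0, 0.0], steps=[0.25, 0.25])
print(tuned, tuned_score)
```

The interaction term is why a single pass is not enough: after one variable moves, the best setting for the other shifts, so the loop has to revisit both until nothing changes.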

So with 1M taps are we getting close to subjective convergence? Absolutely not. Indeed, I ended up being more convinced that the algorithm is vastly more important than tap length. Having said that, I am certain that more musical benefits are to be had by further increasing the tap length.

Happy listening, Rob

PS - the excellent news of a Pfizer vaccine with 90% effectiveness means that CanJams should be starting again sometime next year. I have sorely missed these shows, so I am looking forward to seeing and talking to enthusiasts again.
