Hugo M Scaler by Chord Electronics - The Official Thread

- Thread starter ChordElectronics

- Start date

x RELIC x

Headphoneus Supremus

If streaming Redbook 44.1 kHz, do we leave it at 44.1 and not try to upsample to, say, 96 kHz before passing it on to the M Scaler? I don't understand this point, as the post said 96 was better than 44.1 but that upsampling was bad.

Native recording sampling at 96 is better than native recording sampling at 44.1.

Up-sampling to 96 from 44.1 is bad.

Two USB inputs possible? I usually have my PC hooked up via one (and the only) USB input. A second USB input would be handy for my mobile/tablet...

mlxx

100+ Head-Fier

- Joined

- Apr 7, 2012

- Posts

- 484

- Likes

- 153

For non Chord DACs, if the HMS is fed from the optical input with 192kHz music and output to optical, will the HMS actually do anything in this case, will there be any benefit?

Arpiben

100+ Head-Fier

- Joined

- Apr 25, 2016

- Posts

- 489

- Likes

- 192

@Rob Watts

How is serial data transfer between MScaler and Chord DACs managed in terms of synchronisation: asynchronously or synchronously with MScaler Master/Slave?

Rob Watts

Member of the Trade: Chord Electronics

- Joined

- Apr 1, 2014

- Posts

- 3,141

- Likes

- 12,368

Cool, so my question for the interweb: for folks that have servers upsampling from 44 to 768 PCM or DSD64 to 512, how does the M Scaler fit in between said server and TT? What benefits does the scaler provide (I understand more taps, for what that does)? Is this for folks who don't own servers, or is this a universal benefit? The sound from my present rig is quite awesome, so I'm just seeing where the in is for me, or whether there is one.

You need to send the M scaler bit perfect data. This is because conventional up-samplers - of whatever type - do not do an accurate enough job of interpolation - for two reasons - limited processing power, and not using the WTA algorithm. You simply can't replace 528 dsp cores with a PC. Also, if you did have 528 dsp cores, and used a regular conventional algorithm then the extra tap length would be of little benefit - you need an algorithm, like the WTA, that is close to a sinc function in order to accurately recover the original analogue signal, and conventional algorithms are very different to the ideal sinc function.

For non Chord DACs, if the HMS is fed from the optical input with 192kHz music and output to optical, will the HMS actually do anything in this case, will there be any benefit?

No benefit - except perhaps the galvanic isolation.

@Rob Watts

How is serial data transfer between MScaler and Chord DACs managed in terms of synchronisation: asynchronously or synchronously with MScaler Master/Slave?

It's identical to regular SPDIF, so it's asynchronous from the DAC POV. This doesn't matter, as the DPLL is as transparent as the DAC controlling the data source.

Rob Watts

Member of the Trade: Chord Electronics

- Joined

- Apr 1, 2014

- Posts

- 3,141

- Likes

- 12,368

Here is my presentation from CanJam:

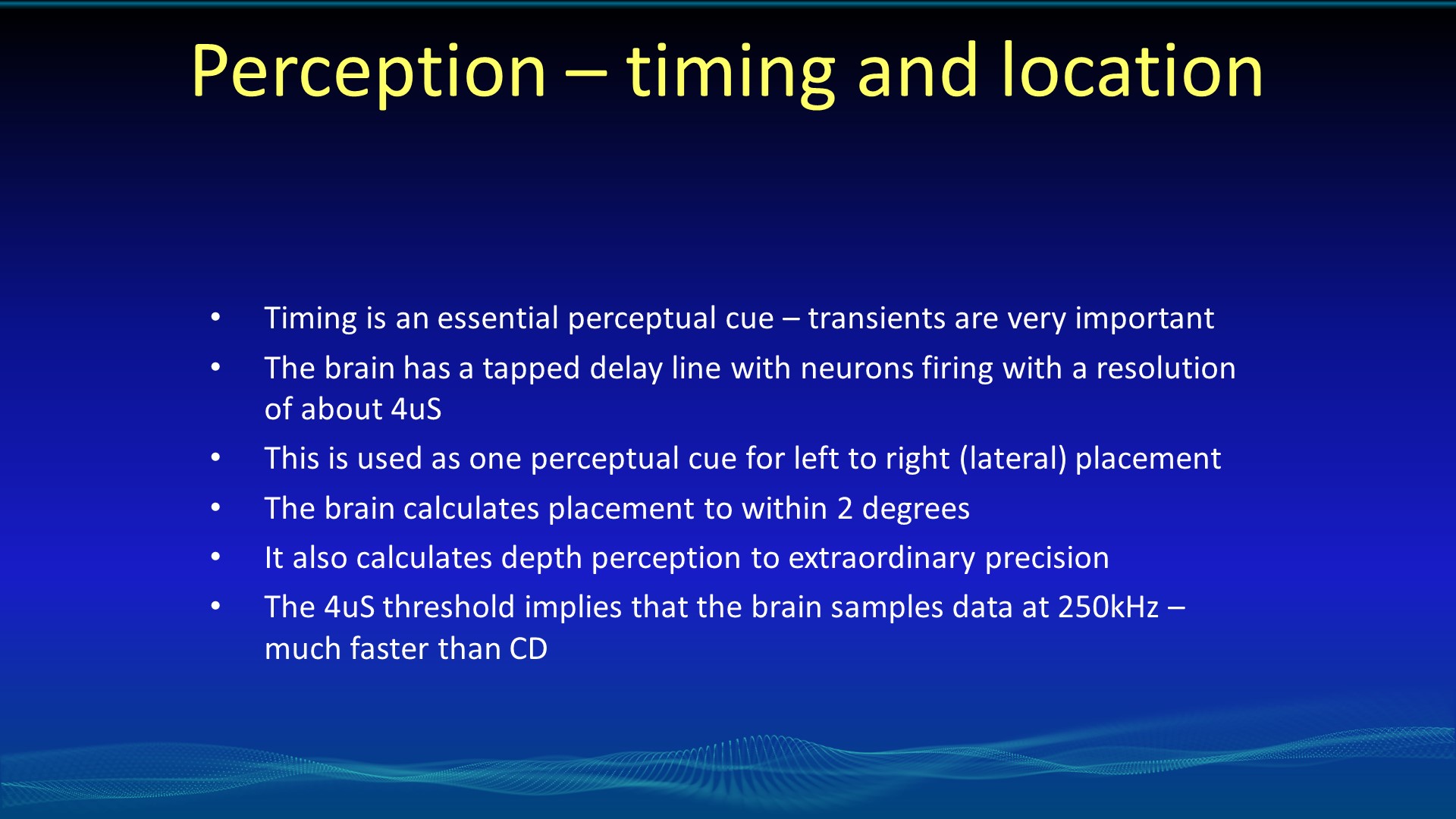

So here I am talking about how important the timing of transients is to perception - the implication being that if transient timing has errors (transients being too early or too late), then this will degrade lateral imagery, or left to right instrument placement.

This illustrates that the brain relies on transients of bass notes in order to infer the pitch. Again, the implication is if there are timing errors then the brain will be confused and won't be able to perceive bass notes.

Again psychoacoustic studies have shown that transients are important information for the perception of timbre. Again, the implication is if transients have timing errors, then timbre perception will get degraded.

So I have talked about the fact that transients are important, and now it's about the specific timing problem that DACs introduce.

So this is a simple illustration of the problem of reproducing transients, and why conventional interpolation filters create very large timing errors. We can see here a sine wave tone burst, and the digital data actually loses the initial burst, as it samples it at the start of the transient.

So the top waveform will be the output from the "audiophile" filters, which actually create timing errors: in this case we have a peak error of full amplitude, so the reconstruction gets the sign right, but nothing else. In this particular circumstance, the output is only 1 bit accurate. But the second waveform is perfectly reconstructed - if we use an ideal sinc function filter (known as a Whittaker-Shannon interpolation filter), it will reconstruct the signal perfectly, with no errors, and in particular no transient timing errors.

You may ask if the audiophile filters have such huge problems, then why do people like them? The issue is simple; when you get these huge transient errors, the brain can't make sense of the audio; when it can't make sense of something, it draws a blank, and you can't actually perceive the transients. And when you can't perceive transients properly, then things sound softer - it's the equivalent of making the image go out of focus, as you can no longer perceive sharp details. You can't follow the pitch of the bass, and it sounds big and fat. But of course the image goes big and flat too, as imagery is degraded; and instruments sound similar with poor timbre variations.

So this covers the theoretical ideal - if we use a sinc function filter, it will perfectly reconstruct it, with no transient timing errors.

But to implement an ideal sinc function interpolation filter, we need an infinite amount of processing... Something not possible!
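For reference, the Whittaker-Shannon interpolation formula mentioned above can be written out as follows. This is the standard textbook form, not something taken from the slides; the symbols $x[n]$ (the stored samples), $T = 1/f_s$ (the sample period) and $t$ (continuous time) are notation introduced here for illustration. The sum runs over every sample, past and future, which is why an exact implementation would need an unlimited number of taps.

```latex
x(t) = \sum_{n=-\infty}^{\infty} x[n]\,\operatorname{sinc}\!\left(\frac{t - nT}{T}\right),
\qquad
\operatorname{sinc}(u) = \frac{\sin(\pi u)}{\pi u}, \quad \operatorname{sinc}(0) = 1
```

The formula reconstructs $x(t)$ exactly only when the original signal contains no content at or above $f_s/2$, which is the "bandwidth limited" condition referred to throughout the presentation.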

So we can improve on conventional algorithms' ability to reconstruct transients correctly - and this led to my WTA interpolation algorithm. It has been optimised to reduce the error, with a finite amount of processing (or taps). Actually, the WTA algorithm has evolved as tap length has increased, and today it is very similar to an ideal sinc function - over half the coefficients are identical to the ideal sinc function. A windowing function is the process that is used to tailor the sinc function from an infinite response into a small finite one, of a size we can actually use.
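To make the idea of windowing concrete, here is a minimal Python sketch of a windowed-sinc interpolator. It is only an illustration under stated assumptions: the WTA window is not described in this thread, so a plain Hann window is swapped in as a stand-in, and the function names (`interpolate`, `max_error`), the test tone and the tap counts are all hypothetical choices made for this example. It does not represent the M Scaler's actual filter.

```python
import math


def sinc(x: float) -> float:
    """Normalised sinc: sin(pi*x) / (pi*x), with sinc(0) defined as 1."""
    if x == 0.0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def hann_window(x: float, half_width: float) -> float:
    """Hann window on (-half_width, half_width), zero outside.

    Assumption: this is a generic textbook window, NOT the WTA window.
    """
    if abs(x) >= half_width:
        return 0.0
    return 0.5 * (1.0 + math.cos(math.pi * x / half_width))


def interpolate(samples, t: float, half_width: int) -> float:
    """Estimate the signal at fractional sample position t.

    Only samples within half_width of t contribute, so the infinite
    sinc sum is cut down to roughly 2 * half_width taps.
    """
    lo = max(0, math.ceil(t - half_width))
    hi = min(len(samples) - 1, math.floor(t + half_width))
    total = 0.0
    for n in range(lo, hi + 1):
        d = t - n
        total += samples[n] * sinc(d) * hann_window(d, half_width)
    return total


def max_error(half_width: int) -> float:
    """Worst-case error when interpolating a sine at 16 points per sample."""
    freq = 0.2  # cycles per sample, well below the Nyquist limit of 0.5
    samples = [math.sin(2 * math.pi * freq * n) for n in range(2000)]
    worst = 0.0
    for k in range(16):
        t = 1000 + k / 16  # positions between sample 1000 and 1001
        exact = math.sin(2 * math.pi * freq * t)
        worst = max(worst, abs(interpolate(samples, t, half_width) - exact))
    return worst


if __name__ == "__main__":
    for half_width in (8, 64):
        print(half_width, max_error(half_width))
```

Running it prints the worst-case reconstruction error for a short and a longer filter. With this stand-in window the longer filter tracks the original sine more closely, which is the general relationship between tap length and interpolation accuracy that the post describes; the specific error figures say nothing about the WTA filter itself.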

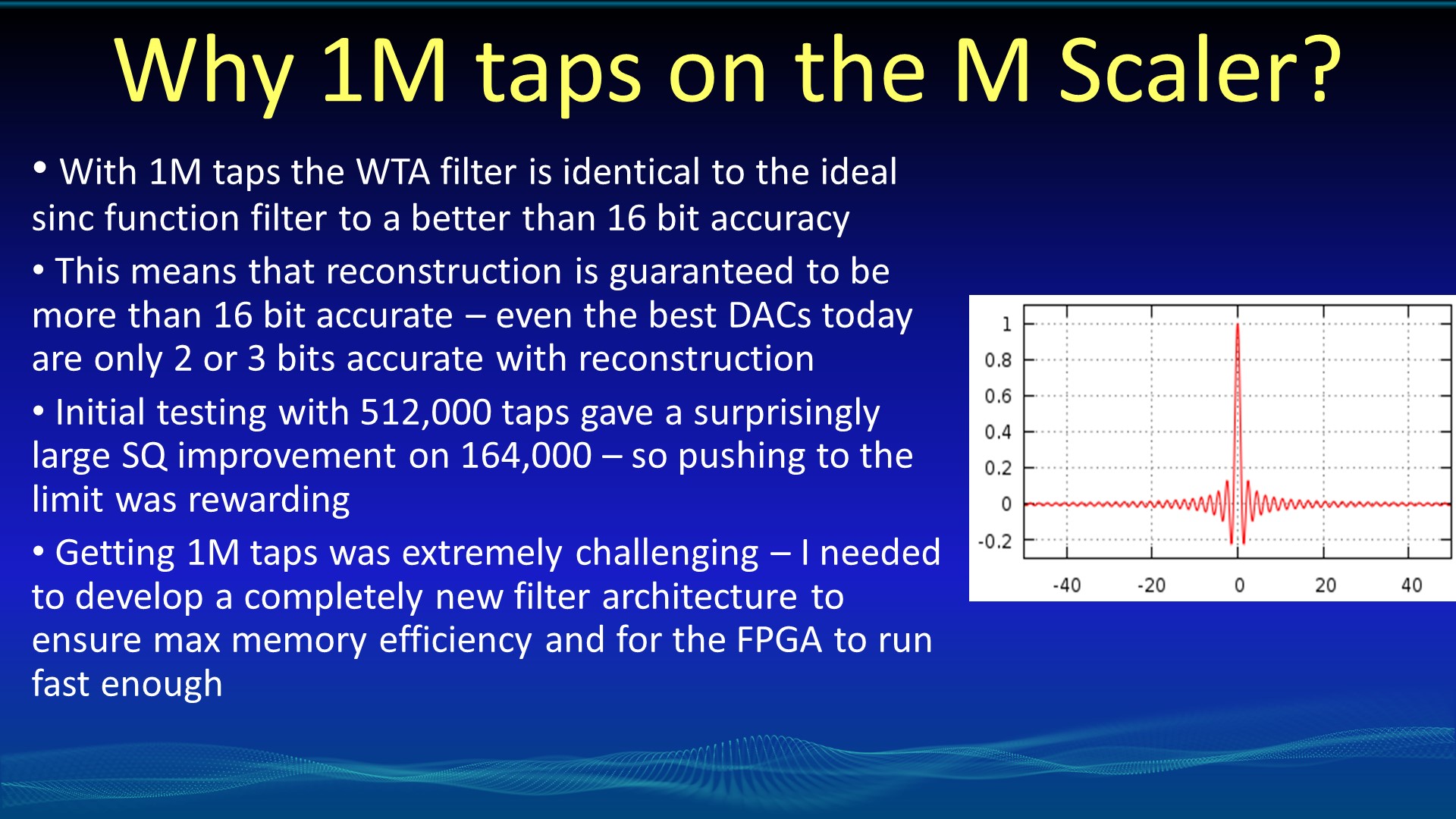

So this links history, and shows the relationship between sound quality and tap length with the WTA filter.

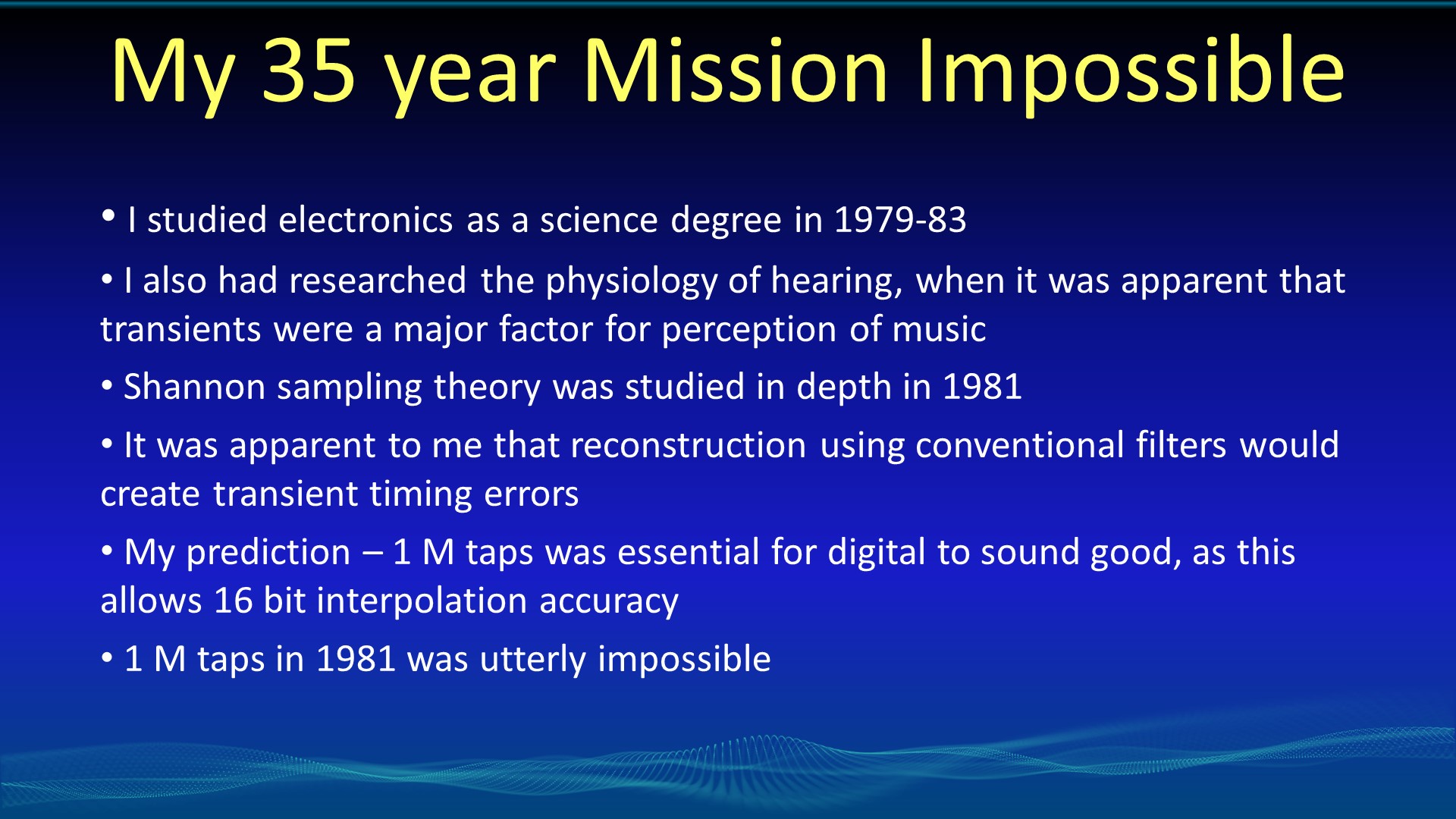

Some personal history - I have been waiting for this for a long time!

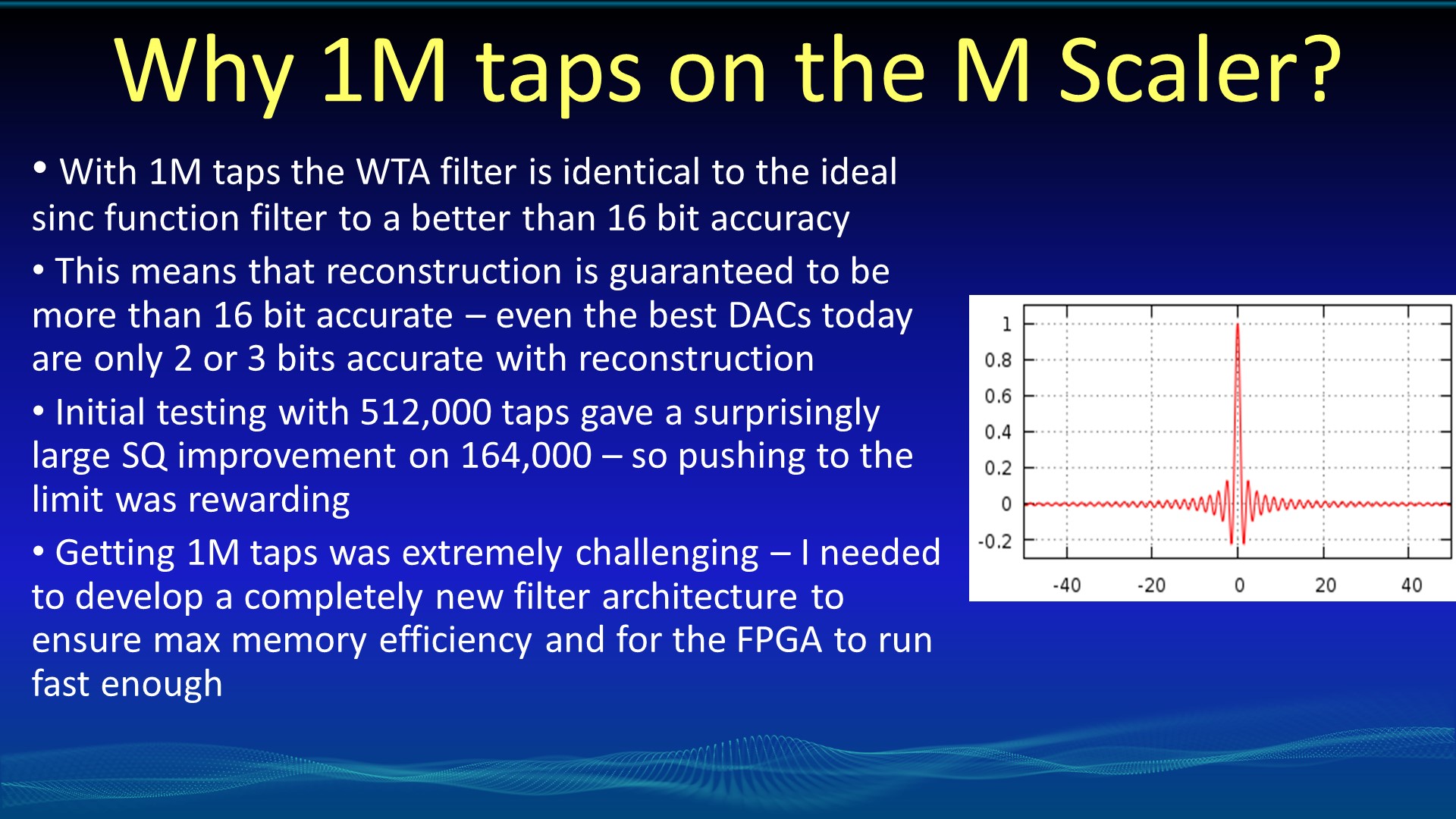

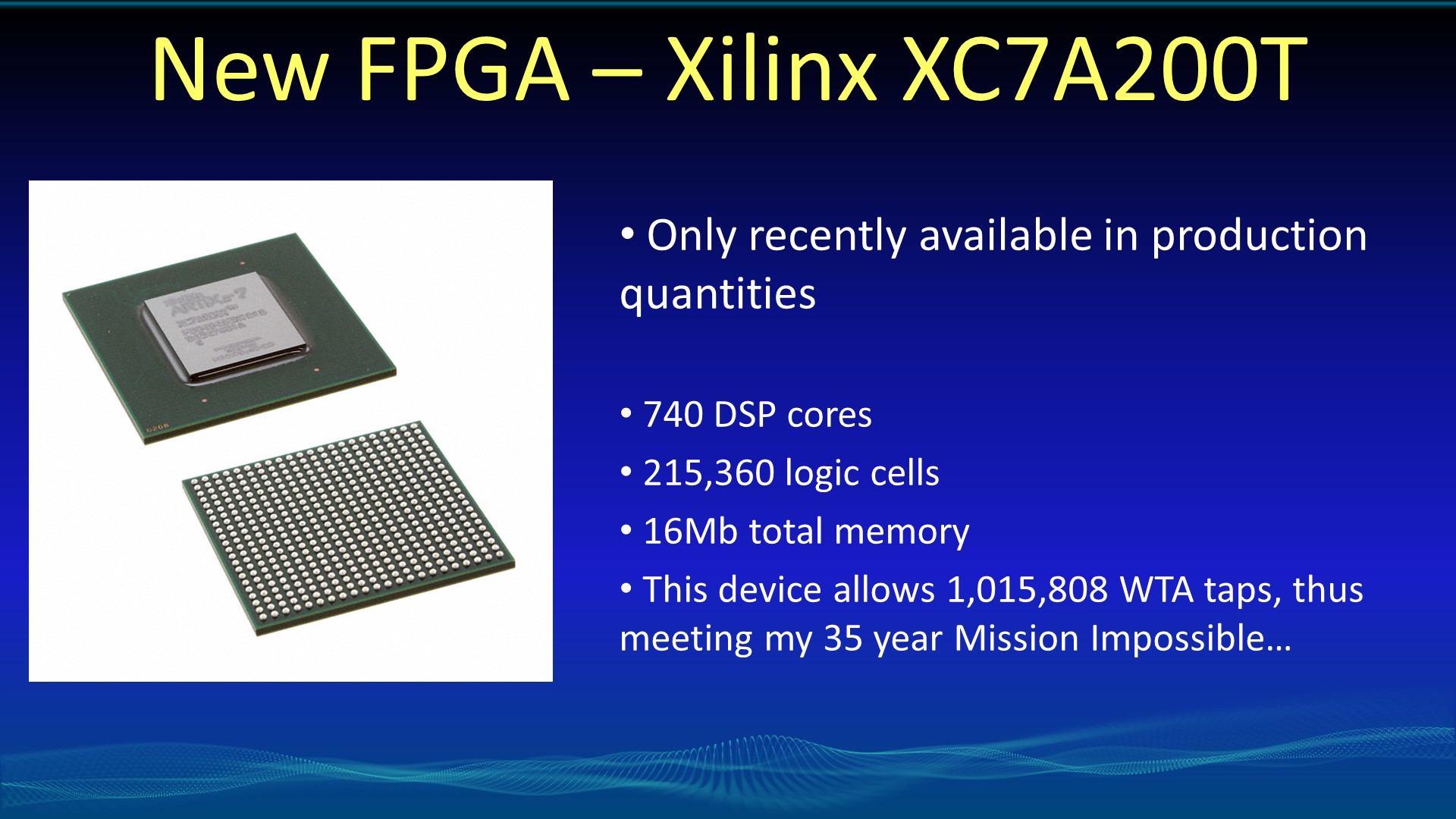

The importance of 1M taps is that the coefficients I use are identical to the ideal sinc coefficients to 16.6 bit accuracy. Since it's only a sinc function that will reconstruct perfectly, this means we are guaranteed to reconstruct the bandwidth limited analogue signal to better than 16 bit accuracy, with all signals, under all conditions. So your 16 bit file is now being perfectly reconstructed - at least to better than 16 bits accuracy and to 705.6 kHz (about 1.4 µs sample period).
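The rate and sample-period figures can be checked with simple arithmetic. The sketch below assumes a 16x upsampling factor, which is inferred here from 705.6 kHz / 44.1 kHz = 16 rather than stated in the post, and the helper names are hypothetical. It shows that 705.6 kHz corresponds to a sample period of about 1.42 µs, while a period of about 1.30 µs corresponds to 768 kHz (16 x 48 kHz).

```python
def upsampled_rate_hz(base_rate_hz: float, factor: int) -> float:
    """Output sample rate after integer-factor upsampling."""
    return base_rate_hz * factor


def sample_period_us(rate_hz: float) -> float:
    """Time between consecutive samples, in microseconds."""
    return 1e6 / rate_hz


if __name__ == "__main__":
    FACTOR = 16  # assumption: inferred from 705.6 kHz / 44.1 kHz
    for base in (44_100, 48_000):
        rate = upsampled_rate_hz(base, FACTOR)
        print(f"{base} Hz x {FACTOR} = {rate:.0f} Hz, "
              f"period = {sample_period_us(rate):.3f} us")
```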

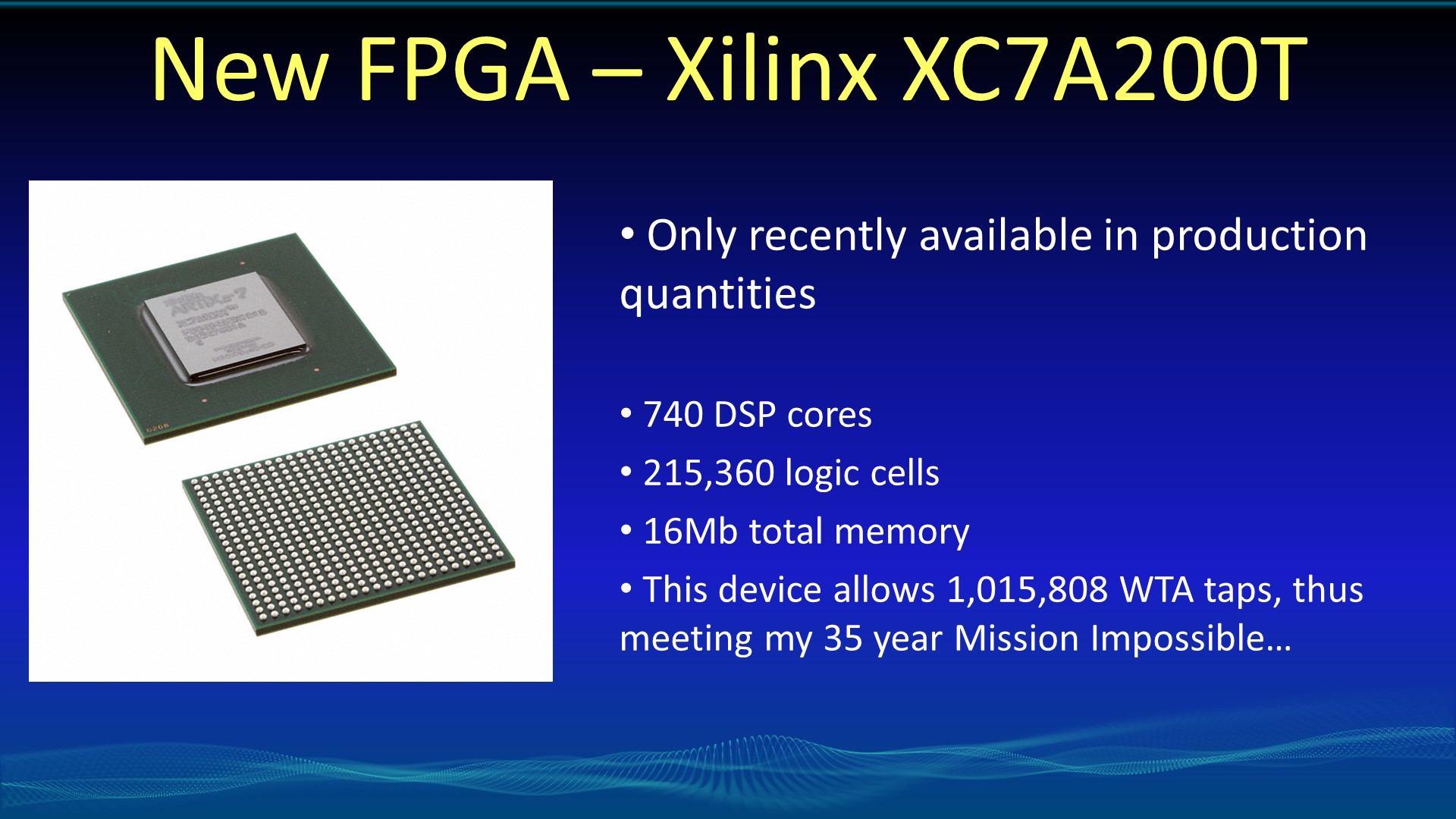

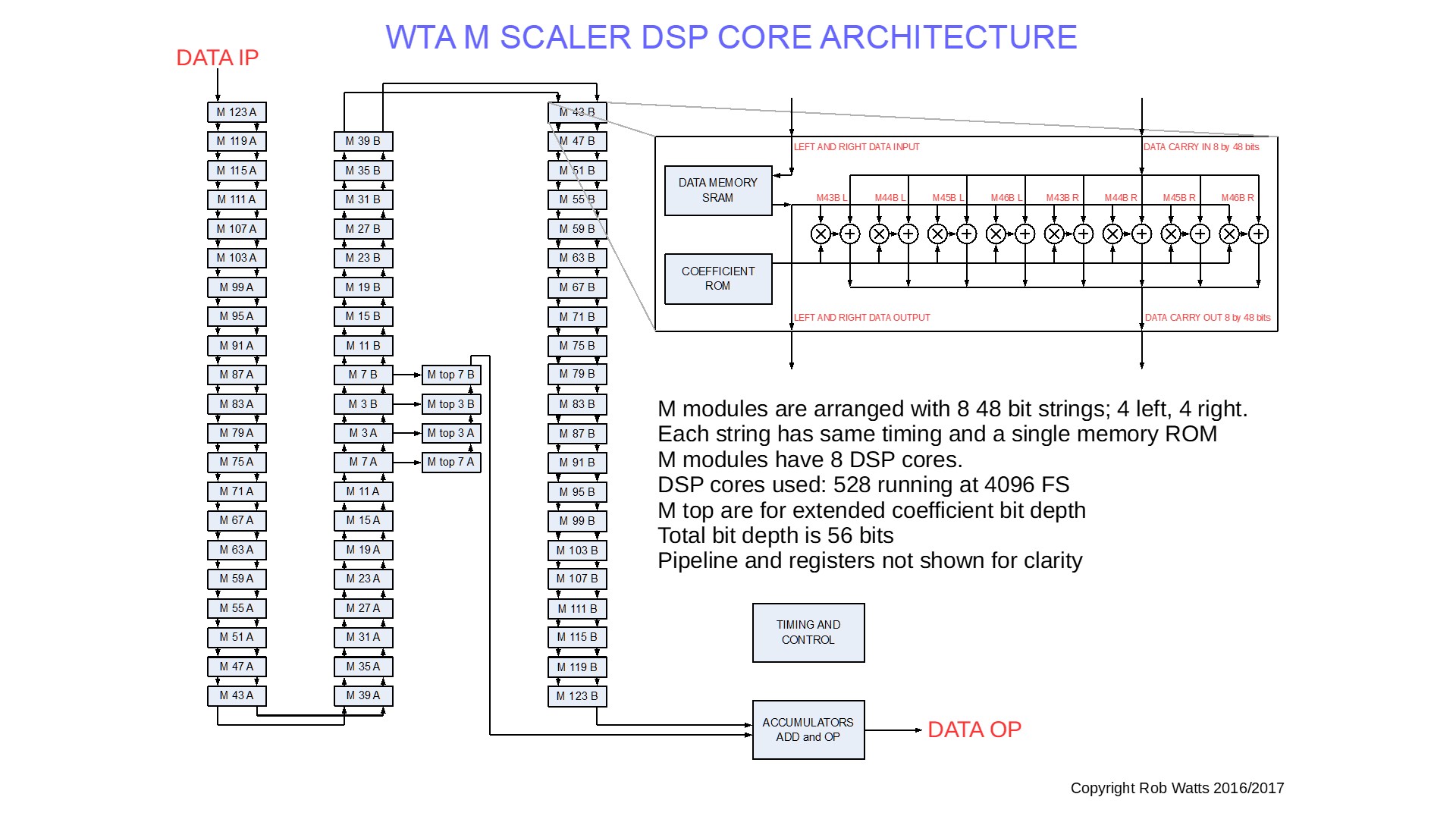

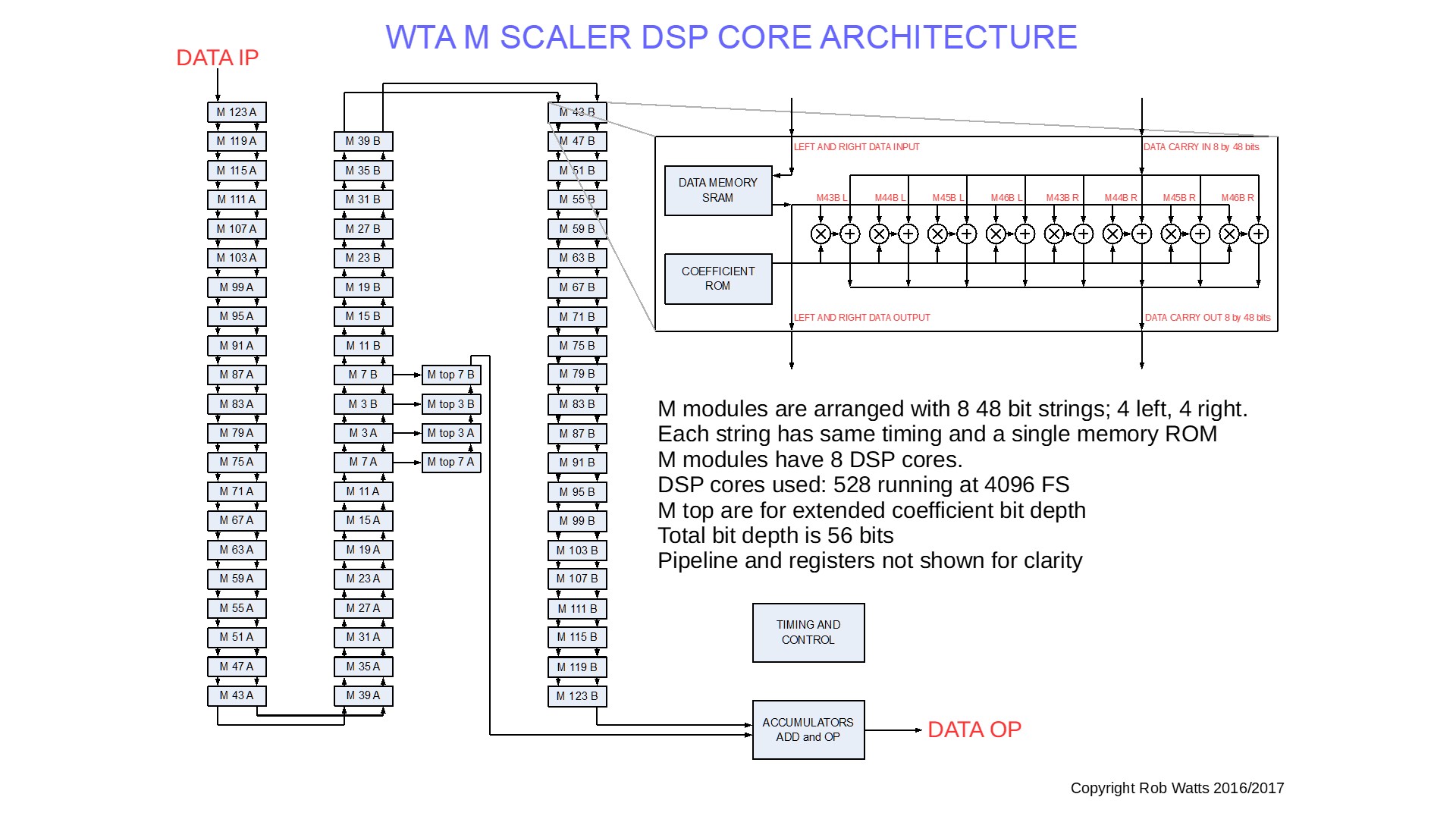

I needed to create a new filter architecture in order to do the M scaler - 528 DSPs operating together, with half a million lines of code. This gives you a flavour of the complexity. Fortunately, I can verify exactly that the filter works perfectly, although it took some months to test and de-bug.

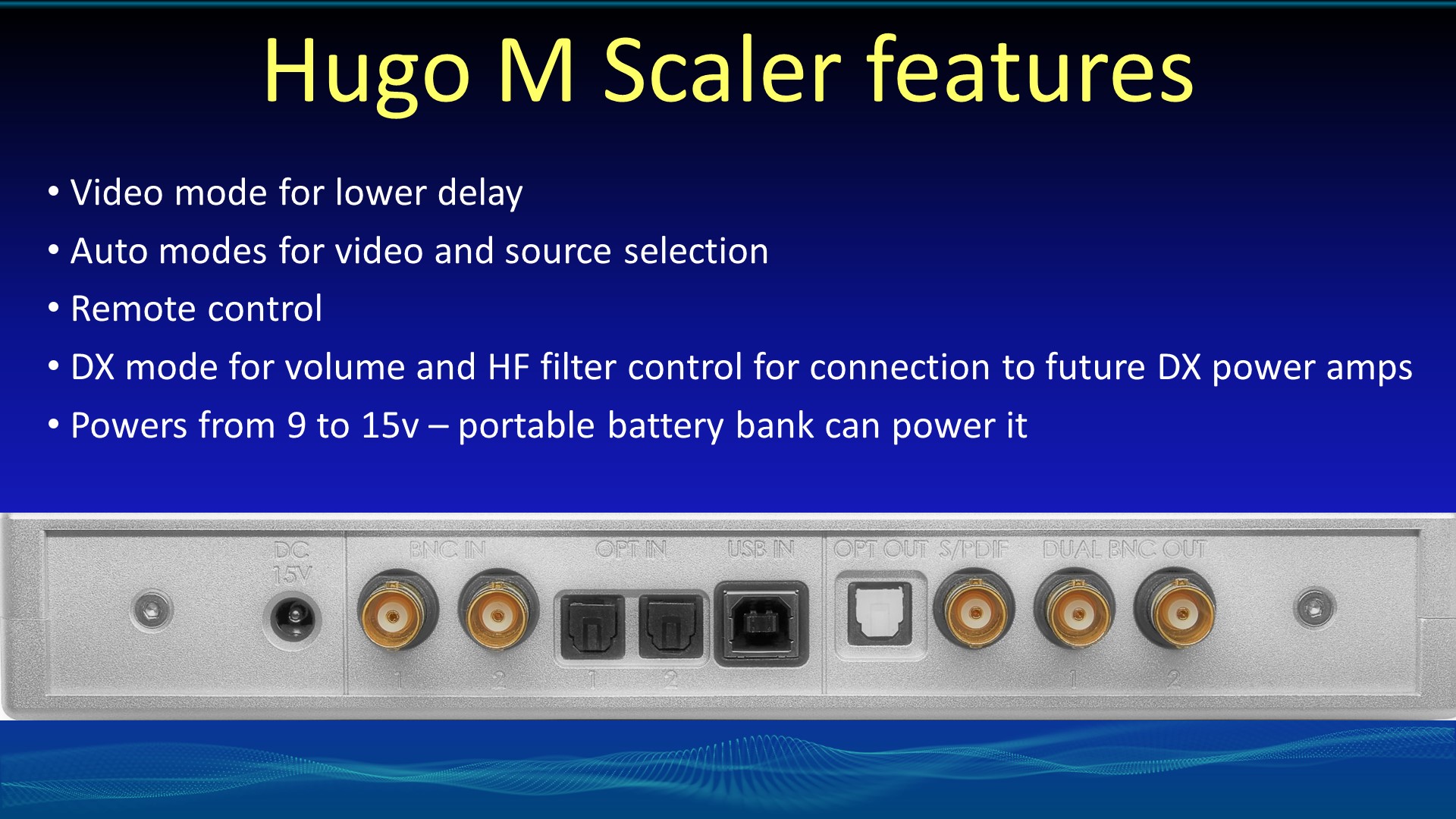

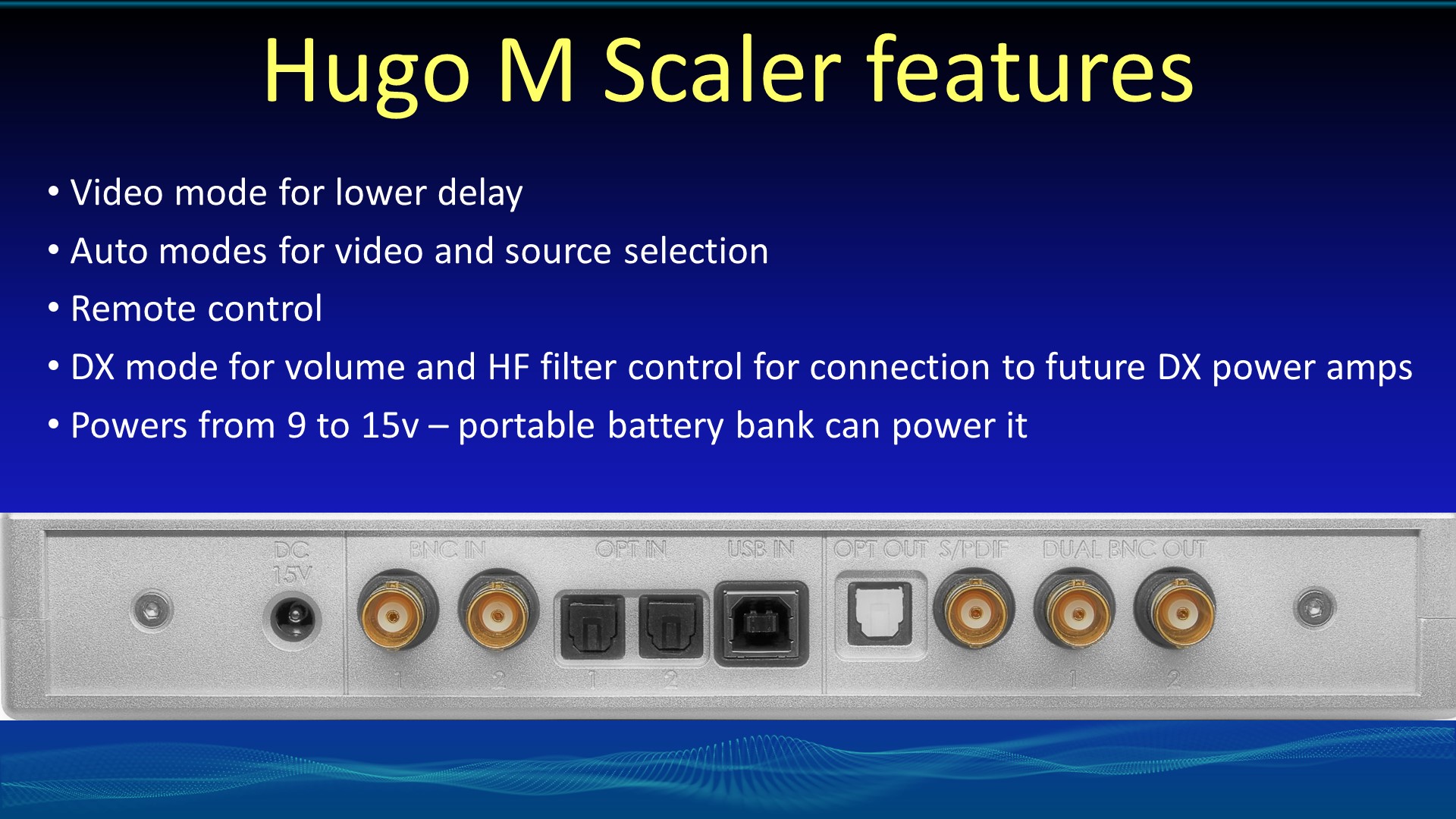

Features of the Hugo M scaler. I added the pass through mode so it was easy to be able to hear the difference.

So just to summarise. This whole concept is actually very very simple in reality:

1. We know that transients are essential from a perception POV.

2. Only an ideal sinc function interpolation filter will reconstruct the bandwidth limited analogue signal perfectly.

3. All other filters will create differences from the original analogue signal.

4. These differences will make transients come a little early or a little late, creating audible problems. The differences in timing depend upon when the signal was sampled and on the past and future signals, so transients are randomly coming backwards or forwards; it's this constant change in the timing of transients that confuses the brain, as it can't process the audio to extract placement, timbre, pitch and tempo information, all things that are essential for musicality.

5. Every time I have doubled the tap length and so increased the accuracy to an ideal sinc function by 1 bit I have noticed improved sound quality, as the transients are being more closely reproduced to the original. And the sound quality changes are consistent with what we know about transients and perception.

6. The M scaler is the same as an ideal sinc function filter to better than 16 bits; this means the bandwidth limited signal is reconstructed to a better than 16 bit accuracy.

To me, the M scaler has made a huge difference to sound quality when assessed objectively; much more importantly it has transformed my own enjoyment of music.

Rob


Whazzzup

Headphoneus Supremus

Nice presentation, will have to read that again, touch above my pay grade. You sneaky little b-st-rds. So as I understand it dual bnc is the preferred connection from m scaler to tt2. So if one owned the original TT, that doesn’t have that connection and less taps etc, the benefit is what numerically. Not implying anything. So the tt2 with all its improvements and dual bnc really upscale the m scaler to its potential. This will make the path forward a little tricky and expensive. You really want both tt2 then m scaler.

Fortunately I’m grooving on my present system and No pressure to move forward, thx for the tech. Wish I could hear this on my system...I mean are our aging ears good enough to appreciate these improvements...

Rob Watts

Member of the Trade: Chord Electronics

- Joined

- Apr 1, 2014

- Posts

- 3,141

- Likes

- 12,368

Mine are!

Many thanks Rob fascinating read. I did a little background reading regarding timbre too. In theory then does the mscaler perform equally to an infinite tap length filter if this could be modelled ? I ask because i assume 1M taps is perfectly reconstructing the original analogue waveform to at least 99.99% accuracy so any increase over 1m taps would have negligible effect or none at all. Thanks MK.

Rob Watts

Member of the Trade: Chord Electronics

- Joined

- Apr 1, 2014

- Posts

- 3,141

- Likes

- 12,368

Many thanks Rob fascinating read. I did a little background reading regarding timbre too. In theory then does the mscaler perform equally to an infinite tap length filter if this could be modelled or will we never know as an infinite tap length filter can never be a reality? I ask because i assume 1M taps is perfectly reconstructing the original analogue waveform so any increase over 1m taps would have negligible effect or none at all. Thanks MK.

Well all I can say is that it will reconstruct to better than 16 bits; given that there is a big change from 0.5 M taps to 1 M taps then I can't be sure if we can get even more SQ improvement from more taps. Davina project will tell us for certain how much loss is involved with the interpolation process. All I can say for sure is that it makes a huge difference for me now - and that the only 768 kHz file I have heard sounds like an M scaler is being used...

Triode User

Member of the Trade: WAVE High Fidelity

I mean are our aging ears good enough to appreciate these improvements...

My 63 year old ears are!

ufospls2

Headphoneus Supremus

- Joined

- Dec 9, 2014

- Posts

- 2,467

- Likes

- 4,000

This is a silly question, but how does one know if what they are sending the M Scaler is bit perfect? For example, is Spotify premium bit perfect? Is 320kbps MP3 bit perfect? Is lossless FLAC bit perfect? Or does it depend on the file, rather than the file type?

ZappaMan

Headphoneus Supremus

Rob, is there anything fundamentally different about how the M Scaler receives and processes its USB input (apart from galvanic isolation) compared to the Blu2?

If users perceived good synergy between a server/usb reclocker and blu2, is it very likely, that that same synergy will be with the mscaler?
