charleski (100+ Head-Fier, joined Nov 10, 2014)

I'm posting this here to get some feedback on my technique. Please jump in and let me know if I've done anything wrong or made any invalid assumptions.

My source component is an LG G7, which I got late last year and am very happy with. For those not familiar with the phone, its headphone jack is powered by an ES9218P which marries ESS's Sabre DAC with an on-chip headphone amplifier. Up until recently I've been using it to drive low-impedance iems and had no problem at all with the volume output, but I recently decided to resurrect my vintage (25yr-old) HD580 Jubliee cans which are 300ohm nominal. The phone correctly switched to high-impedance mode (it auto-switches output gain depending on the impedance sensed at the jack), but I did notice that I was having to turn the volume up higher than when using the iems. Which left me wondering if I really needed a proper external amp to get the best out of them. The review of the G7 on audiosciencereview gives a power output of 14mW into 300ohms. But I've had problems finding a proper spec for the HD580's sensitivity. There's a page on Stereophile's site claiming it's '97dB' with no unit attached, though their other sensitivity specs seem to be in db/mW, and I found another review site that stated it was '~98dB/mW' (these are all for the standard HD580 model, but AFAIK the Jubilee model should be identical in this respect). If we take it as 97dB/mW, the G7 should be able to drive these phones to a maximum of around 108.5dB, which is reasonably close to the recommendation of 110dB (which refers to maximum transitory peak output, obviously average sane listening level will be 30-40dB lower and this number just specifies maximum headroom).

So on paper it looks fine, but I wanted to find some way to check this, if only to prevent myself giving in to audiophilia nervosa and buying a more powerful amp purely to satisfy a baseless anxiety. But I wanted to do this on the cheap, and sadly I've yet to find any bargain APx555s is the SpecialBuy section of my local Aldi. So here's the plan I came up with, which cost me a grand total of $0 for equipment.

I'd expect the line-in input impedance to be around 10kOhms, which shouldn't have much effect on the load seen by the amp when placed in parallel with the 300Ohm cans. I then used REW to generate a wav file with a 10-22k sweep at -0.3dB, which should represent the maximum signal level the player might encounter. I checked that the phone was still in high-impedance mode and played back this file through Neutron at 8 different volume levels (Neutron's only fault is that while in-app it forces volume changes to fairly crude 3dB steps. The volume numbers given below are those seen in the app). Each playback sweep was recorded in Audition and then saved, and the recorded audio was imported in REW for analysis.

Results:

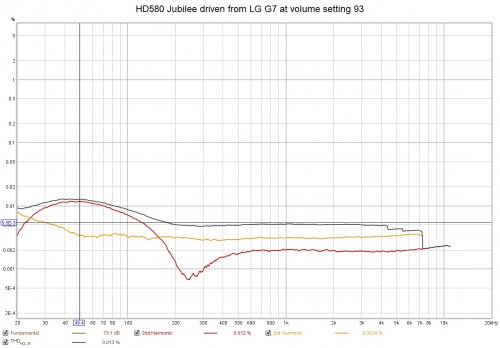

Obviously full volume is completely unusable (this is something I noted before in my testing with iems). But anything below that is just fine, and the maximum volume I'd been using was 87, meaning the amp still had at least another 3dB available. There was some increase in THD in the bass, but this peaked at 0.013% at around 50Hz on Vol93.

It's important to note that this experiment was designed to answer a specific question: should I waste money on an external amp? The actual distortion produced by the on-chip amp will be lower than measured here, as these numbers include distortion coming from the computer's recording interface. They merely provide an upper bound to the amp's output distortion, and from Vol93 down are well below audibility.

The answer to my question is clearly No, which leaves my wallet with a smile on its face. Since an extra amp is just going to increase noise and distortion without providing any truly usable increase in headroom (unless I decide I want to commit auditory suicide), it also means I've avoided degrading the sound quality as well.

As I said at the start, though, I thought I'd run this by you lot to see if I've done anything clearly wrong here which might affect my conclusion.

My source component is an LG G7, which I got late last year and am very happy with. For those not familiar with the phone, its headphone jack is driven by an ES9218P, which combines ESS's Sabre DAC with an on-chip headphone amplifier. Until recently I'd been using it to drive low-impedance IEMs and had no problems at all with volume, but I recently decided to resurrect my vintage (25-year-old) HD580 Jubilee cans, which are 300ohm nominal. The phone correctly switched to high-impedance mode (it auto-switches output gain depending on the impedance it senses at the jack), but I noticed I was having to turn the volume up higher than with the IEMs, which left me wondering whether I really needed a proper external amp to get the best out of them.

The audiosciencereview review of the G7 gives a power output of 14mW into 300ohms. Finding a proper spec for the HD580's sensitivity has been harder. There's a page on Stereophile's site claiming it's '97dB' with no unit attached, though their other sensitivity specs seem to be in dB/mW, and another review site states '~98dB/mW' (these are all for the standard HD580, but AFAIK the Jubilee should be identical in this respect). If we take it as 97dB/mW, the G7 should be able to drive these phones to a maximum of around 108.5dB (97 + 10·log10(14)), which is reasonably close to the recommended 110dB. That figure refers to maximum transient peak output; average sane listening levels will be 30-40dB lower, so it really just specifies the headroom needed.
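The paper calculation above can be sketched in a few lines. This assumes the sensitivity figure really is dB SPL at 1mW (the Stereophile '97dB' has no unit, so that is itself an assumption) and treats the 300ohm nominal impedance as a plain resistor, although real headphone impedance varies with frequency. It also shows the RMS voltage the amp has to swing to deliver 14mW into 300ohms.

```python
import math

def max_spl_db(sensitivity_db_per_mw: float, power_mw: float) -> float:
    """Peak SPL (dB) reachable at power_mw, for a sensitivity given in dB SPL/mW."""
    # Power ratio relative to the 1 mW reference, expressed in dB.
    return sensitivity_db_per_mw + 10 * math.log10(power_mw)

def rms_voltage(power_mw: float, impedance_ohm: float) -> float:
    """RMS voltage needed to deliver power_mw into a resistive load: V = sqrt(P * R)."""
    return math.sqrt(power_mw / 1000 * impedance_ohm)

G7_POWER_MW = 14.0        # ASR figure quoted above, into 300 ohms
HD580_IMPEDANCE = 300.0   # nominal, assumed purely resistive here

for sens in (97.0, 98.0):  # the two sensitivity figures found online
    print(f"{sens} dB/mW -> {max_spl_db(sens, G7_POWER_MW):.1f} dB SPL max")
print(f"{rms_voltage(G7_POWER_MW, HD580_IMPEDANCE):.2f} V rms at full output")
```

With the 98dB/mW figure the ceiling moves up by exactly 1dB, to about 109.5dB, so the conclusion doesn't hinge on which spec is right.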

So on paper it looks fine, but I wanted some way to check this, if only to stop myself giving in to audiophilia nervosa and buying a more powerful amp purely to soothe a baseless anxiety. I also wanted to do it on the cheap, and sadly I've yet to find any bargain APx555s in the SpecialBuy section of my local Aldi. So here's the plan I came up with, which cost me a grand total of $0 in equipment.

```
LG G7 ---> Y-splitter ---> HD580J
                |
                |---> line-in on computer audio interface
```

I'd expect the line-in input impedance to be around 10kohm, which in parallel with the 300ohm cans shouldn't have much effect on the load seen by the amp. I then used REW to generate a WAV file containing a 10Hz-22kHz sweep at -0.3dBFS, which should represent the maximum signal level the player is likely to encounter. After checking that the phone was still in high-impedance mode, I played this file back through Neutron at 8 different volume levels. (Neutron's only fault is that, in-app, it forces volume changes into fairly crude 3dB steps; the volume numbers given below are those shown in the app.) Each sweep was recorded in Audition and saved, and the recordings were then imported into REW for analysis.
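The claim that the line-in barely changes the load is just the parallel-resistance formula. A quick check, assuming the 10kohm input impedance guessed above (it wasn't measured) and treating both loads as resistive:

```python
def parallel(r1_ohm: float, r2_ohm: float) -> float:
    """Equivalent resistance of two resistive loads in parallel."""
    return r1_ohm * r2_ohm / (r1_ohm + r2_ohm)

HEADPHONES = 300.0   # HD580 nominal impedance
LINE_IN = 10_000.0   # assumed line-in input impedance (not measured)

load = parallel(HEADPHONES, LINE_IN)
drop_pct = 100 * (HEADPHONES - load) / HEADPHONES
print(f"Load seen by amp: {load:.1f} ohm ({drop_pct:.1f}% lower than the cans alone)")
```

A drop of roughly 3% (about 291ohm instead of 300ohm) is small enough that the amp should behave much as it does with the cans alone.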

Results:

| Volume (Neutron) | THD at 1kHz |
|---|---|
| 100 | 11.3% |
| 93 | 0.0051% |
| 87 | 0.0039% |
| 80 | 0.0045% |
| 73 | 0.0063% |
| 67 | 0.0053% |
| 60 | 0.0050% |
| 53 | 0.0063% |

Obviously full volume is completely unusable (something I'd already noticed when testing with IEMs). But everything below that is fine, and the highest volume I'd been using was 87, so the amp still had at least another 3dB in hand. THD rose somewhat in the bass, but it peaked at 0.013% at around 50Hz at Vol 93.
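THD is often quoted in dB relative to the fundamental rather than as a percentage, via 20·log10(THD/100). The sketch below converts the results above and, assuming each volume number corresponds to one of Neutron's 3dB steps as described earlier, labels each row with its approximate attenuation below Vol 100. The step-to-dB mapping is an assumption, not something measured.

```python
import math

# THD at 1 kHz from the results above, keyed by Neutron's volume number
thd_percent = {100: 11.3, 93: 0.0051, 87: 0.0039, 80: 0.0045,
               73: 0.0063, 67: 0.0053, 60: 0.0050, 53: 0.0063}

def percent_to_db(pct: float) -> float:
    """Express a THD percentage as dB relative to the fundamental."""
    return 20 * math.log10(pct / 100)

# Assumption: each listed volume is one 3 dB Neutron step below the previous one.
STEP_DB = 3.0
for step, vol in enumerate(sorted(thd_percent, reverse=True)):
    thd_db = percent_to_db(thd_percent[vol])
    print(f"Vol {vol:3d}: ~{step * STEP_DB:4.1f} dB below Vol 100, THD {thd_db:6.1f} dB")

print(f"Bass peak (Vol 93, ~50Hz): THD {percent_to_db(0.013):.1f} dB")
```

On this scale Vol 100 sits at roughly -19dB, while every other row is below -80dB, and even the 50Hz peak is around -78dB.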

It's important to note that this experiment was designed to answer one specific question: should I waste money on an external amp? The actual distortion produced by the on-chip amp will be lower than measured here, because these numbers also include distortion from the computer's recording interface. They only provide an upper bound on the amp's output distortion, and from Vol 93 downwards they are well below audibility.

The answer to my question is clearly no, which leaves my wallet with a smile on its face. Since an extra amp would just add noise and distortion without providing any truly usable increase in headroom (unless I decide to commit auditory suicide), skipping it also means I've avoided degrading the sound quality.

As I said at the start, though, I thought I'd run this by you lot to see if I've done anything clearly wrong here which might affect my conclusion.


I was actually just measuring the limit of the input buffer on the motherboard. One answer would be to insert a voltage divider to pad the input down to a level it can accept, though this would cost signal-to-noise ratio (and the noise floor is already not great). I might see whether an entry-level pro-audio interface can take higher levels, since these can be picked up second-hand quite cheaply.
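A voltage-divider pad like the one mentioned above can be sized with the standard divider formula. This sketch assumes a simple two-resistor L-pad (series resistor, then a shunt resistor to ground) feeding the same assumed 10kohm line-in, which loads the shunt leg; the resistor values are hypothetical and chosen only for illustration. It also reports the pad's input impedance, since that is the extra load the splitter places across the amp alongside the cans.

```python
import math

def parallel(a: float, b: float) -> float:
    """Equivalent resistance of two resistors in parallel."""
    return a * b / (a + b)

def pad_attenuation_db(r_series: float, r_shunt: float, r_input: float) -> float:
    """Attenuation of an L-pad driving an input of impedance r_input.
    The input sits in parallel with the shunt leg, lowering it."""
    bottom = parallel(r_shunt, r_input)
    return 20 * math.log10(bottom / (r_series + bottom))

# Hypothetical values: 10k series, 10k shunt, into an assumed 10k line-in.
R_SERIES, R_SHUNT, R_LINE_IN = 10_000.0, 10_000.0, 10_000.0
print(f"Pad attenuation: {pad_attenuation_db(R_SERIES, R_SHUNT, R_LINE_IN):.1f} dB")

# Impedance the pad presents to the amp, in parallel with the 300 ohm cans.
pad_load = R_SERIES + parallel(R_SHUNT, R_LINE_IN)
print(f"Pad input impedance: {pad_load:.0f} ohm")
```

Every dB of padding lowers the signal reaching the interface by the same amount, while the interface's own noise stays where it is, which is the noise-floor trade-off mentioned above.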